|

Eric C (RL)

Posted 5 Years Ago

|

|

Group: Administrators

Last Active: 3 Weeks Ago

Posts: 552,

Visits: 6.0K

|

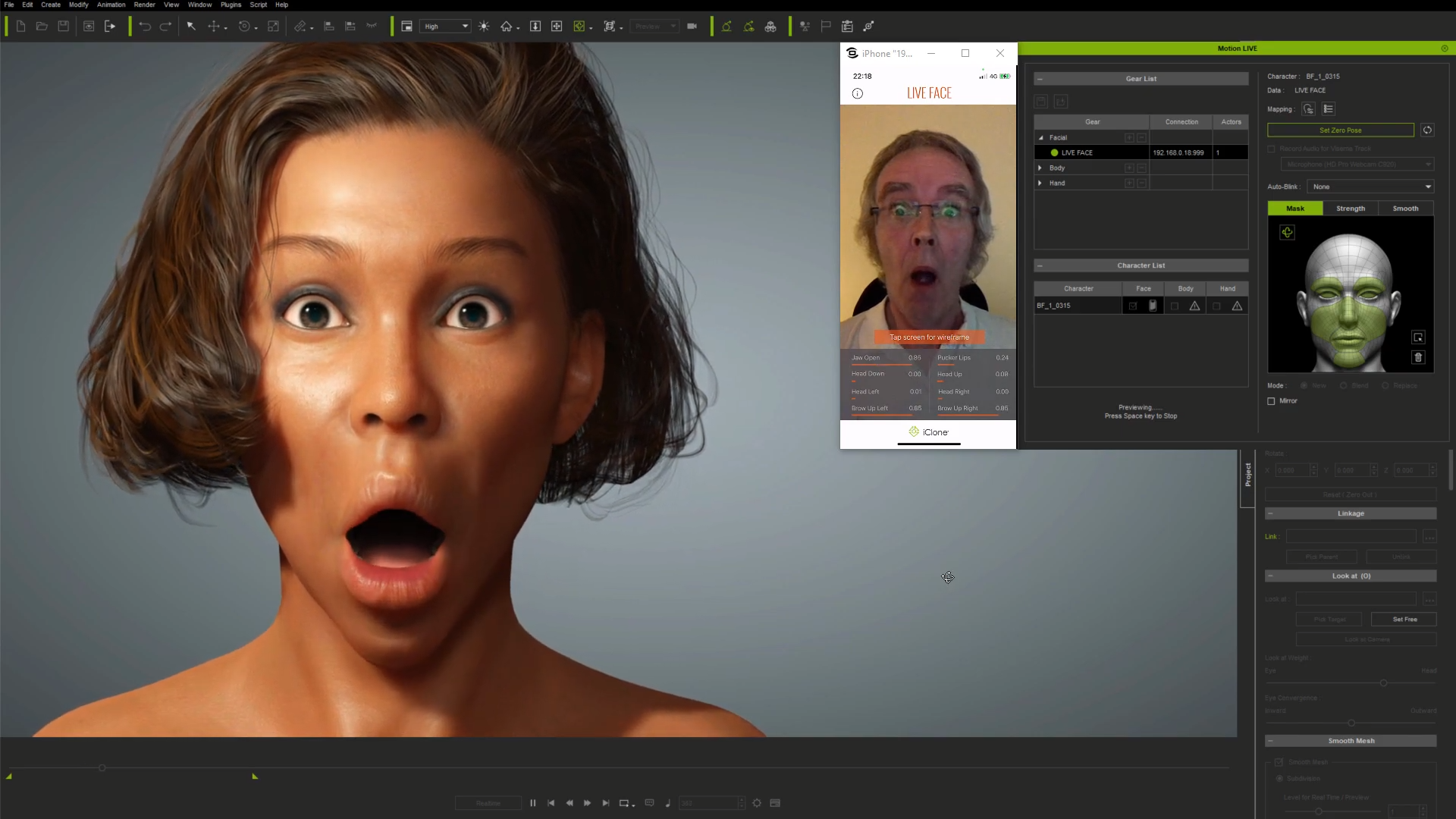

What's new for Motion LIVE 1.1 and LiveFace Profile 1.05

Reallusion's latest facial mo-cap tools go further, addressing core issues in the facial mo-cap pipeline so you can produce better animation. From all-new ARKit expressions and easily adjustable raw mo-cap data and re-targeting, to multi-track facial recording and essential mo-cap cleanup, we're covering all the bases to provide the most powerful, flexible and user-friendly facial mo-cap approach yet. Read below to find out what we have achieved in this update.

The biggest issue with all live mo-cap is that it's noisy, twitchy and difficult to work with, so smoothing the live data in real time is a major necessity. And if the mo-cap data isn't right, the model's expressions aren't what you want, or the current mo-cap recording isn't quite accurate, you need access to the back-end tools to adjust all of this. Finally, while you can smooth the data in real time from the start, you can also clean up existing recorded clips using proven smoothing methods that can be applied at the push of a button.

Learn more on the iPhone Face Mocap page.

- 1. iPhone LiveFace App Update (High Tracking Precision)

LIVE FACE data streaming has increased in precision from two decimal places to five.

(*Requires updating the LIVE FACE iPhone app to version 1.0.8 (Feb 22, 2021).) / (see video)
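To illustrate why the extra decimal places matter, here is a minimal sketch (not the app's actual streaming code) that rounds a slowly changing tracking weight to two and to five decimal places. The sample values and the `quantize` helper are made up for illustration.

```python
def quantize(value: float, decimals: int) -> float:
    """Round a tracking weight to a fixed number of decimal places."""
    return round(value, decimals)


# Hypothetical slow eyelid movement: the weight rises by 0.002 per frame.
frames = [0.250 + 0.002 * i for i in range(5)]

two_dp = [quantize(v, 2) for v in frames]
five_dp = [quantize(v, 5) for v in frames]

# At two decimals several frames collapse onto the same value (visible stepping);
# at five decimals every frame keeps its own value.
print(len(set(two_dp)), "distinct values at 2 decimals")
print(len(set(five_dp)), "distinct values at 5 decimals")
```

With coarse rounding, subtle movements turn into visible steps, which is one plausible reason finer streamed values give a cleaner result.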

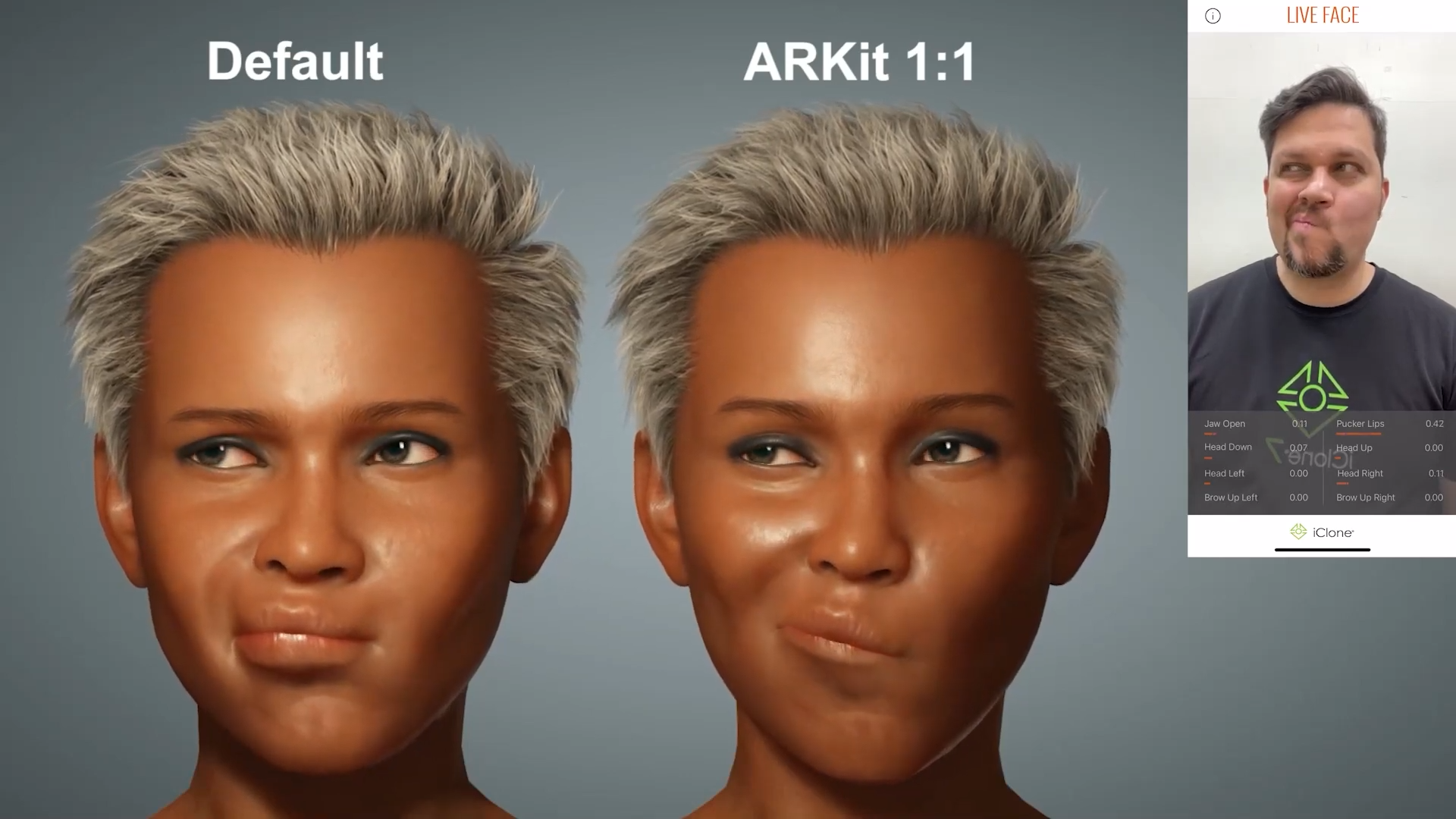

- ARKit 1:1 Blendshapes (52+11)

iPhone LiveFace supports ExpressionPlus (ARKit 1:1 mode). The iPhone tracking data is now mapped one-to-one to 52 scan-based blend-shapes on the CC3+ character base, giving a much more expressive facial performance. / (see video)

Additional tongue movement is also included. (Visit the "Facial Expression" page.)

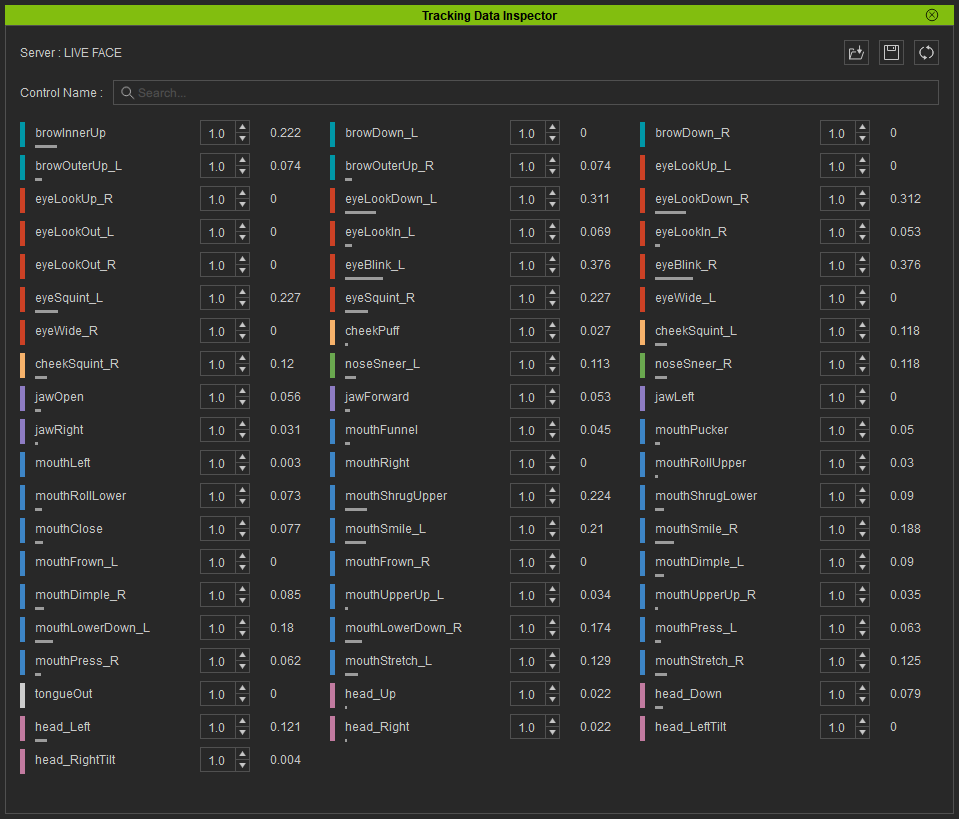

- 2. Tracking Data Inspector: Multiplier added

- No longer just a data inspector: with "Multiplier", you can boost or weaken the tracking data strength.

- Use this feature to compensate for imbalances in tracking data strength and to accommodate the performer's effective range.

- Added color coding for brows, eyes, jaw, cheek, and tongue. It makes it easy to identify the triggered facial region.

(see video)
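Conceptually, a multiplier scales each tracked value before it drives the character. The sketch below is only an illustration of that idea: the channel names are hypothetical, and clamping the result to the 0..1 range is an assumption, not documented Motion LIVE behavior.

```python
def apply_multiplier(weight: float, multiplier: float) -> float:
    """Scale a tracked weight, then clamp it to the 0..1 range (assumed)."""
    return max(0.0, min(1.0, weight * multiplier))


# Hypothetical channel names and values for one frame of tracking data.
tracked = {"brow_raise": 0.40, "jaw_open": 0.90}
multipliers = {"brow_raise": 2.0, "jaw_open": 0.5}

adjusted = {
    name: apply_multiplier(weight, multipliers.get(name, 1.0))
    for name, weight in tracked.items()
}
print(adjusted)
```

A performer whose brows only reach a weak signal can be boosted, while an overly strong jaw signal can be toned down, without re-recording.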

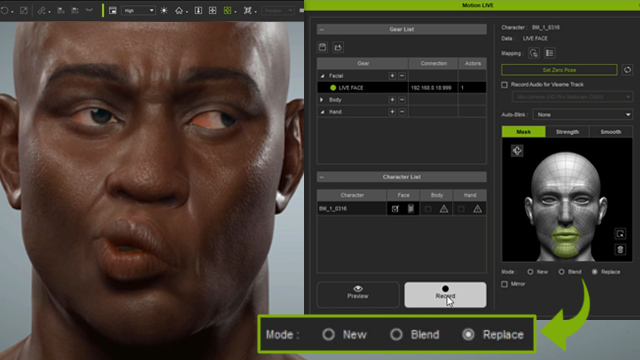

- 3. Multi-pass Recording: Capture Mode - New, Add, Replace

- New: Start a new recording, overwriting the original clip.

- Replace: A very powerful feature! You can now use Replace to erase the selected muscle area, or to replace it with a new puppet performance.

- Add: Blend the new puppet performance onto the underlying clip.

(see video)
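The three capture modes can be pictured as different ways of combining a new pass with the existing clip. The following is a rough sketch for a single frame, assuming each frame is a dictionary of channel weights; the channel names, the additive blend for Add, and the 0..1 clamp are all assumptions made for illustration.

```python
def capture(existing: dict, new: dict, mode: str, selected=()) -> dict:
    """Combine one frame of a new pass with the existing clip frame."""
    if mode == "new":
        # Start over: the original frame is discarded.
        return dict(new)
    result = dict(existing)
    if mode == "replace":
        # Only the selected channels change; a channel with no new data is erased.
        for channel in selected:
            result[channel] = new.get(channel, 0.0)
    elif mode == "add":
        # Layer the new pass on top of the underlying frame (assumed additive).
        for channel, weight in new.items():
            result[channel] = max(0.0, min(1.0, result.get(channel, 0.0) + weight))
    else:
        raise ValueError(f"unknown capture mode: {mode}")
    return result


existing = {"brow": 0.5, "jaw": 0.2}
new_pass = {"jaw": 0.6}
print(capture(existing, new_pass, "replace", selected={"jaw"}))
```

Here Replace with only `jaw` selected keeps the recorded brow performance untouched, which is the point of multi-pass recording.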

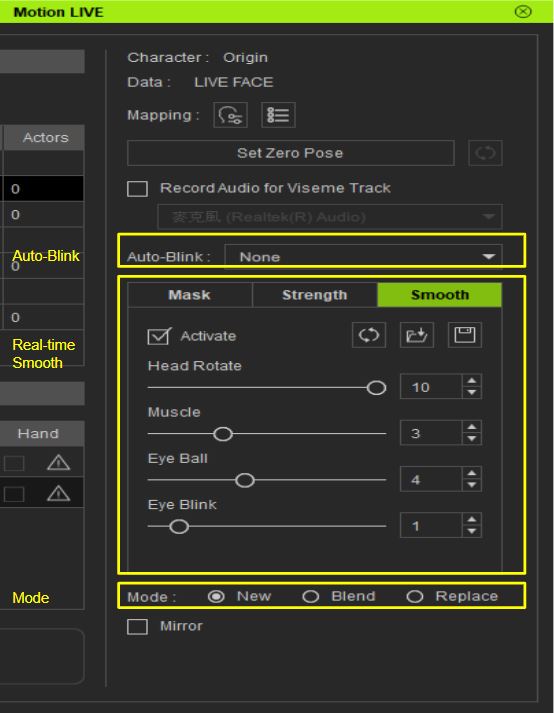

- 4. Real-time Smooth Tab

- Added a new Smooth tab with separate smooth sliders for Head Rotation, Muscle, Eyes, and Eyelid.

- Head Rotation usually needs some smoothing to give a natural head turn.

(see video)
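One common way to smooth live data with a per-region strength is exponential smoothing. The sketch below uses that technique purely as an illustration; it is an assumption, not a description of the filter Motion LIVE actually applies, and the group names and strength values are hypothetical.

```python
class RealtimeSmoother:
    """Exponential smoothing with a separate strength per group (0 = off)."""

    def __init__(self, strengths: dict):
        self.strengths = strengths  # e.g. {"head_rotation": 0.5}
        self.state = {}             # last smoothed value per group

    def update(self, group: str, value: float) -> float:
        strength = self.strengths.get(group, 0.0)
        previous = self.state.get(group, value)  # first sample passes through
        smoothed = strength * previous + (1.0 - strength) * value
        self.state[group] = smoothed
        return smoothed


smoother = RealtimeSmoother({"head_rotation": 0.5, "eyelid": 0.2})
for angle in [0.0, 10.0, 10.0]:
    print(smoother.update("head_rotation", angle))
```

A higher strength removes more jitter but also adds lag, which is why separate sliders per region are useful: head rotation tolerates more smoothing than fast eyelid motion.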

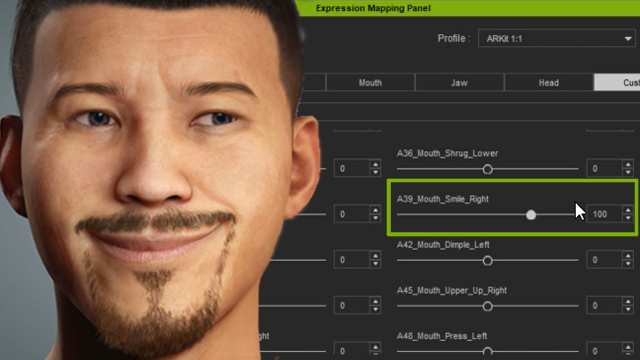

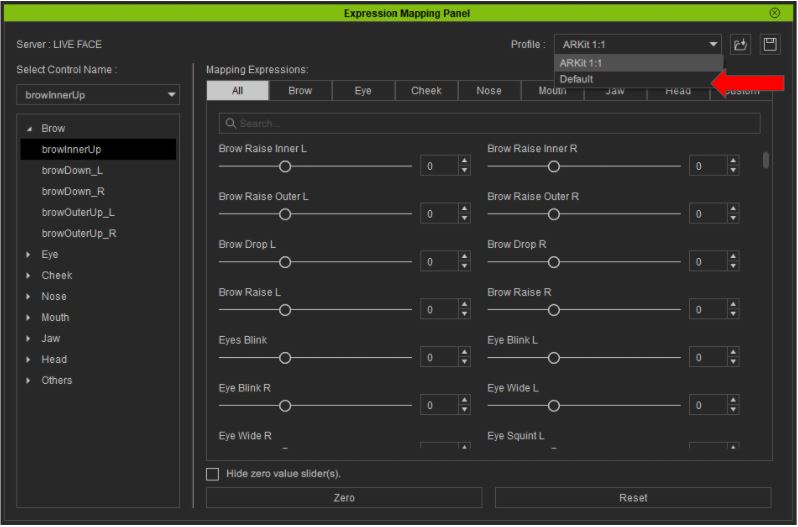

- 5. New Expression Mapping

- Re-targeting (tracking data to blend-shape) is made easy with the new, flexible mapping UI.

- Default and ARKit 1to1 presets provide instant re-targeting for CC3+ avatars.

- Customize expressions easily by blending them.

(see video)
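Re-targeting can be thought of as a weighted mapping from tracking controls to character blend-shapes. The sketch below shows the idea only; `jawOpen` is an ARKit blend-shape name, while the target names `Jaw_Open` and `Mouth_Open`, the weights, and the clamp to 1.0 are hypothetical.

```python
def retarget(tracking: dict, mapping: dict) -> dict:
    """Drive character blend-shapes from tracking controls via weighted links."""
    output = {}
    for control, targets in mapping.items():
        value = tracking.get(control, 0.0)
        for shape, weight in targets.items():
            # Several controls may contribute to one shape; clamp the sum (assumed).
            output[shape] = min(1.0, output.get(shape, 0.0) + value * weight)
    return output


# Hypothetical custom mapping: one tracking control blended into two shapes.
mapping = {"jawOpen": {"Jaw_Open": 1.0, "Mouth_Open": 0.5}}
print(retarget({"jawOpen": 0.5}, mapping))
```

A one-to-one preset would correspond to each control mapping to a single shape with weight 1.0, while a custom expression blends several shapes.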

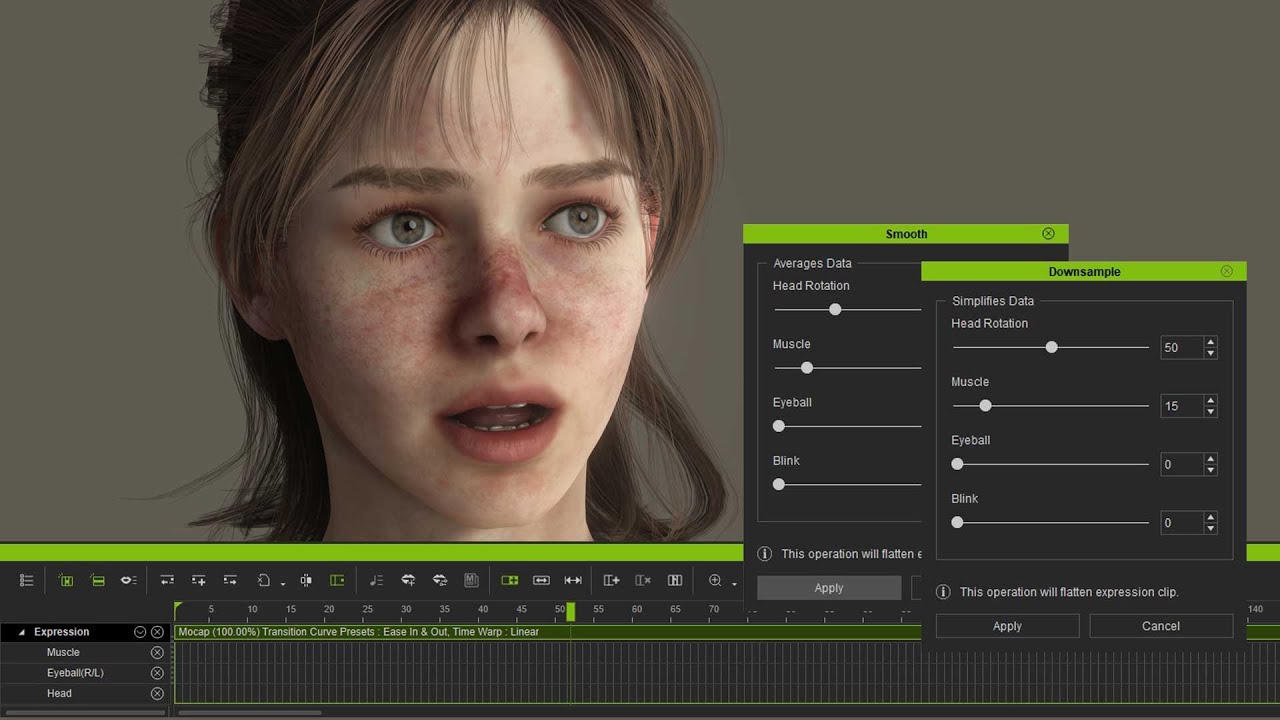

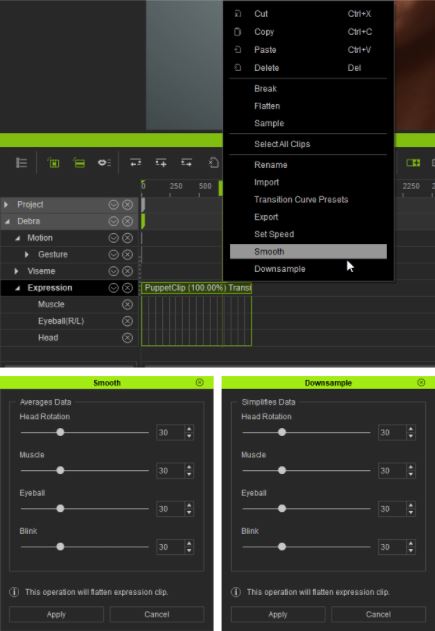

- 6. Post Smooth (Timeline - Expression Track - Clip)

- This feature can effectively fix mo-cap judder, snappy head rotation, and any eyelid, eyeball, or muscle twitches.

- Smooth recorded data on clips using either the Smoothing (moving average) or Down-sampling (key reduction) method.

(see video)
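The two post-smooth methods named above can be sketched on a plain list of per-frame values. This is a generic illustration of a moving average and of key reduction with linear interpolation, not the exact algorithm used on timeline clips; the window and step sizes are arbitrary.

```python
def moving_average(samples: list, window: int = 3) -> list:
    """Replace each sample with the mean of its neighbours (window shrinks at the ends)."""
    half = window // 2
    out = []
    for i in range(len(samples)):
        lo, hi = max(0, i - half), min(len(samples), i + half + 1)
        out.append(sum(samples[lo:hi]) / (hi - lo))
    return out


def downsample(samples: list, step: int = 2) -> list:
    """Keep every step-th key (and the last one), interpolating linearly in between."""
    if len(samples) < 2:
        return list(samples)
    kept = list(range(0, len(samples), step))
    if kept[-1] != len(samples) - 1:
        kept.append(len(samples) - 1)
    out = list(samples)
    for a, b in zip(kept, kept[1:]):
        for i in range(a + 1, b):
            t = (i - a) / (b - a)
            out[i] = samples[a] + t * (samples[b] - samples[a])
    return out


twitchy = [0, 0, 9, 0, 0]  # a single-frame twitch
print(moving_average(twitchy))
print(downsample([0, 5, 2, 5, 4]))
```

The moving average spreads a spike over its neighbours, while key reduction leaves the kept keys exactly where they were, so the timing of the motion is preserved.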

Known Issues:

- If the update to ExpressionPlus was declined for a CC3+ character, the mo-cap result will not be shown, and you will need to switch back to Default in the Mapping panel.

- Using two different Full Body Profiles (Neuron or Rokoko) on the same character, such as applying profile A to the body and profile B to the hands, will cause the application to crash.


Other Enhancement and Updates for Motion LIVE

The following information is useful for both Live Face and Faceware.

In order to familiarize everyone with the new Motion LIVE user interface, we have provided the following information:

- Auto Blink

A new Auto-Blink option can be conveniently accessed right inside the Facial Mocap panel to prevent unwanted eyelid movements when recording new expression clips. The setting is stored with the character upon save and is linked to the Auto-Blink setting inside the Modify panel.

- Two types of Real-time Smooth (clip smooth)

- Down-sampling: Keeps the tempo (or timing) of the movement, such as neck rotation.

- Smooth: Gives an averaged (curved) smooth result.

- Record mode introduction video:

- Tips for Tracking Data Inspector U/I

1. The control panel is now resizable and color-coded, making it easy for users to navigate.


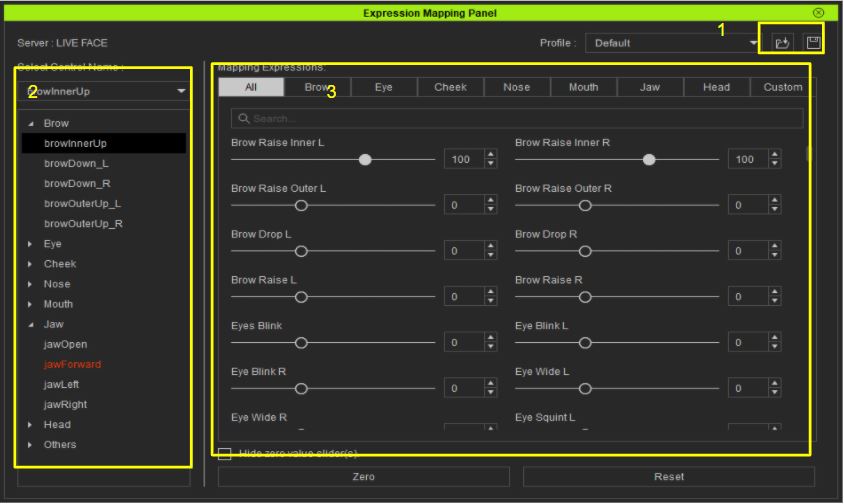

- You can modify the Expression Mapping Panel to only show the mapped sliders by clicking on the All tab button and activating the Hide zero value slider(s) checkbox.

- The window can now be resized.

- Introduction to Expression Mapping Panel User Interface

- Section 1: Profile settings give quick access to the official default mapping settings. The additional ARKit 1:1 preset is available for Live Face; otherwise only the Default setting is available. >> (learn more about ARKit 1:1 Mapping)

- Section 2: shows the current server's control names; the list is separated into groups for faster perusal. If a control name is marked in red, the relevant expression data is missing for the current character.

- Section 3: displays all of the applied expressions on the current character.

- We will release an update with a greater number of classifications for more efficient operation.


Live Face APP Related Update

App Update

Make sure the app is updated to version 1.0.8 before using ARKit 1:1. >>(Update now)

Save/load JSON error-proofing

An error message will appear when an incompatible or corrupt file is loaded.
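As an illustration of this kind of check, the sketch below separates a corrupt file (not valid JSON) from an incompatible one (valid JSON that lacks expected fields). The field names in `REQUIRED_KEYS`, the `ProfileLoadError` type, and the messages are hypothetical; the actual file layout is not described here.

```python
import json


class ProfileLoadError(Exception):
    """Raised when a saved file cannot be used."""


# Hypothetical field names; the real file format is not documented in this post.
REQUIRED_KEYS = {"version", "mapping"}


def load_profile(text: str) -> dict:
    """Parse saved settings, rejecting corrupt or incompatible content."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ProfileLoadError(f"Corrupt file: {exc.msg}") from exc
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        raise ProfileLoadError("Incompatible file: expected fields are missing")
    return data


try:
    load_profile('{"version": 1')  # truncated on purpose
except ProfileLoadError as err:
    print(err)
```

Raising a dedicated error lets the user interface show a clear message instead of failing silently or loading partial data.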

Improved accuracy

Data streamed from the iPhone has increased in precision from 2 to 5 decimal places, for higher fidelity and an unhampered connection.

ARKit 1:1 Mapping Profile

As long as the server is set to Live Face and the character generation is CC3+, the ARKit 1:1 profile will be selectable.

Note: if the CC3+ character lacks ExPlus data, then an update prompt will appear.

Automatic Porting

When the port number is left blank, the default port for the connection is filled in automatically (currently only available for LIVE FACE).


Other Character Creator related Updates

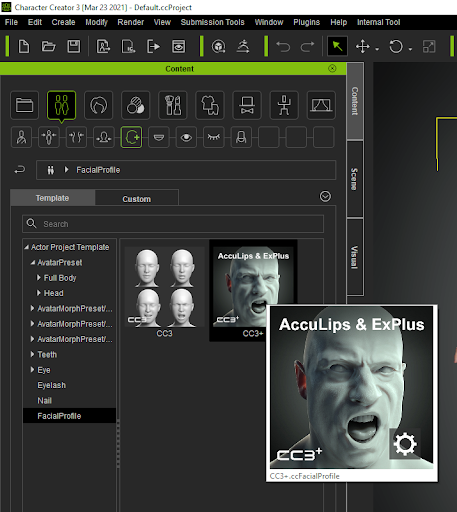

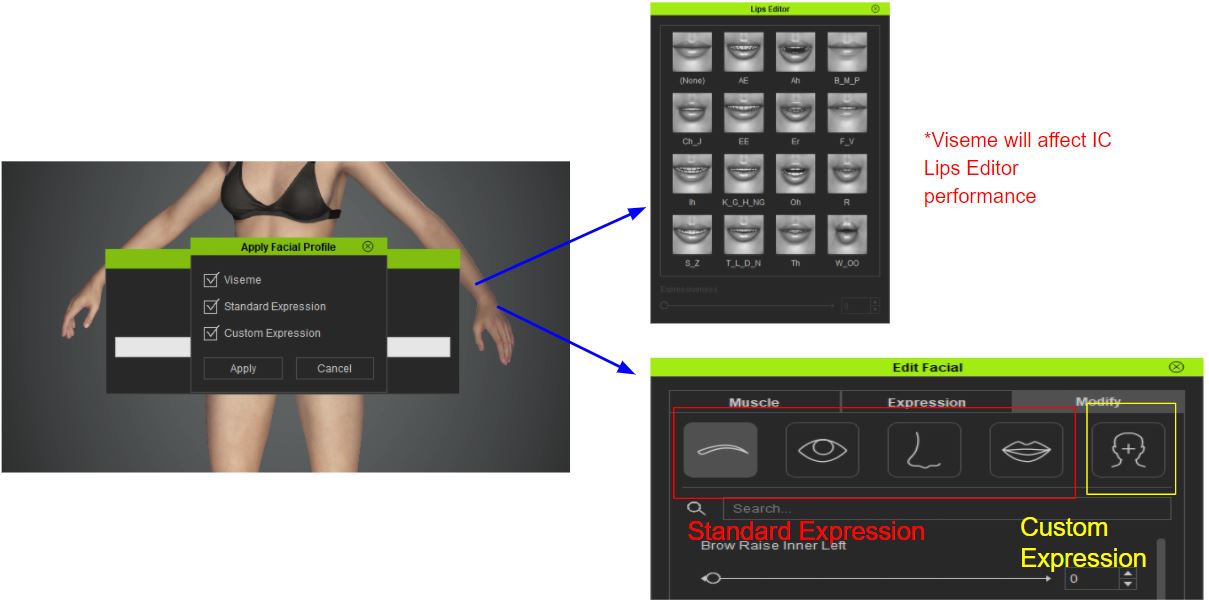

- CC - ccFacialProfile

The additional FacialProfile format saves character expression and viseme blend-shapes (it cannot be applied across character generations). With version 7.9, one can easily apply ExPlus data and update AccuLips-compatible viseme blend-shapes on CC3+ characters.

- CC - ccFacialProfile

Three modes will be available upon application of a facial profile.
