|

Author

|

Message

|

|

Susan (RL)


Posted Last Year

|

|

Group: Administrators

Last Active: Last Year

Posts: 89,

Visits: 2.8K

|

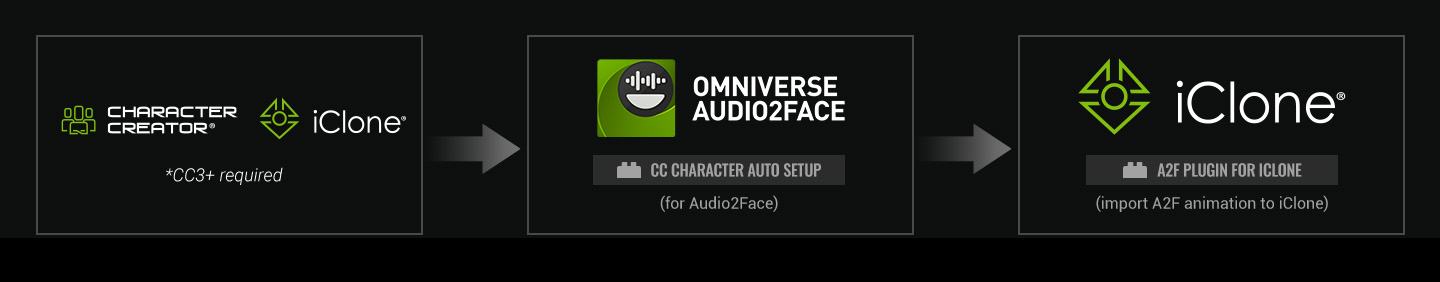

Seamless Solution leveraging iClone, Character Creator, and Audio2Face

Reallusion is thrilled to announce the seamless integration between Character Creator, iClone, and Audio2Face. This robust connection, empowered by NVIDIA’s AI animation technology, revolutionizes multi-lingual facial lip-sync animation production. Not only does this integration bolster NVIDIA’s Audio2Face with a versatile cross-application character system, it also enhances facial editing capabilities, enabling users to export character animations to leading 3D tools and engines like Blender, Unreal Engine, Unity, and Omniverse.

NON-LINEAR AI ANIMATION GENERATED BY AUDIO2FACE

Lip-Sync Animation and Expressions Straight from Audio

As an AI-powered application, NVIDIA Audio2Face (A2F) produces expressive facial animations solely from audio input. In addition to generating natural lip-sync animations for multilingual dialogue, the latest standalone release of Audio2Face also supports facial expressions, featuring slider controls and a keyframe editor.

Multi-Language Lip-Sync and Singing Animation

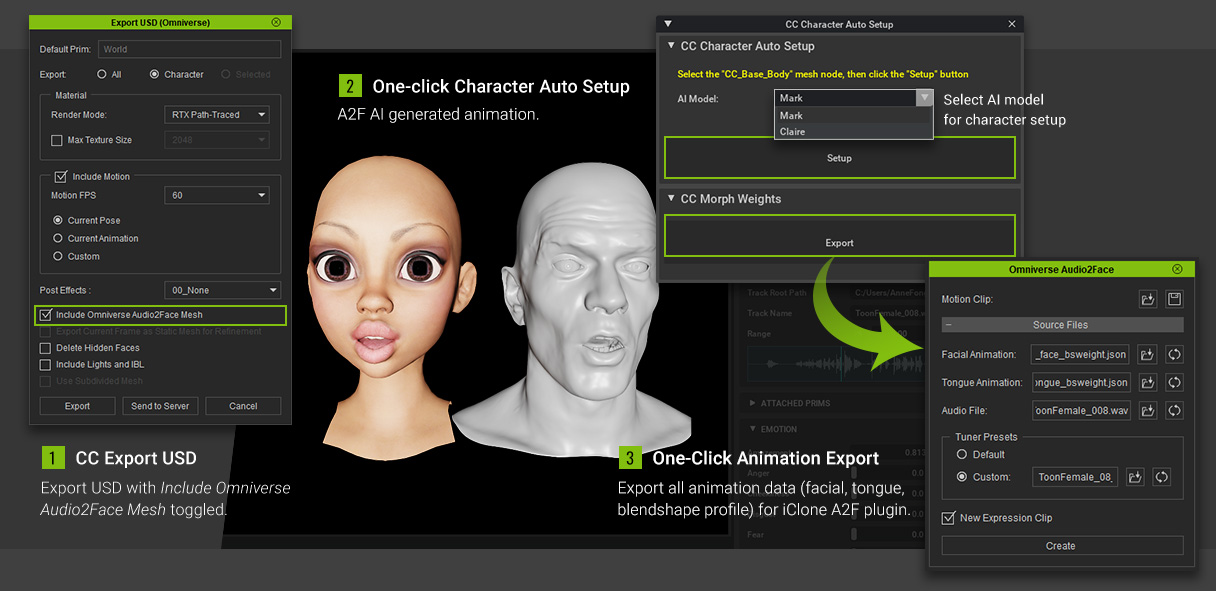

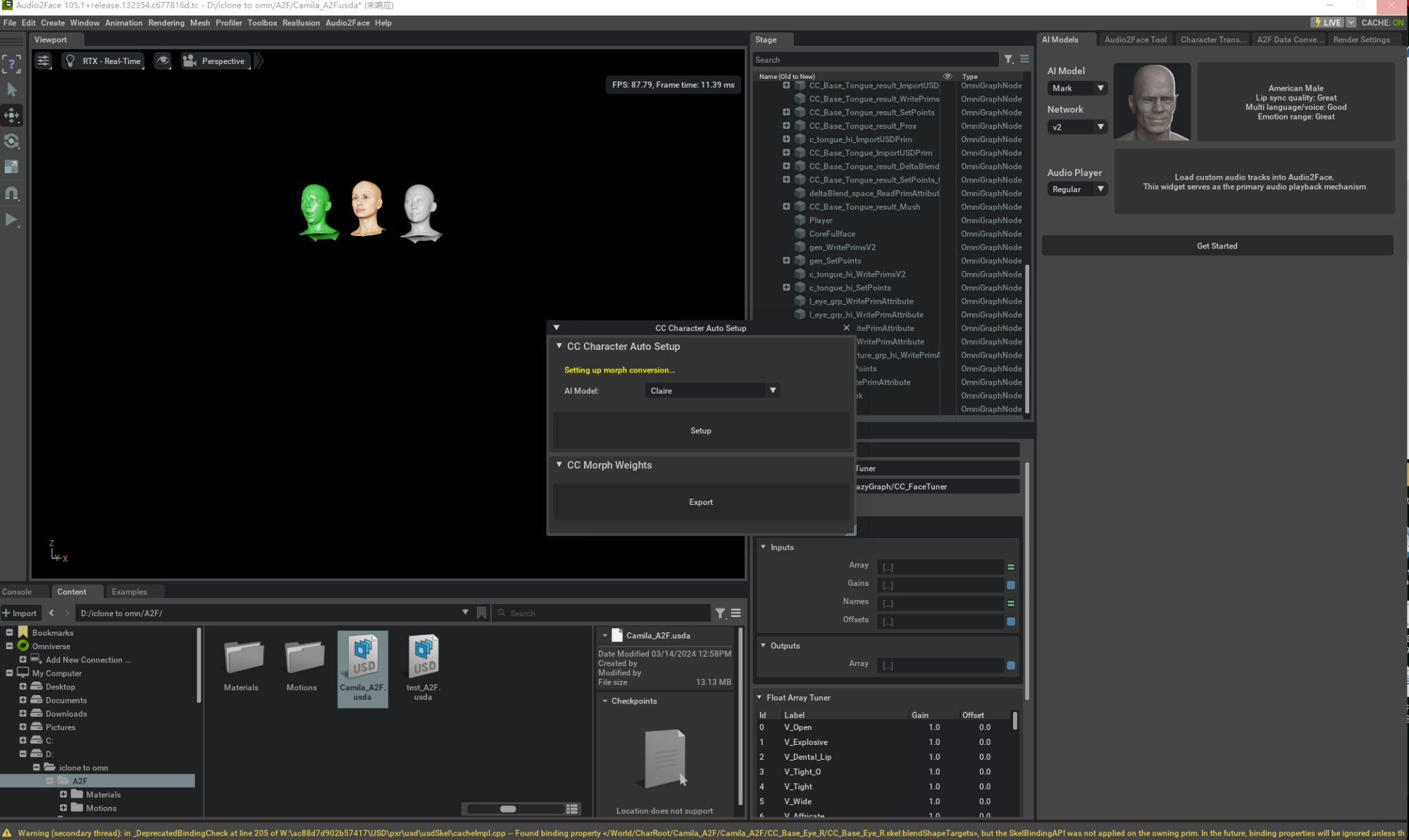

Unlike the majority of English-centric lip-sync solutions, Audio2Face stands out with its exceptional ability to generate animation from any language, including songs and gibberish. Besides the standard AI model Mark, users have access to Clair, a new deep-learning model tailored for female characters and proficient in Asian languages. Clair's friendly complexion is well-suited to customer interaction.

SEAMLESS CC - A2F - ICLONE INTEGRATION

Two complimentary plugins enable an automated workflow. With just a single click, configure a CC character in NVIDIA Audio2Face, animate it in real-time alongside an imported audio track, and seamlessly transfer the talking animation back to iClone for additional refinement before exporting it to 3D tools and game engines.

One-Click CC Character Setup in Audio2Face

The CC Character Auto Setup plugin for Audio2Face is the result of a collaboration between NVIDIA and Reallusion, condensing the manual 18-step process into a single step. By importing a CC character and choosing a training model (Mark or Clair), artists can instantly see lifelike talking animations synchronized with audio files. Experiment with motion sliders and automatic expressions, and even set keyframes. The finalized animations can then be sent to iClone for additional refinement.

Full-Spectrum Animation Refinement using iClone

The free NVIDIA Audio2Face plugin for iClone is tailored to receive animation data from Audio2Face. In addition to importing animations, it enhances the liveliness of facial features, resulting in a superior cut suitable for final production.

Facial Adjustment by Parts

Animations can be tweaked via a dynamic interface. Adjust parameters such as expression strengths and head movements, or add darting eyes to enliven the performance. Enlarge the jaw-open range to enhance emotional tension, and fine-tune the position of the tongue to mimic precise enunciation.

Smoothness Enhancement

Generative AI animation is susceptible to noise, particularly when audio files are captured by low-fidelity devices or within unfavorable environments. The Reallusion Audio2Face integration circumvents these limitations by deploying a highly refined noise filter to eliminate jitters and achieve optimal results despite poor audio quality.

RAISING THE BAR

After obtaining a satisfactory animation from Audio2Face, a finishing touch becomes necessary, particularly when faced with emotional shifts or when emphasizing specific mouth shapes at varying levels of dialogue. iClone empowers facial editing, allowing for refined lip sync, the addition of natural expressions, and the incorporation of head movement sourced from mocap equipment.

The integration of Character Creator, iClone, and Audio2Face marks a significant milestone in AI-driven animation technology, offering creators unprecedented source-audio flexibility and efficiency in their production workflows. The Character Creator Auto Setup plugin and iClone plugin are now available as free downloads from Reallusion, empowering creators to streamline their animation pipelines and unleash their creative potential.
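As a rough illustration of what jitter smoothing on a facial animation curve can look like, here is a minimal sketch using a centered moving average. This is not Reallusion's noise filter, whose implementation is not described here; the function name `smooth_curve`, the `window` parameter, and the sample "jaw open" values are all hypothetical and chosen only for the example.

```python
from typing import List


def smooth_curve(weights: List[float], window: int = 5) -> List[float]:
    """Smooth one per-frame weight curve with a centered moving average.

    Assumption: `weights` holds one value per animation frame for a single
    facial control (for example, a jaw-open morph). The window shrinks at
    the start and end of the clip so the output has the same length.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    smoothed = []
    for i in range(len(weights)):
        start = max(0, i - half)
        end = min(len(weights), i + half + 1)
        smoothed.append(sum(weights[start:end]) / (end - start))
    return smoothed


# Hypothetical jaw-open curve with a single-frame jitter spike.
jaw_open = [0.0, 0.0, 0.9, 0.0, 0.0]
print(smooth_curve(jaw_open, window=3))
```

A wider window removes more jitter but also softens fast, intentional mouth shapes, so any real filter has to balance the two.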

To learn more about iClone A2F Plug-in, please visit:

|

|

|

|

|

rosuckmedia


Posted Last Year

|

|

Group: Forum Members

Last Active: 7 Months Ago

Posts: 3.2K,

Visits: 4.8K

|

Hi Susan, wow, that's an excellent job. 👍👍👍 Thanks to Reallusion and Nvidia. I'll try it out today. Greetings, Robert

|

|

|

|

|

K_Digital


Posted Last Year

|

|

Group: Forum Members

Last Active: 2 Months Ago

Posts: 327,

Visits: 1.4K

|

Nice, but it only works with "RTX" etc.

|

|

|

|

|

MakeRealVR


Posted Last Year

|

|

Group: Forum Members

Last Active: 11 Months Ago

Posts: 2,

Visits: 124

|

Is there a demo project of Audio2Face being used with iClone and Unity? I'm trying to figure out the workflow.

|

|

|

|

|

Sophus

|

|

|

Group: Forum Members

Last Active: 4 days ago

Posts: 230,

Visits: 2.6K

|

Yes, Nvidia prevents other companies from using its AI technology. They don't allow anyone to write software that makes CUDA work on other hardware, so it won't work with Intel or AMD. There is basically no competition.

|

|

|

|

|

Leodegrance


Posted Last Year

|

|

Group: Forum Members

Last Active: Yesterday

Posts: 72,

Visits: 3.7K

|

Just started testing this plug-in out. Looks promising. I remember over a year ago having to set up the targeting manually, which was a total pain. Now, porting the character from CC4/iClone is just one click after selecting the A2F model. I seem to recall though, that Audio2Face has a batch function to generate multiple audio files one after the other. But, in using the plugin to send the json morphs back to iClone, looks like it has to be done one file at a time. Or, am I missing something?

|

|

|

|

|

risto_986282


Posted Last Year

|

|

Group: Forum Members

Last Active: Last Year

Posts: 6,

Visits: 81

|

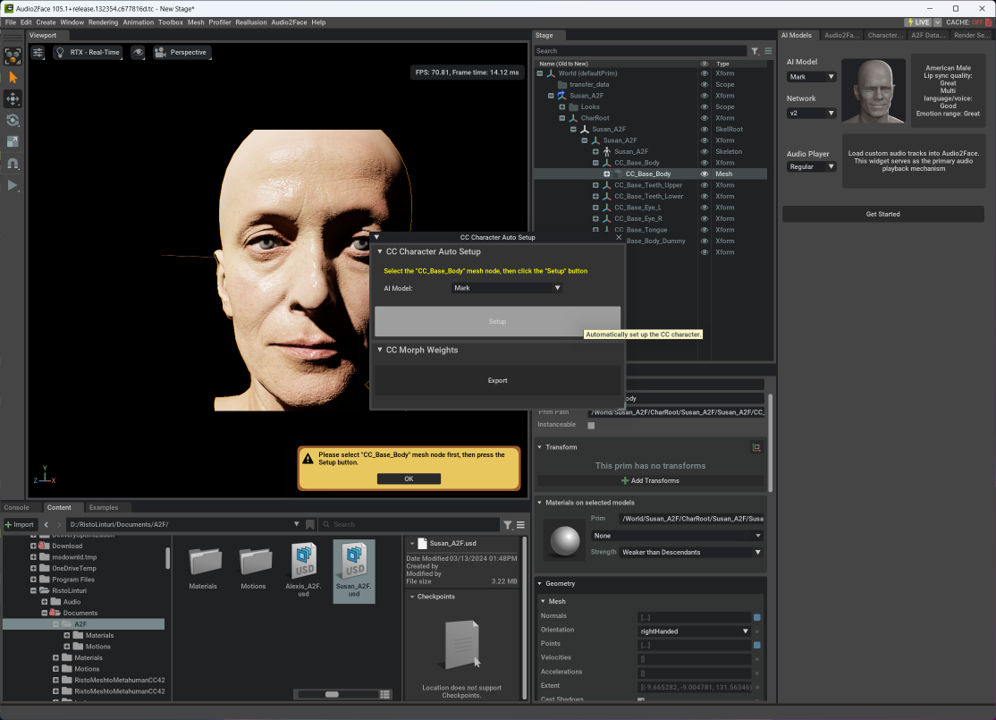

I am happy about the new, simpler workflow, but for some reason I cannot get it to work. I could work with the previous one, but when I activate the auto setup it claims I should select the CC_Base_Body mesh node. I have done so. I have also tried several characters and reinstalled, but I keep getting the same fault. I would be happy to hear some ideas.

EDIT: I got it solved. For me it required using the right mouse button and selecting Open when opening the USD file. Opening it by dragging it or double-clicking always caused this problem, with several different characters.

|

|

|

|

|

risto_986282


Posted Last Year

|

|

Group: Forum Members

Last Active: Last Year

Posts: 6,

Visits: 81

|

I got the auto setup working. The only difference is that instead of dragging the A2F.usd file or double-clicking it, I use the right mouse button and select Open from the menu to open the file. Then it works. I wonder if the default is wrong or what happens, but I have repeated this with several files.

|

|

|

|

|

Joanne (RL)


Posted Last Year

|

|

Group: Administrators

Last Active: Last Month

Posts: 300,

Visits: 4.9K

|

Leodegrance (3/13/2024)

Just started testing this plug-in out. Looks promising. I remember over a year ago having to set up the targeting manually, which was a total pain. Now, porting the character from CC4/iClone is just one click after selecting the A2F model. I seem to recall though, that Audio2Face has a batch function to generate multiple audio files one after the other. But, in using the plugin to send the json morphs back to iClone, looks like it has to be done one file at a time. Or, am I missing something?

Currently, the A2F plugin does not support batch loading the JSON files back into iClone. I'll pass this suggestion to the development team. Thank you~

|

|

|

|

|

liulifeng


Posted Last Year

|

|

Group: Forum Members

Last Active: Last Year

Posts: 1,

Visits: 8

|

Does anyone know why the software crashes at this stage?

|

|

|

|