|

Eric C (RL)

|

Posted 3 Years Ago

|

|

|

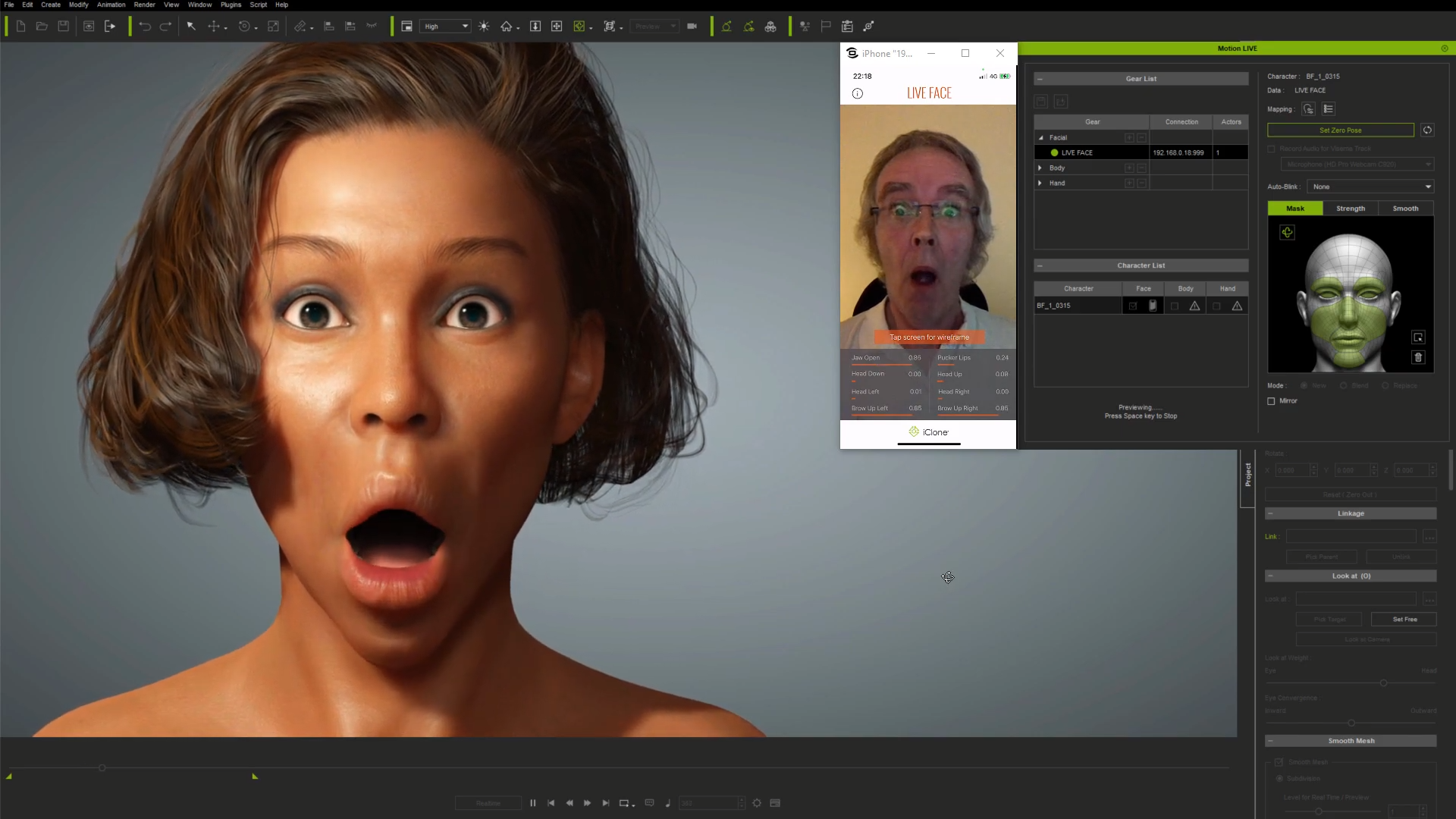

What's new for Motion LIVE 1.1 and LiveFace Profile 1.05

Reallusion's latest facial mo-cap tools go further, addressing core issues in the facial mo-cap pipeline so you can produce better animation. From all-new ARKit expressions and easily adjustable raw mo-cap data and re-targeting, to multi-track facial recording and essential mo-cap cleanup, we're covering all the bases to provide the most powerful, flexible and user-friendly facial mo-cap approach yet. Read on to find out what we have achieved in this update.

The biggest issue with all live mo-cap is that it's noisy, twitchy and difficult to work with, so smoothing the live data in real time is a major necessity. And if the mo-cap data isn't right, the model's expressions aren't what you want, or the current mo-cap recording isn't quite accurate, you need access to the back-end tools to adjust all of this. Finally, while you can smooth the data in real time from the start, you can also clean up existing recorded clips using proven smoothing methods that can be applied at the push of a button.

Learn more on the iPhone Face Mocap page.

- 1. iPhone LiveFace App Update (High Tracking Precision)

The accuracy of LIVE FACE data streaming has increased from two decimal places to five.

>>> (*Requires updating the LIVE FACE iPhone App to version 1.0.8 (Feb 22, 2021)) / (see video)

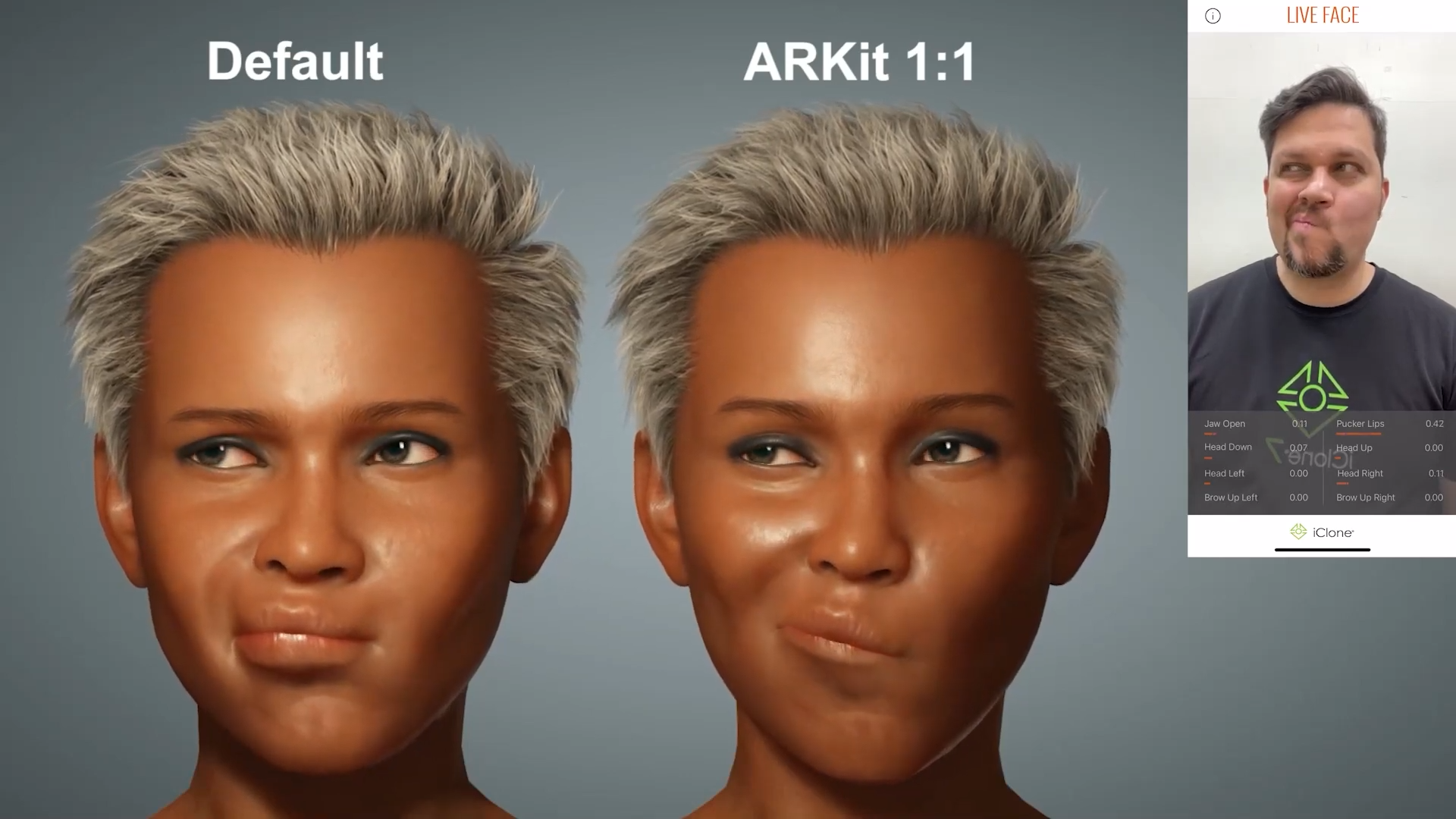
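To give a feel for what the extra decimal places mean, here is a minimal arithmetic sketch. It assumes a tracking value is a weight between 0 and 1 that gets rounded before streaming; the function names are hypothetical and this is not the app's actual streaming code.

```python
def quantize(value: float, places: int) -> float:
    """Round a tracking weight to a fixed number of decimal places."""
    return round(value, places)


def max_rounding_error(places: int) -> float:
    """Worst-case error introduced by rounding to `places` decimals."""
    return 0.5 * 10 ** -places


def levels_in_unit_range(places: int) -> int:
    """Number of distinct values available between 0 and 1 inclusive."""
    return 10 ** places + 1


raw = 0.123456789                  # hypothetical raw sensor value
two_places = quantize(raw, 2)      # old precision
five_places = quantize(raw, 5)     # new precision
```

Under these assumptions, two decimal places leave 101 distinct levels per channel, while five decimal places leave 100001, which is why slow, subtle motions step less visibly.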

- ARKit 1:1 Blendshapes (52+11)

iPhone LiveFace supports ExpressionPlus (ARKit 1:1 mode). The iPhone tracking data is now mapped one-to-one to 52 scan-based blend-shapes on the CC3+ character base, giving a much more expressive facial performance. / (see video)

Additional tongue movement is also included. (Visit "Facial Expression page")

- 2. Tracking Data Inspector: Multiplier added

- The panel is no longer just a data inspector: with "Multiplier", you can boost or weaken the tracking data strength.

- Use this feature to compensate for imbalances in tracking data strength and to accommodate the performer's effective range.

- Added color coding for brows, eyes, jaw, cheek, and tongue, which makes it easy to identify the triggered facial region.

(see video)

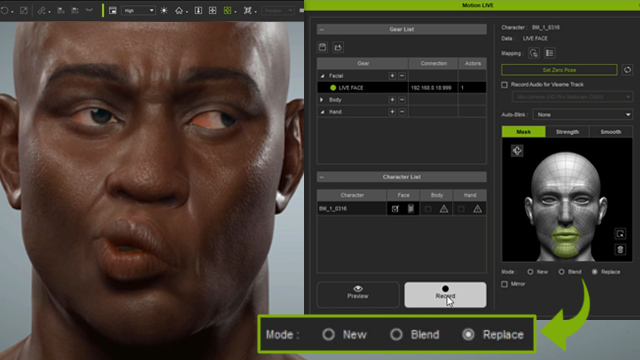
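Conceptually, a multiplier scales each tracked channel before it drives the character. The sketch below is only an illustration: it assumes weights live in the 0 to 1 range and are clamped after scaling, and `apply_multiplier` is a hypothetical helper, not a Motion LIVE function. The channel names are standard ARKit blendshape names.

```python
def apply_multiplier(weights: dict[str, float],
                     multipliers: dict[str, float]) -> dict[str, float]:
    """Scale each tracked channel by its multiplier (default 1.0),
    then clamp the result to the assumed valid range [0, 1]."""
    scaled = {}
    for name, weight in weights.items():
        factor = multipliers.get(name, 1.0)
        scaled[name] = min(1.0, max(0.0, weight * factor))
    return scaled


# A performer whose jaw barely opens: boost jawOpen, leave the brows alone.
frame = {"jawOpen": 0.4, "browInnerUp": 0.5}
boosted = apply_multiplier(frame, {"jawOpen": 2.0})
```

A factor above 1 boosts a weak channel and a factor below 1 tones down an overactive one, which is the balancing role described above.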

- 3. Multi-pass Recording: Capture Mode - New, Add, Replace

- New: Start a new recording, overwriting the original clip.

- Replace: A very powerful feature! You can now use Replace to erase a selected muscle area, or to replace it with a new puppet performance.

- Add: Blend the new puppet performance onto the underlying clip.

(see video)
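The three capture modes can be pictured as different ways of merging a new pass into an existing clip. The following is a rough sketch under stated assumptions: a clip is modelled as a dictionary of per-frame weights, "Add" is treated as a clamped sum, and `merge_pass` is a hypothetical name. The actual blending used by Motion LIVE is not documented here.

```python
from typing import Optional

Clip = dict[str, list[float]]  # channel name -> one weight per frame


def merge_pass(base: Clip, new: Clip, mode: str,
               selected: Optional[set[str]] = None) -> Clip:
    """Merge a new recording pass into an existing clip."""
    if mode == "new":
        # Discard the original clip entirely.
        return {ch: list(vals) for ch, vals in new.items()}

    out = {ch: list(vals) for ch, vals in base.items()}

    if mode == "replace":
        # Overwrite only the selected channels; a selected channel with
        # no new data is zeroed, which models "erase".
        chosen = selected if selected is not None else set(new)
        for ch in chosen:
            length = len(base.get(ch, new.get(ch, [])))
            out[ch] = list(new.get(ch, [0.0] * length))
        return out

    if mode == "add":
        # Layer the new pass on top of the old one (assumed clamped sum).
        for ch, vals in new.items():
            old = out.get(ch, [0.0] * len(vals))
            out[ch] = [min(1.0, max(0.0, a + b)) for a, b in zip(old, vals)]
        return out

    raise ValueError(f"unknown capture mode: {mode}")


base_clip = {"jawOpen": [0.2, 0.4], "browInnerUp": [0.1, 0.1]}
second_pass = {"jawOpen": [0.5, 0.5]}
replaced = merge_pass(base_clip, second_pass, "replace", {"jawOpen"})
```

In this model, Replace touches only the selected region and leaves every other channel of the first pass intact, which is what makes region-by-region recording practical.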

- 4. Real-time Smooth Tab

- Added a new Smooth tab with separate smooth sliders for Head Rotation, Muscle, Eyes, and Eyelid.

- Head Rotation usually needs some smoothing to produce a natural head turn.

(see video)

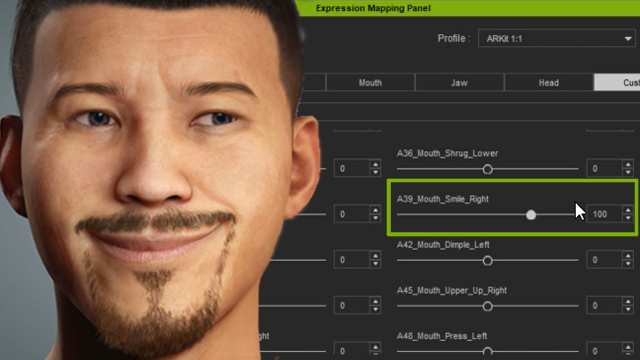

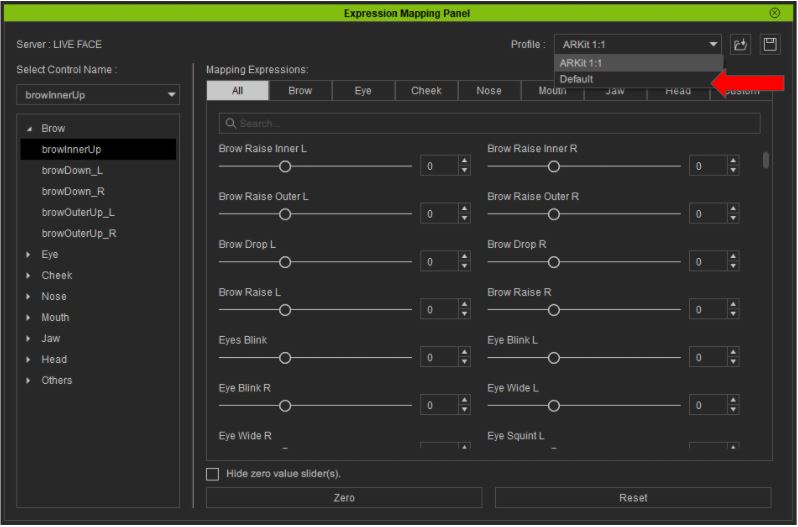
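One common way to smooth a live signal is an exponential moving average, where each group gets its own strength. The sketch below uses that technique purely as an illustration; the post does not state which filter Motion LIVE uses, and the class name, group names, and 0 to 1 strength scale are assumptions.

```python
class RealtimeSmoother:
    """Per-group exponential smoothing for streamed values.

    strength 0.0 passes data through untouched; values closer to 1.0
    smooth more heavily at the cost of added lag.
    """

    def __init__(self, strengths: dict[str, float]):
        self.strengths = strengths
        self.state: dict[str, float] = {}

    def update(self, group: str, value: float) -> float:
        strength = self.strengths.get(group, 0.0)
        previous = self.state.get(group, value)  # first sample seeds the state
        smoothed = strength * previous + (1.0 - strength) * value
        self.state[group] = smoothed
        return smoothed


# Smooth head rotation noticeably, leave the eyes responsive.
smoother = RealtimeSmoother({"head_rotation": 0.5, "eyes": 0.0})
head = [smoother.update("head_rotation", v) for v in (0.0, 10.0, 10.0)]
eyes = [smoother.update("eyes", v) for v in (0.0, 1.0)]
```

The trade-off is the usual one for real-time filters: more smoothing removes jitter but delays the response, which is why separate sliders per region are useful.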

- 5. New Expression Mapping

- Re-targeting (tracking data to blend-shape) is made easy with the new, flexible mapping UI.

- Default and ARKit 1to1 presets provide instant re-targeting for CC3+ avatars.

- Customize expressions easily by blending them.

(see video)

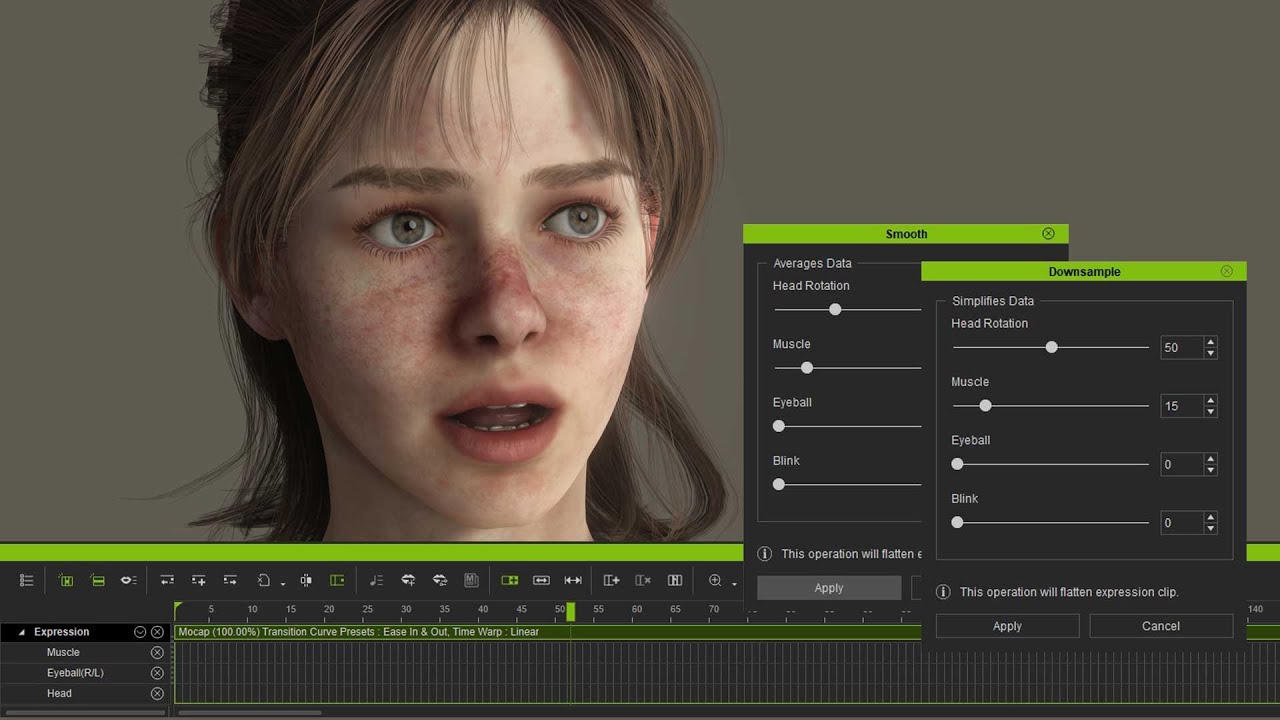
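Re-targeting by blending can be thought of as a weighted sum of tracked channels per target expression. This is a minimal sketch of that idea, not the Expression Mapping implementation: `retarget`, `one_to_one_preset`, and the target name `Smile_Custom` are hypothetical, while the source channel names are standard ARKit blendshape names.

```python
def retarget(tracking: dict[str, float],
             mapping: dict[str, dict[str, float]]) -> dict[str, float]:
    """Drive each target expression from a weighted blend of tracked
    channels, clamped to the assumed valid range [0, 1]."""
    result = {}
    for target, sources in mapping.items():
        total = sum(w * tracking.get(src, 0.0) for src, w in sources.items())
        result[target] = min(1.0, max(0.0, total))
    return result


def one_to_one_preset(channels: list[str]) -> dict[str, dict[str, float]]:
    """An identity mapping: every tracked channel drives the target
    of the same name at full strength."""
    return {name: {name: 1.0} for name in channels}


tracking = {"mouthSmileLeft": 0.6, "mouthSmileRight": 0.2}

# Custom expression built by blending two tracked channels equally.
custom = retarget(tracking, {
    "Smile_Custom": {"mouthSmileLeft": 0.5, "mouthSmileRight": 0.5},
})

# Identity preset: tracked values pass straight through.
direct = retarget(tracking, one_to_one_preset(list(tracking)))
```

Seen this way, a one-to-one preset is just the special case where every blend has a single source with weight 1.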

- 6. Post Smooth (Timeline - Expression Track - Clip)

- This feature can effectively fix mo-cap judder, snappy head rotation, and any eyelid, eyeball, or muscle twitches.

- Smooth recorded data on clips using either Smoothing (moving average) or Down-sampling (key reduction) methods.

(see video)
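The two clean-up methods named above can be sketched on a single recorded channel. This is an illustrative version only: a centered moving average and fixed-step key reduction are assumed, and the function names and parameters are hypothetical rather than taken from the timeline tools.

```python
def moving_average(values: list[float], window: int) -> list[float]:
    """Centered moving average; the window shrinks at the clip edges."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def downsample_keys(values: list[float], step: int) -> list[tuple[int, float]]:
    """Keep every `step`-th frame as a key, always keeping the last frame."""
    if not values:
        return []
    frames = list(range(0, len(values), step))
    if frames[-1] != len(values) - 1:
        frames.append(len(values) - 1)
    return [(frame, values[frame]) for frame in frames]


# A single-frame twitch in an otherwise still eyelid channel.
twitchy = [0.0, 0.0, 3.0, 0.0, 0.0]
calmed = moving_average(twitchy, window=3)

# Reduce a dense recording to sparser keys.
keys = downsample_keys([0.0, 1.0, 2.0, 3.0, 4.0, 5.0], step=2)
```

Averaging spreads a spike across its neighbours, while key reduction drops frames and lets interpolation fill the gaps, so the two methods suit different kinds of noise.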

Known Issues:

- If the update to ExpressionPlus was declined for a CC3+ character, the mocap result will not be shown; switch back to Default in the Mapping panel.

- Using two different Full Body Profiles (Neuron or Rokoko) on the same character, for example applying profile A to the body and profile B to the hands, will cause the application to crash.

|