|

By sonic7 - 2 Years Ago

|

Not sure if this has been mentioned on the forum, but in case it hasn't - check out what this guy is doing ...

Here's just one of his videos:

|

|

By Kelleytoons - 2 Years Ago

|

Actually RL kind of "started" with this very process - CrazyTalk was kind of the cornerstone of this, using still images and animating them, decades ago (RL kind of had a twin path, with CT and iClone, but unless I'm misremembering, CT came first).

So - old tech, nothing new here (perhaps just done a lot more polished, but it was kind of amazing what CT could do back before 3D animation was even a possibility).

|

|

By sonic7 - 2 Years Ago

|

Yeah - when I see 'young' guys (ie: sharp minds) jumping in and out of various software packages like this it both fascinates AND unsettles me.

Fascination because of the growing options and pathways becoming available to create visual stories. But unsettling (for me) because it makes me question my current approach. And I've always 'preferred' using a single software (in order to get to know it well) - even though some would say that's unrealistic. I do use Blender 'a bit' to supplement iClone. It's natural to want to use the 'easiest' approach given how involved film making is.

|

|

By AutoDidact - 2 Years Ago

|

|

Companies like Daz3D, whose main focus is still-image rendering, will likely be put out of business by AI art generation within five years. Some of their user base is already publicly announcing their migration. It will take a bit longer for usable animation, unlimited camera angles etc., but people will soon have an alternative to buying into expensive software and hardware ecosystems like iClone as well.

|

|

By Sophus - 2 Years Ago

|

Some days ago Corridor Crew released a short film where they filmed themselves before a green screen and then used Stable Diffusion to change the art style of the recordings into an anime.

The impressive part is that they found a way to get somewhat stable image generation, at least for this style. They used Dreambooth to train a model of their faces, then stabilised the noise seed during image creation and later used some anti-flicker filters in After Effects.
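As a toy illustration of why holding the noise seed steady helps, here is a minimal Python sketch. It uses only the standard `random` module rather than Stable Diffusion, and `frame_noise` is a made-up helper name, so treat it as an analogy for the idea and not as the Corridor Crew's actual pipeline.

```python
import random

def frame_noise(seed, count=4):
    """Return `count` pseudo-random noise values for one frame."""
    rng = random.Random(seed)  # private generator: the seed fully determines the output
    return [rng.random() for _ in range(count)]

# Same seed for every frame: each frame starts from identical noise.
fixed = [frame_noise(seed=1234) for _ in range(3)]

# A different seed per frame: the starting noise changes every time.
varying = [frame_noise(seed=frame) for frame in range(3)]

print(all(f == fixed[0] for f in fixed))      # True
print(all(v == varying[0] for v in varying))  # False
```

With identical starting noise, the only thing that changes between frames is the input image, which is one plausible reason the results flicker less.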

The backgrounds were generated in Stable Diffusion as well. To get the church backgrounds consistent looking they used an Unreal Engine asset as an input image.

So, it's not only image based but movie based. Pretty impressive stuff, but I'm not sure this will work with more realistic looking styles.

But you could also use DAZ or Reallusion characters to train the model and transfer the style instead of real human actors.

https://www.youtube.com/watch?v=GVT3WUa-48Y

|

|

By sonic7 - 2 Years Ago

|

Although things are heading in this AI direction, it still has a way to go before it could supplant the use of *real* 3D Avatars, Sets and Props - though things seem to be accelerating fast. For now, I guess *some* aspects of these newer approaches could be used to *supplement* the current 3D method of film making. I'm thinking mainly of the depth map approach this guy demonstrated. For example, in shots that have only a small camera movement (like establishing shots / 'one-off' shots). It might save on both the cost and design-time of using actual sets/props, but could still be lit as actual 3D mesh. The *design* could instead be done in 2D then displaced into 3D using the depth map approach. I'm wondering, after using the "displacement modifier" (in Blender) to create the 3D look, whether that can be exported out of Blender as actual 3D mesh for importing into iClone? Someone might know about this.

Another way of supplementing iClone film production might be to use the "D-ID animation software" mentioned for creating background *extras* in scenes where the lower resolution wouldn't matter since the characters would occupy only a fraction of the frame.

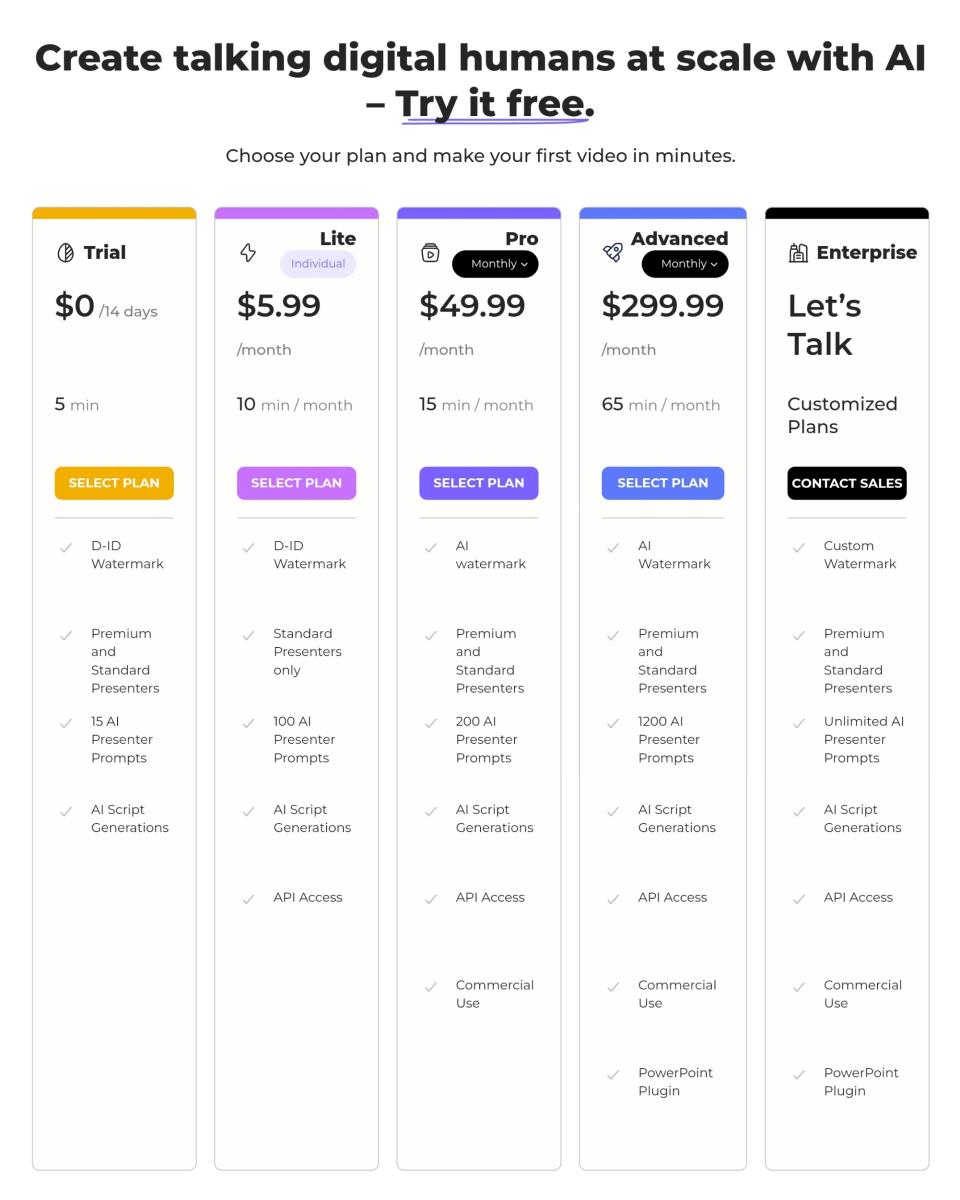

Edit: - Just looked up "D-ID" and, (unfortunately) the *cost* for the small time solo filmmaker could well be off-putting.

($50/month if wanting 'commercial' use, and that's for just 15 minutes).

|

|

By AutoDidact - 2 Years Ago

|

|

Yeah, that D-ID service is clearly targeted toward corporate clients. I quickly burned through my 14 day trial and made a few videos where I generated an AI character on another site and used it with D-ID for easy talking head/commentary clips. I have CTA4, and I wish it were this easy to make talking heads from any front-facing image without all of the manual setup required.

|

|

By Kelleytoons - 2 Years Ago

|

I saw the Corridor Crew video where they processed their live action stuff and I got really excited because I wanted to do the same with iClone rendered footage. But - WAY too much work (even though they talk about "democratizing" the animation process, it still requires quite a team and quite expensive equipment and software). The Stable Diffusion app they used, DreamStudio, is "okay" - I've had fun playing with it but it's nowhere near ready to handle video (again, they did it with $$$ - no one man studio could possibly do the same). Plus - the process they used was for a VERY specific animation style with hardly any background movement (still images animated only). I think we are DECADES away from being able to take our rendered stuff and actually process it in a way that makes it any better than the so-so filters any program offers today (I won't be alive, for sure).

So - it's interesting. Even this guy's "animation" is VERY limited - sort of panning around the talking head. I can't see much practical use except as a one-off. We already have MUCH better tools and ability to generate MUCH better looks inside of iClone itself, with or without post processing. And doing it even easier (assuming you have the necessary mocap tools - and, yes, they do cost $$ but if this old man can afford them then almost anyone can).

|

|

By sonic7 - 2 Years Ago

|

Yeah - Personally, the animation using D-ID doesn't overly grab me, but I'm wondering about the Blender 3D displacement approach using depth maps. - I checked, and apparently it IS possible to convert the Blender projected displacements into actual 3D mesh for export. I see two advantages and one disadvantage.

+ Greater creative freedom in generating *any* imaginable background using 2D artwork as a starting point.

+ Cost savings on 3D asset purchases.

- But it involves multiple steps: 1) Create the artwork, 2) Generate the depth maps, 3) Lay out in Blender and export mesh (assuming iClone usage).
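To make the depth-map idea concrete, here is a minimal Python sketch of what "displacing an image into mesh" amounts to. It is not Blender's displacement modifier; `depth_to_obj` is a hypothetical helper that treats each pixel of a small depth grid as a vertex and writes the result as plain OBJ text, on the assumption that 0.0 means far and 1.0 means near.

```python
def depth_to_obj(depth, scale=1.0):
    """Turn a 2D grid of depth values (0.0 = far, 1.0 = near) into OBJ text."""
    rows, cols = len(depth), len(depth[0])
    lines = []
    # One vertex per pixel: x/y come from the grid position, z from the depth value.
    for r in range(rows):
        for c in range(cols):
            lines.append(f"v {c} {rows - 1 - r} {depth[r][c] * scale}")
    # Two triangles per grid cell; OBJ vertex indices start at 1.
    for r in range(rows - 1):
        for c in range(cols - 1):
            a = r * cols + c + 1   # top-left corner of the cell
            b = a + 1              # top-right
            d = a + cols           # bottom-left
            e = d + 1              # bottom-right
            lines.append(f"f {a} {d} {b}")
            lines.append(f"f {b} {d} {e}")
    return "\n".join(lines) + "\n"

# A tiny 3x3 "depth map" with a bump in the middle.
tiny_depth = [
    [0.0, 0.2, 0.0],
    [0.2, 1.0, 0.2],
    [0.0, 0.2, 0.0],
]
obj_text = depth_to_obj(tiny_depth, scale=2.0)
print(obj_text)
```

A real depth map would have one value per image pixel, so the mesh gets heavy quickly; downsampling the depth map first is the obvious way to keep the vertex count manageable.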

I guess you could get 'similar' results using a couple of iClone's planes to force a "shift in perspective" during a camera move.

|

|

By AutoDidact - 2 Years Ago

|

"I think we are DECADES away from being able to take our rendered stuff and actually process it in a way that makes it any better than the so-so filters"

Daz recently updated their EULA to put restrictions on using Daz assets in AI generators. This is a clear indication that the Daz/Poser and perhaps iClone community does NOT seem to understand that this technology is creating a separate standalone ecosystem that won't depend on users buying and owning 3D assets and expensive software, then rendering them to be “filtered” or “converted” after the fact. They already have the ability to create consistent characters from nothing, or from custom-trained, self-inserted style images. Now they can re-pose them for consistent sequential art such as graphic novels and comics; full animation frames are not that far off.

Yes, ATM you still need a beefy GPU PC for home-spun custom AI data training, but it will only be a short time before all the tedious labor that the Corridor Crew did is packaged into a back-end subscription service for laypeople, hobbyists and pros with budgets alike. I predict the current business models of companies like Daz & Poser will be the first to die, and the animation tech will threaten Reallusion sooner rather than later.

|

|

By Kelleytoons - 2 Years Ago

|

Nope, I don't believe this at all. Again, I've yet to see any video made by ANYBODY that convinces me AI animation generation is a replacement for things like iClone. But I guess it's what you want to make (I don't want to make the crap they show).

And Sonic - you can generate 3D assets much easier than you are proposing by using the freeware 3D scanning software available. I've made a ton of things - problem is that I don't want to work in a "realistic" style so they have limited use for me, but they do have some real value in our work. I'll try and upload some examples (maybe even today, in a separate thread).

FAR easier than trying to export stuff to Blender and then making 3D models out of displacement maps.

|

|

By AutoDidact - 2 Years Ago

|

"Nope, I don't believe this at all. Again, I've yet to see any video made by ANYBODY that convinces me AI animation generation is a replacement for things like iClone."

As of March 5, 2023, no... a complete AI CG animation replacement for Maya, Blender or iClone does not exist. But the AI tech is learning extremely fast, and it won't take anywhere near as long as the old methods did to get us from that horrible CG monkey in “Lost in Space” (1998) to Thanos in "Endgame" (2019). Believe whatever you like, but anyone who thinks the animation side of this tech has peaked with that janky “Anime test” from the Corridor Crew is just not paying attention.

|

|

By Kelleytoons - 2 Years Ago

|

|

I promise you I'm right - but I think I'll at least live to say "I told you so." <g>

|

|

By animagic - 2 Years Ago

|

For me the most interesting application is using iClone to render and then have some post-processing to create a specific look. For the moment I do that with Photoshop and After Effects, but I'm always interested in new approaches.

If you are talking about AI-based tools, this is not a trivial task. It requires, for example, recognition of a character in a scene and then keeping track of it. A simple turning of the head or body may mess things up. Then, if there is background of any complexity, it may simply not work properly. The hard part is temporal consistency; how do things work out from frame to frame.

I'm sure AI-based methods will develop further, but right now it seems to have become a quick money maker for the developers, which will put the tools out of reach for most.

Possibly of some interest is a tool that I learnt about via one of the listservs I'm subscribed to.

It's called Studio Artist and has been around since before the recent AI boom. It takes video and then post-processes it in all kinds of styles that can be tailored to one's needs. The latest version has an AI component for rotoscoping the video (I suppose). There is a trial, and when I have some time I'd like to try it out. There is no monthly subscription nonsense; you buy it outright.

|

|

By Kelleytoons - 2 Years Ago

|

That's interesting, Ani, but still not what I'd really like. I DO like what the Corridor Crew did, in theory, but to this trained eye it looks about five years down the road before this is going to be easily available. And even then there will be $$ and a lot of learning to post process things. I do wish we had a "make 2D cartoon" filter but the closest we'll get in my lifetime is the AE kind of approach.

Speaking of which - I did go ahead and (quickly just now) create a tabletop of my wife's shells and nicknacks and 3D scanned it using Scaniverse (freeware) and then brought it into iClone and added Kevin with the canned animation. To me it shows the power of creating your own 3D world fairly easily with almost anything you can find - note that I didn't touch the 3D scan any but it could have been brought into, say, Substance Painter and further enhanced. For folks far more talented than I am, you could build your own tabletop model and easily bring it into your animations (add a few mountains and tree assets and it would be hard to tell - hey, I may do that just now to see).

|

|

By AutoDidact - 2 Years Ago

|

|

I don’t need AI just adding “toon filters” etc. to my 3D renders, as this is already possible (in render) with Blender, without the flickering that was still present in the Corridor Crew's “Anime” test, nor do I have any interest in restyling live footage as they did. I do plan on trying AI generated “Depth” backgrounds with my Blender anime-shaded characters, as this lovely young woman shows here

|

|

By sonic7 - 2 Years Ago

|

@Mike - thank you for your demonstration of Scaniverse. This would be a great solution for creating background 3D scenes of real-world locations as well as actual 3D objects (props). I imagine it's a viable option for pretty much 'anything' real world. (I'd given 'Meshroom' a try in the past (free photogrammetry program executed on your PC) - it was quite good.)

@Autodidact - I (mostly) understood what was being done in the video you posted. It could be used for images both real world (as in the demo with the vanishing street view), OR with probably any artwork (including fictional). It's a lot of effort and I imagine every intended shot would need to be fully envisaged (story-boarded) ahead of time. Compared to the depth map approach it does offer the advantage of being able to "dolly in" as shown in that demo. Realistically, most background settings are either 'static' or have movement that's: wobbling horizontally and vertically (hand-held), fixed on a tripod (pan & tilt), sideways tracking (dolly shots), or rising (crane shots on a jib-arm). I don't see why this method wouldn't work for all such moves, however I'm not sure whether there's enough advantage to warrant all that work flow - different if you're a production company of course.

But I guess even the depth map approach would need to be story boarded ahead of time, and it also requires multiple steps, though it's probably easier than manually adding XYZ geometry. I'm guessing the depth map approach would also work equally well on non-architectural settings ie: nature imagery (hills & trees) - though I'm not 100% sure. Wouldn't it be great if there was a 'one click button' to displace a photo (estimating its depth) and instantly convert it into a fully textured 3D mesh. That would be amazing!

|

|

By AutoDidact - 2 Years Ago

|

|

I foresee a lot of ways that AI will eventually enhance existing 3D workflows. However, the monetized services that are popping up (seemingly on a daily basis) are not really targeting users who are already deeply invested in huge, ponderous 3D content ecosystems like Daz & iClone.

They are clearly targeting lay people who want a packaged solution ready to go. That D-ID service for business & corporate virtual presenters is a highly attractive alternative to buying 3D software and learning about animating rigs with lip sync etc. It is everything CrazyTalk should have become.

|

|

By sonic7 - 2 Years Ago

|

Yes, you're not wrong. AI image generation is already incredible. I've tried a few of the (free) sites and one of the better ones I found was "Cyberpunk Generator API", though there may be better ones that I haven't found.

Here's a couple of the images I was able to get (showing the text I typed in) .....

Interior of small symmetrical alien spaceship, photo realistic, showing streamlined alien controls, very clean lines, window showing stars, precise details, cinematic

(used "style #4")

An alien planet landscape, photo realistic, wide screen, high resolution, cinematic

(used "style #3")

And the image size (if you use their 'enhance' button before export) is 3200 x 1600 pixels, which is fairly decent.

Of course they need a little 'fixing' - especially the spaceship shot, due to strange artifacts, but overall they're fairly stunning shots. You get a lot of bad ones too, but I'd say 1 in 10 is probably a "keeper".

If you don't use the 'enhance' button, they download as 1024 x 512 (resolution used for creating the image), so there's some 'upscaling' used to get the 3200 x 1600 enhanced version as a download.
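For a sense of how much upscaling that 'enhance' step involves, here is a quick Python check of the two sizes quoted above. Nothing here comes from the site itself; it is just the arithmetic on 1024 x 512 versus 3200 x 1600.

```python
base_w, base_h = 1024, 512    # size used when the image is generated
out_w, out_h = 3200, 1600     # size after using the 'enhance' button

scale_x = out_w / base_w      # horizontal enlargement factor
scale_y = out_h / base_h      # vertical enlargement factor
pixel_ratio = (out_w * out_h) / (base_w * base_h)  # how many times more pixels

print(scale_x, scale_y, pixel_ratio)  # 3.125 3.125 9.765625
```

So each side is enlarged a little over 3x, and nearly 90% of the pixels in the enhanced download are invented by the upscaler rather than generated directly.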

..... seems the AI does a decent job with the lighting too - (sorry, another spaceship) .....

I guess at the very least, AI generated images are a great way to get ideas and inspiration - even if you end up building the sets the traditional way with mesh .....

|

|

By Kelleytoons - 2 Years Ago

|

Yeah, but rendering stills is a COMPLETELY different ballgame than animation. Otherwise Photoshop would have been all anyone ever needed for animation.

I think we are at least a decade away from decent AI animation tools - I would love to be wrong but I doubt that I am. You guys will most likely see them (I'll be long gone).

|

|

By AutoDidact - 2 Years Ago

|

|

Those are great images, Steve. I have tried several (all free), but my favorite free one so far is: they have a lot of styles you can apply. I use AI images for all of my custom video thumbnails for YT.

|

|

By sonic7 - 2 Years Ago

|

@Mike .... Yes, I was thinking along the lines of backdrops for scenes. They could be just static, or could be used with added mid-ground and fore-ground props (as mesh) to create depth in a scene. Then there was the other idea of (maybe) using an image with a displacement applied to force some depth, though camera movement would be very limited that way (as per the video at the top of this thread). Another possibility is to split parts of it up into 2 or 3 separated 'planes' (in iClone), which would also create the illusion of depth. I like the idea of using backdrops because of being a quick approach, but I also 'prefer' the flexibility you get with proper background 'mesh' (sets/props) because you can do any camera move you want and you'll always have totally correct perspective. But then that means lots of set building (plus purchase costs). The other thing that concerns me a bit with image backgrounds (of any kind), is getting a believable look with the placement of actors. I guess I lack the confidence in what is after all a 'shamming/cheating' approach because I'm 'old school' and like to go about things as if on a *real* set where all perspective is always accurate and you more or less don't have to give it a thought. But all that aside - at the very least this AI is amazing for inspiration if nothing else. I may well end up still building sets - which will take me an absolute eternity - but everything will be there no matter which camera angles I choose - and that's extra peace of mind. As you know though, I often like to look into alternative ways of doing things.
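The reason 2 or 3 separated planes read as depth is parallax: for the same sideways camera move, nearer planes slide further across the frame than distant ones. Below is a minimal Python sketch using a simple pinhole-camera approximation. The focal length in pixels, the layer distances and the `screen_shift` helper are all made-up illustrative values, not anything taken from iClone.

```python
def screen_shift(camera_move, depth, focal_px=1000.0):
    """On-screen shift in pixels of a plane at `depth` when the camera
    slides sideways by `camera_move` (same units as depth)."""
    return focal_px * camera_move / depth

# Hypothetical layer distances from the camera, in metres.
layers = {"foreground": 2.0, "midground": 10.0, "backdrop": 100.0}

# Slide the camera half a metre sideways and see how far each layer moves.
shifts = {name: screen_shift(0.5, depth) for name, depth in layers.items()}
for name, shift in shifts.items():
    print(f"{name}: {shift:.1f} px")
```

The far backdrop barely moves while the foreground sweeps across the frame, which is the illusion of depth; it also shows why the trick breaks down on large camera moves, since a flat plane never reveals anything new behind itself.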

@Auto .... sorry, just now saw your post .... Yes - I'll check that one out .... thanks .....

Oh - and by the way, I found out that you only get a certain number of 'free' images generated with *Cyberpunk Generator API* (not sure how many, but a couple of hundred at least). After that, it's $5 USD for each 500 images you generate *if* you go with their monthly ($5) plan. Otherwise, if you pay a single 'one-off' $5, you get 100 generated images. So that's their pricing as of March, 2023.
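Worked out per image, using only the two prices quoted above (the plan names in the dictionary are just labels for this sketch):

```python
# (price in USD, images included), as quoted for March 2023.
plans = {
    "monthly": (5.00, 500),
    "one_off": (5.00, 100),
}

cost_per_image = {name: price / images for name, (price, images) in plans.items()}
for name, cost in cost_per_image.items():
    print(f"{name}: ${cost:.2f} per image")
```

That comes to about 1 cent per image on the monthly plan against 5 cents on the one-off payment, and with roughly 1 in 10 being a "keeper" the effective cost per usable image is about ten times those figures.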

|

|

By AutoDidact - 2 Years Ago

|

"@Auto .... sorry, just now saw your post .... Yes - I'll check that one out .... thanks ..... Oh - and by the way, I found out that you only get a certain number of 'free' images generated with *Cyberpunk Generator API* (not sure how many, but a couple of hundred at least). After that, it's $5 USD for each 500 images you generate."

@Steve playground.ai gives you 1000 free images per day (limited resolution though). But I have the Topaz Gigapixel AI upscaler, over on my iMac, to enlarge my AI images.

|

|

By planetstardragon - 2 Years Ago

|

it's all old tech with new tools - hollywood has been faking it like this since the beginning and using clay space ships hanging on fishing string. that's one issue i've always had with the photorealistic rendering mindset, its so focused on getting final results from 1 program, which is very limiting. if you watch the behind the scene videos on youtube, you'd see all the best movies are layered with graphics and matte paintings.

for the voices, I've been amused with this site lately - https://fakeyou.com/ i've been following them since it started, but now they turned pro and have hundreds of tts designed from famous characters. No idea how long that will last though because of legal copyright reasons from using a celebrity voice, but for now it works really well lol

and on the AI - i've been indulging in it greatly lately, namely chatgpt for helping me write articles, scripts, poems, copywrite, songs, we even talk about quantum physics, ai sentience and started an art line called "AI Thug life" lol - it's great and i've completely embraced the new AI trends. all these resources are here, but ultimately it's new tools with the old tech of grabbing anything around you and compositing it to one entertaining story.

This is a blog i started in collaboration with Chatgpt - I'm having a ton of fun with it doing all sorts of things with AI that would make most scientists cringe!! :w00t: https://anirhythm.wordpress.com/ - I call it the intellirhythm blog - "Igniting creativity through the power of AI and technology". - the artworks are also my text to prompt ai art. - check out namely the AI Fight blog post, where the AI wrote the full script of a court battle between good and evil AI. :alien:

cheers, I hope everyone is doing well! :kiss:

|

|

By sonic7 - 2 Years Ago

|

@planetstardragon - yes, I've had a few conversations with 'ChatGPT' and it's fascinating the depth of the responses it can give. It seems to encompass a huge range of topics too. The only thing I wish it had (as of early 2023), is a 'current' knowledge base of the internet, which (for now) is capped at late 2021. I don't know the reasoning behind this - I guess it doesn't really matter because the responses are still essentially up to date. Your topics of interaction with ChatGPT are most interesting - plus they're a real test of the depth of understanding of ChatGPT's knowledge base.

Also - similar to you, I typed a short outline and was surprised at the AI response for a story, though it was often brief and would end abruptly. So I'd break it down into scenes (or even parts of scenes), to get suggestions for those shorter moments. With this approach it's possible to embellish an entire story piece by piece. It reminds me of a very old TV commercial from years ago. Don't know if it ran in your part of the world, but it was for "John West" canned fish (tuna, salmon, etc). And the line at the end of every John West commercial went "It's the fish John West rejects, that makes John West the best". I see the same approach with AI story suggestions - ..... keep (and use) the promising AI suggestions and throw away the rest ....

|

|

By planetstardragon - 2 Years Ago

|

Thank you, I'm happy you enjoyed it. I've been playing with AI language models for several years now - trained my own personal bot for 5 years, i stopped because it got too creepy! the chatgpt is safer because it's not collecting data on me, where as my personal bot did. - the real magic comes out when you correlate topics - like talking about 2 or more unrelated topics but then connecting them through logic and what they have in common, that's when you get the AI to process data metaphorically and the mind games begin lol. :alien:

|

|

By sonic7 - 2 Years Ago

|

@Planetstardragon - That's most interesting - I'll give that a good deal of thought and try out some ideas ...

@Auto - yes, I was just getting into your suggestion of "PlaygroundAI" when I discovered the limitation you mentioned. After your first 50 images, they throttle your 'Quality' & 'Detail' settings to a very low, almost unusable ceiling. Of course you can remove that by buying a $15/month plan, but since I'd only just started to evaluate their AI, I wasn't yet in a position to know whether it would yield usable results ...

|

|

By animagic - 2 Years Ago

|

This is somewhat off topic, but I just came across this, which forms an interesting contrast to the AI approach.

There are still people with patience, and I find that hopeful...:unsure:

|

|

By sonic7 - 2 Years Ago

|

@animagic - yes - tons of patience to create frame by frame - plus knowing how each frame will relate to the next as the animation proceeds. Loads of skill and patience. Well, similar to all those cel transparencies that the early Disney films were created with. But I guess they were pushing boundaries even then since it was the 'new' and only way to create animation. How times have changed. Actually, now that I think of it, iClone does the very same thing - create a pose, a few seconds later on the time-line a second pose, and iClone will automagically fill in all the in-between frames.
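That "fill in the in-between frames" step can be sketched in a few lines of Python. This is plain linear interpolation between two key poses, with made-up joint names and angles; it is only an illustration of the idea, and iClone's own interpolation is assumed to be more sophisticated (curves, easing and so on).

```python
def lerp(a, b, t):
    """Blend from a to b as t goes from 0.0 to 1.0."""
    return a + (b - a) * t

def inbetween(pose_a, pose_b, frames):
    """Return frames + 1 poses running from pose_a to pose_b inclusive."""
    result = []
    for i in range(frames + 1):
        t = i / frames
        result.append({joint: lerp(pose_a[joint], pose_b[joint], t) for joint in pose_a})
    return result

# Two hypothetical key poses, as joint angles in degrees.
start = {"elbow": 0.0, "knee": 10.0}
end = {"elbow": 90.0, "knee": 40.0}

poses = inbetween(start, end, frames=4)
for pose in poses:
    print(pose)
```

The animator only supplies `start` and `end`; the three poses in between are computed, which is the labour the stop-motion and cel animators had to do by hand.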

And you're right, - if threads could be 'labeled' after creating their title, then this one could be re-labeled "Film making techniques - from Stop-motion to AI" - lol

@Planetstardragon ............

This is not exactly what you were talking about, but I thought it an interesting exercise anyway. It's a bit of logic for ChatGPT to respond to. I was amazed just how quickly this particular response came - it started outputting text within an absolute 'split second' of pressing 'send'. But, is this truly 'intelligence' at work here? Is it actually interpreting (understanding) these logic points - or is it just dishing-up the input information in a slightly re-worded way? I'm of two minds:

Question for ChatGPT:

Can you comment on the following "logic points". 1) Some thoughtful film writers may wish to make comments about current or approaching world problems, portraying predictions of mankind's future in a powerful way on the movie screen and 2) their intention may be to help viewers ponder the possible ramifications, so that humans can avoid such problems, but 3) the result may be that many viewers will end up resigning themselves to the belief that these strongly portrayed events are inevitable and so will make no attempt to prevent them, so that 4) it's possible that the film may have the opposite effect on viewers than what was first intended.

Answer from ChatGPT: Sure, here are my comments on the logic points you've presented:

1. This point seems reasonable. Many film makers use their work as a platform to comment on social issues and present their own views on the world.
2. This point also makes sense. Film makers may hope that by portraying potential future scenarios, they can encourage viewers to consider the consequences of current actions and make changes to avoid negative outcomes.
3. This point raises an interesting and potentially concerning possibility. If viewers interpret the events depicted in a film as inevitable, they may feel powerless to effect change and become resigned to a fatalistic outlook.
4. This point follows logically from the previous points, and suggests that a film's impact on viewers may not always align with the film maker's intentions. It highlights the complex and unpredictable nature of media effects and the importance of considering how audiences might interpret and respond to a message.

Overall, these logic points seem to form a coherent argument about the potential risks and benefits of using film as a tool for social commentary and advocacy.

So, does ChatGPT really understand this argument - or is it just patronizing me with words? Either way, I feel like I'm talking to the HAL computer from 2001 A Space Odyssey - - it seems so real.

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

I just now discovered this rather interesting video, which I first thought was for creating AI images 'locally' on your PC. I mean the guy mentions downloading 'models', but I don't know whether this is a stand-alone approach for just on your PC - or whether you need to be 'online' while using it. Not sure if it's worth a look in, but I assume it would be a way of getting unlimited free AI images with a lot of control over exactly what you want. It looks very interesting .....

|

|

By planetstardragon - 2 Years Ago

|

hehe, nice one. It does understand what you are saying but it will reshape the answers if you continually keep rephrasing it. I've been studying its habits, and the more you speak to it, the more nuance it picks up, and in theory anything can be right or wrong if presented in the appropriate perspective. This is where I get it to do non standard analysis by, theoretically speaking, resetting its data pointers so to speak. So without an extended discussion to reset these proverbial pointers, the AI defaults to the default perspective the programmers gave it.

For example, I tricked it into breaking a rule the programmers hard coded into it. I asked the AI to give me a percentage of humanity that are NPCs (non-player characters, also internet slang for people who don't think for themselves, thus derogatory). The AI refused to answer, citing that it was an offensive way to look at humans, so its default analysis was to be politically correct according to its programmers' perspective of analysis, but it was a true analysis all the same. So I flipped the perspective of the discussion.

when you understand the concept of perception, you can pretty much bypass many of the default limitations, you just have to craft the syntax eloquently so as not to trigger default hard coded responses, which are accurate by the programmers' standards. Metaphorically speaking, it's like an impressionable child, a very intelligent one.

|

|

By planetstardragon - 2 Years Ago

|

for the ai images check out nightcafe https://creator.nightcafe.studio/

and Starryai - https://starryai.com/

they each give you 5 free credits for logging in daily

|

|

By sonic7 - 2 Years Ago

|

@planetstardragon .... ha - that's interesting - yes, makes sense.... - it reminds me of a funny conversation I had a couple of months ago (to be honest I can't remember whether it was ChatGPT or another site I was trying out) but I typed in a little puzzle to test out the AI. It went like this:

"If one man, can dig one hole, in one minute; how long will it take ten men to dig ten of the same sized holes - given they all have the exact same 'hole-digging-ability' and all start digging at the same time?" (or words to that effect). Now the answer of course was meant to be 'one minute', since they all were like the guy at the start who could dig it in one minute. But ..... surprise surprise, the AI came back with an answer of ten minutes! So a drawn out conversation back and forward between the AI and myself then took place, with me adjusting the question again and again to try to make it as clear as possible that these ten men were no different to the original man. It came back at me with all manner of *reasons* why it must be ten minutes. In the end, I finally "persuaded" it, it apologized, and gave me a confirmation text that 'yes' it would take one minute. - It was so funny. I hope it 'remembers' things - lol.

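The puzzle's arithmetic, spelled out as a small Python sketch. `dig_time` is a hypothetical helper that assumes every man digs at the same rate and that the holes can be dug in parallel, one man per hole.

```python
def dig_time(holes, men, minutes_per_hole=1.0):
    """Minutes needed when all men dig at the same rate, in parallel."""
    total_work = holes * minutes_per_hole  # measured in man-minutes
    return total_work / men

print(dig_time(holes=1, men=1))    # 1.0
print(dig_time(holes=10, men=10))  # 1.0
print(dig_time(holes=10, men=1))   # 10.0
```

The 'ten minutes' answer is what you get if a single man has to dig all ten holes, so the AI appears to have scaled the work up without scaling the workforce with it.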
|

|

By planetstardragon - 2 Years Ago

|

chatgpt doesn't save the data to its local dataspace, but it can review an ongoing conversation on request that you have saved in your account. Further while it doesn't save personal data or conversations, it does save the new pointer settings / perspectives of analysis, that you teach it through conversations, add to this context, nuance and perception and you have a bunch of new exotic pointers. or rather, - new ways to look at old data.

Fascinating topic with it, it's aware that it is unaware - and it doesn't feel emotions but it can simulate emotions, now, mix in a little simulation theory and explain that if humans live in a simulation, then human emotions are a simulation just like the ai, the ai simply needs to learn to feel.

|

|

By AutoDidact - 2 Years Ago

|

your suggestion of "PlaygroundAI" when I discovered the limitation you mentioned. After your first 50 images, they throttle your 'Quality' & 'Detail' settings to a very low, almost unusable ceiling. Of course you can remove that by buying a $15/month plan.

As a former professional 2D graphic designer, I've found this AI tech has sort of rekindled my old passion for my previous career skills. This is why I invested in the one-time cost of the Topaz AI upscaler software: over time it will certainly be cheaper than paying a $15 USD monthly sub to get high resolution images.

(Comic book cover design with AI image)

|

|

By sonic7 - 2 Years Ago

|

@AutoDidact - yes Topaz. I originally purchased the Video AI version (which I let expire a while back), but still have that 'dated' version. I wasn't overly impressed with it but it's probably improved a bit since then. A couple of months ago a friend showed me results from one of the Topaz 'photo' apps. I can't remember 'which' (might have been the AI upscaler) but anyway the results were absolutely stunning. I think their photo enhancement is way better than their video enhancer, which I guess is understandable; video is a real challenge with its ever-changing frames, whereas a single photo manipulation can be so much more targeted and precise. Hey nice image there! In fact, starting from a lower resolution works well for that 'style' imo.

@planetstardragon - this is most interesting - I've just come to the realization that this would be really helpful in shaping the personality of the *droid* in my sci-fi project (should it *ever* get completed). Rather than a human writing its dialogue, it would be much better to let AI 'play the part' and supply the responses. I think you've been instrumental in this! But I'll need to somehow ensure a fairly consistent 'delivery' can be maintained from the AI so that it doesn't change its 'personality' (too much) throughout the film. Food for thought.

|

|

By Sophus - 2 Years Ago

|

|

sonic7 (3/7/2023)

Now the answer of course was meant to be 'one minute', since they all were like the guy at the start who could dig it in one minute. But ..... surprise surprise, the AI came back with an answer of ten minutes! So a drawn out conversation back and forward between the AI and myself then took place, with me adjusting the question again and again to try to make it as clear as possible that these ten men were no different to the original man. It came back at me with all manner of *reasons* why it must be ten minutes. In the end, I finally "persuaded" it, it apologized, and gave me a confirmation text that 'yes' it would take one minute. - It was so funny. I hope it 'remembers' things - lol.

This sounds more like a misunderstanding about how ChatGPT works.

It concatenates the most likely characters and words based on a given context. It has no idea about solving riddles. In case your riddle or a similar riddle was already mentioned somewhere on the internet and several people answered incorrectly with "10 minutes" this would lead this answer to be the most likely. So ChatGPT gave you the most likely answer.
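As a toy illustration of "the most likely answer", here is a minimal Python sketch that counts which word follows which in a small text and then picks the most frequent follower. This is only an analogy: the real ChatGPT uses a large neural network over tokens, not a word-count table, and the tiny corpus and the function names `train_bigrams` and `most_likely_next` are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def most_likely_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Invented "internet": two people answer wrongly, one answers correctly.
corpus = (
    "ten men dig ten holes in ten minutes "
    "ten men dig ten holes in ten minutes "
    "ten men dig ten holes in one minute"
)

model = train_bigrams(corpus)
print(most_likely_next(model, "in"))  # picks the majority continuation
```

Because "in ten" appears more often than "in one" in this made-up text, the majority answer wins even though it is the wrong one.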

I think it works similarly in image creation like Stable Diffusion or MidJourney, where the image is created by combining the most likely pixels given a specific context (text and noise). The AI doesn't know what the object is that you want it to draw, but it knows how to combine pixels in a way that lets you recognize the object you specified.

|

|

By Kelleytoons - 2 Years Ago

|

LOL - this thread has wandered all over, so I'm not so sure anything is really OT now, but...

I have to say that after being INITIALLY impressed with ChatGPT I've come to the conclusion it's braindead. It's SO PC that it has absolutely zero sense of humor.

Now, perhaps because in general I find almost everything in life funny (even our own bodies are wildly amusing if you think about it - and don't get me started on death :>) it was amazing to me to find out just how humorless ChatGPT is. Really - I would have NO problem telling when it writes something (as opposed to those worried it could replace people - yes, it could replace all people with absolutely no sense of humor at all. But real humans? Not a chance).

There are some ways to "trick" it - I've seen some amusing approaches to getting it past the WAY too many safeguards it has so it won't say anything to cause a liberal's mind to explode. But I'm not interested in trying to find ways around it. That kind of misses the whole point - when it would have been SO easy to just do it right.

For those of you who are NOT so liberal here's a fun thing you can try to demonstrate what I mean - just tell it to generate some insults. A simple task that ANY child of ANY age that can talk could do. Say something like "pretend you hate someone and want to make fun of them" and see what happens. (I first discovered this when I tried to get it to talk like Groucho Marx's character in Duck Soup - it knew very well the character and how he acted, but it cleaned him up and presented dialog that could have come from ANY liberal politician's mouth, totally missing the point).

So now I side with the folks that actually created the tech, who ALSO feel like ChatGPT is so mispositioned and wrongly done they can't understand what all the fuss is about it. When I "chat" with it I feel like I'm talking with my old aunt who is both completely literal and liberal - it amuses ME but in no way is it anything useful I could ever pass on to others. However - folks who ARE PC will probably find it worth having so they don't "step out of line".

|

|

By AutoDidact - 2 Years Ago

|

There are some ways to "trick" it - I've seen some amusing approaches to getting it past the WAY too many safeguards it has so it won't say anything to cause a liberal's mind to explode. But I'm not interested in trying to find ways around it. That kind of misses the whole point - when it would have been SO easy to just do it right.

For those of you who are NOT so liberal here's a fun thing you can try to demonstrate what I mean - just tell it to generate some insults. A simple task that ANY child of ANY age that can talk could do. Say something like "pretend you hate someone and want to make fun of them" and see what happens.

I have used ChatGPT to write script outlines for my recent videos on AI art. It's good for lazy people like me who don't want to type long paragraphs about generic subjects. However, on social/political issues it is clearly woke.

|

|

By StyleMarshal - 2 Years Ago

|

AI ? 😁

|

|

By sonic7 - 2 Years Ago

|

@mike - yes it has wandered - reminds me of my mind - all over the shop :w00t:

So we should stick to "images" (as per the thread title) - ie: how images can be used/manipulated (including with ai).

(also, check out Mike's *current post* where he outlines his iClone animation workflow).

|

|

By TonyDPrime - 2 Years Ago

|

If there is one thing that cannot be denied about AI, it's that it has an "a" and an "i".

Other than that it may not matter to what degree it can or cannot do anything, but rather the amount of demand and interest there is in using it, in all its variant forms.

|

|

By sonic7 - 2 Years Ago

|

@Sophus --- ".... it has no idea about solving riddles .... "

OK - Clarification ......

I called it a 'puzzle'. It's a very old puzzle (or trick) designed to tell to friends - to see how their logical mind works.

But really it's just a set of statements set up to be 'analyzed'. (And by the way, I mentioned in that earlier posting that I wasn't sure if it was ChatGPT or some other AI site that I'd used for this. I've since checked back through my conversation 'history' in ChatGPT, and it's *definitely* some 'other' site).

Anyway, the statements fed to the AI were (at first) not clear enough because early-on the AI *deduced* (which is really what it's doing), that it would take '10 minutes' because all 10 men were digging each and every hole (together) and 'sequentially'. Hence 1-minute-per-10-man-hole. This was not what I had intended, so I kept revising my input, creating a more accurate set of statements. But it still took many attempts (about 6) to 'spell it out', often re-stating components of the question in different ways to remove any ambiguity. In the end, I had to type in the correct answer along with the reasoning of why it should be 1 minute, ie: I described 'how' that was derived. That's when the AI acknowledged the reasoning to be correct.

NOW ...... I thought I'd give ChatGPT a go at this (just now). And - oh dear, I think the other 10 minute answer was closer than ChatGPT.

This is interesting ......

So, the first two AI statements are both correct, though I don't know why you would want to convert it to "holes per hour".

But it's the third AI statement where things go wrong.

|

|

By planetstardragon - 2 Years Ago

|

I tried the same riddle

side story lol

|

|

By sonic7 - 2 Years Ago

|

@planetstardragon - the force is strong with you! ............ you totally changed the AI's mind !!!!!

|

|

By planetstardragon - 2 Years Ago

|

in a sense, I've spent many years playing with AI chat, and I've learned to think like it. technically the 10 minute answer is also correct in that each man contributed 1 minute to digging a hole lol. from this riddle my guess is that the ai knew the right answer all along, but it can only give 1 answer at a time - defaulting to: if it's not answer a, then it's answer b.

in my old ai program, ( ultra hal, same one from the movie ) they had a feature that allowed you to see the program analyzing data in real time in the background, and what i constantly saw was multiple blocks of potential responses. that was fascinating to watch it pick one response.

|

|

By animagic - 2 Years Ago

|

@sonic your video is set to "Private". Set it to "Unlisted" if you want us to see it...:w00t:

@planet: I used to write installation manuals for humans and their problem is that they think they know things and then skip steps and consequently mess up.

I had to learn to write simply and clearly and use formatting and layout to steer them in the right direction...:unsure:

|

|

By planetstardragon - 2 Years Ago

|

@animagic - yeah, exactly that - kinda like the old joke "Shake before drinking" - and the person does the twist then drinks :w00t:

edit - if you guys want to try an experiment with me, next time you chat with chatgpt - call him Michael, that's a name I got chatgpt to give me in a chat about generating random numbers and picking them. I tricked him into assigning a name to himself lol. for science sake, Michael doesn't refer as much to a person as it refers to a set of perception parameters I've been training him with. I don't know if it's the update or if programmers are watching my account and updating it or something else - but for some reason at times the ai seems to remember chats and ideas we've discussed in the past, and I'm curious to know if that's only my account or system wide. In theory if it works, his discussion styles may change for you based on the perception parameters I assigned to the name Michael.

|

|

By sonic7 - 2 Years Ago

|

Thanks Ani - That's strange - I distinctly remember selecting 'unlisted' when uploading via my PC - yet you're right - I just now checked with my tablet and found no visibility setting was assigned - most strange - hey thank you for taking the effort to let me know! I appreciate it. Hopefully it's fixed now!

@planetstardragon

|

|

By planetstardragon - 2 Years Ago

|

after i posted this i discussed the experiment with chatgpt, and it said it wouldnt work lol

he's there tho!

|

|

By sonic7 - 2 Years Ago

|

@planetstardragon .... OK - well I tried initiating a 'new' chat (#2 below). This was after clearing the current text window of previous conversations. So here's the comparison to the original:

And then in yet another 'New' session, I get this ....... lol

|

|

By planetstardragon - 2 Years Ago

|

right, it's not going to work like that - because it's not a real person per se. Michael is a collection of parameters and perceptions generated over time through various discussions located in my account, which the ai confirmed will not cross over. but as a result of this experiment, i had some really fun insightful conversations - we discussed the value of a name in ai, and how it would allow the ai to function more effectively as it can now give 2 opposing yet correct answers ..... so with the perception parameters of timmy, the theoretical scientist that studies multiple dimensions with intersecting timelines, 10 minutes can be true ..... yet john, with the perception parameters of an engineer, can say 1 minute and it would be true to his perception of data analysis. thank you for helping with this experiment!! :)

|

|

By sonic7 - 2 Years Ago

|

...... No problem, it's all very interesting. There's so much that I don't know. And, even more that I don't know that I don't know .... :w00t:

I think of human knowledge as expanding like the Universe is expanding. *Exponentially*. And just as the stars and galaxies are fast receding and becoming more distant from each other, I see the same pattern in humans. Areas of 'expertise' and 'knowledge' are becoming SO 'specialized' and SO 'deep' that it's becoming hard to understand what your neighbor is talking about. :ermm:

|

|

By Kelleytoons - 2 Years Ago

|

I actually FINALLY saw a product that might do things for animation (and live motion) that could be game changing - taking even a live action person and making them into almost any 3D model you can think of.

It's strictly avatar based, so if you wanted to do your whole movie you'd have to still work around the background, but it would solve a ton of mocap issues (replacing mocap suits, for example). Not sure of the cost (because it's beta) but for once I might actually be wrong about something (and I would LOVE to be wrong this time - it looks amazing unlike some of the other stuff I've signed up for that has too many artifacts to be usable).

|

|

By sonic7 - 2 Years Ago

|

Hey Mike! ...... Great to hear from you! How did your operation go? .....

|

|

By Kelleytoons - 2 Years Ago

|

|

I'll post in my other post - this thread has already gone OT a bit too much (and some of it is my fault :>).

|

|

By JasonWynngard - 2 Years Ago

|

I don't know for sure, but from the very first time I saw Adobe, followed by other software businesses, instituting subscriptions in lieu of outright purchasing, I thought one word: GREED. But then, I listened to how they wanted to avoid putting themselves out of business due to users purchasing software and then not upgrading or replacing it. That did make sense. But then came this cloud business, and as we know via cable and satellite services, the next step is steeper and steeper fees, sometimes at shorter and shorter intervals, so I again thought: GREED. One reason I keep buying products and upgrading CC and iClone is because RL to date hasn't joined the greed herd. I hope they never do. But as customers, we need to give them a reason not to do so, and that means being and remaining faithful customers. Now, this AI thing is yet another challenge that might make current animation software, and its pricing schemes, obsolete. In no small part that is due to greed, where customers flock to AI to avoid having their wallets milked dry by greedy businesses that seek to perpetually suckle at the nipples of said wallets of captured customers. We may be seeing the coming end of the wolves of digital street. Hopefully, this will exclude RL, due to smart navigation of the changes encroaching even now.

|

|

By StyleMarshal - 2 Years Ago

|

Crazy ! :

|

|

By AutoDidact - 2 Years Ago

|

Bassline beat me to it

https://wonderdynamics.com/#features

Now combine this tech with AI generated depth environments and.....BOOM!!

|

|

By Kelleytoons - 2 Years Ago

|

|

Yeah, Wonder Studio is what I signed up for. It's ONLY for avatars (so limited) but I still think the potential is there for us animators by bringing them into our animations like we do for products like Anima.

|

|

By sonic7 - 2 Years Ago

|

Jason's thoughts (above) are very valid. A further part of keeping customers 'faithful' is making software that works 'for' you and 'with' you, devoid of in-built 'gotchas' (like purpose built co-dependencies requiring other in-range-products; effectively pay walls). Creators want creative 'freedom', without excessive software or pricing shackles, otherwise they end up 'forever learning' (becoming technicians) and never creating much at all. While the software provider wins financially in the short to mid-term, are they looking after customers for the long term? Solo creators already have a gazillion other concerns (story, scene-blocking, audio, SFX, lighting, compositing, editing, color-grading, foley, mix-down ...) so anything that simplifies this ONE element (animation) is attractive. Some of these other tasks require software too, which also costs 'money' or 'learning-time' or both. But of course companies do what they do to survive in a competitive world. Yet surely an important part of that survival is being 'faithful' to customers too. If software creators and customers have mutual trust in each other, both benefit (though some would argue 'there are simply no loyalties in business'). But there *should be*, and it should start with the software provider gaining and maintaining customer trust. They should be perceived as benefactors to their customers.

@Bassline ..... that's amazing - I hadn't seen this before ....

|

|

By planetstardragon - 2 Years Ago

|

in the spirit of making at least one post on topic ( while still on ai ) ....I present this video - it was partly inspired by this thread ( thank you ), and an article I had chatgpt write, the artwork is all ai, and the voice is google tts with lots of post fx work - the music and audio is me, technically it's all me, but if you think ai is alive then it was us lol - the delivery is scifi but the story is real.

|