|

By illusionLAB - 8 Years Ago

|

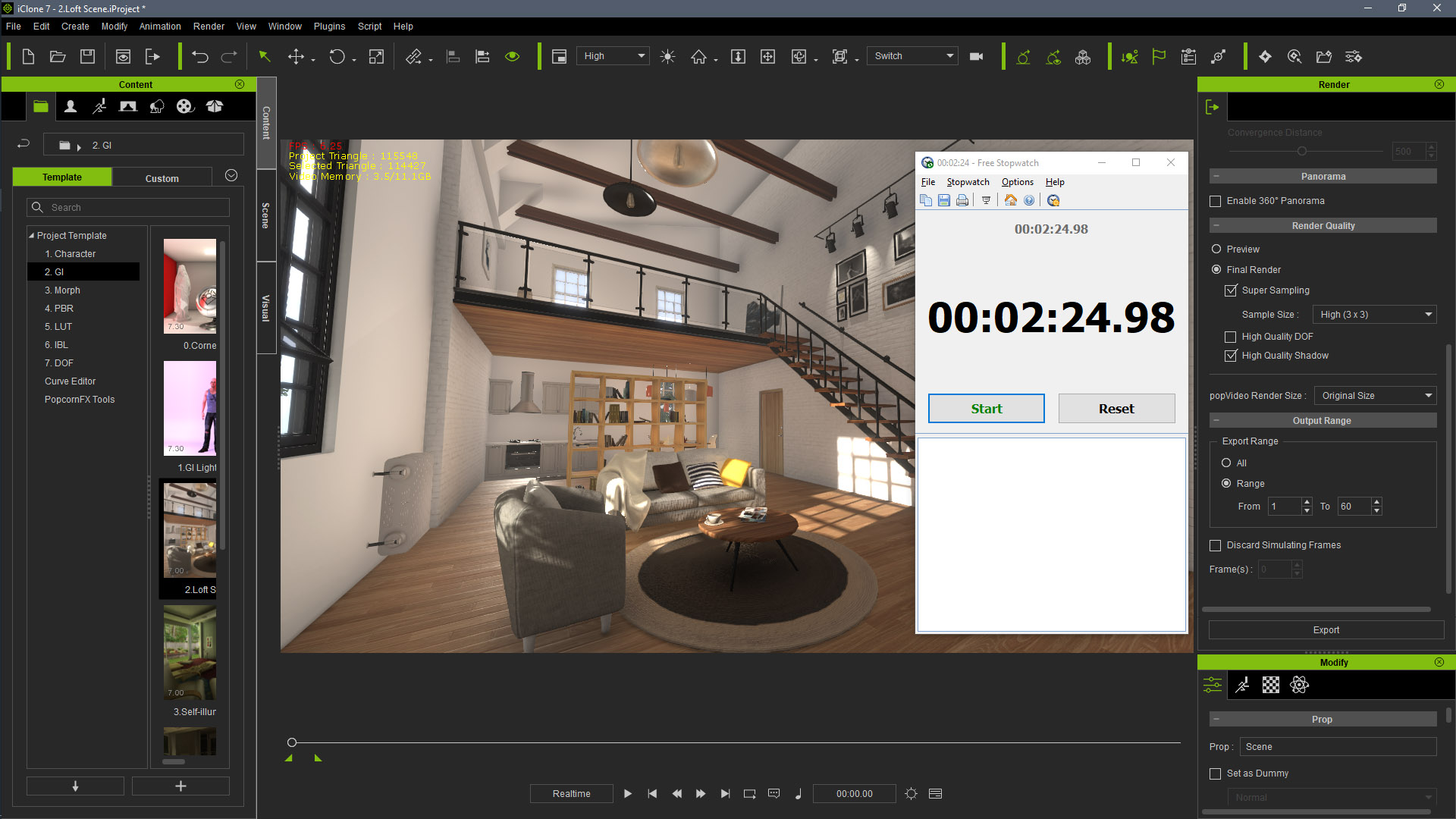

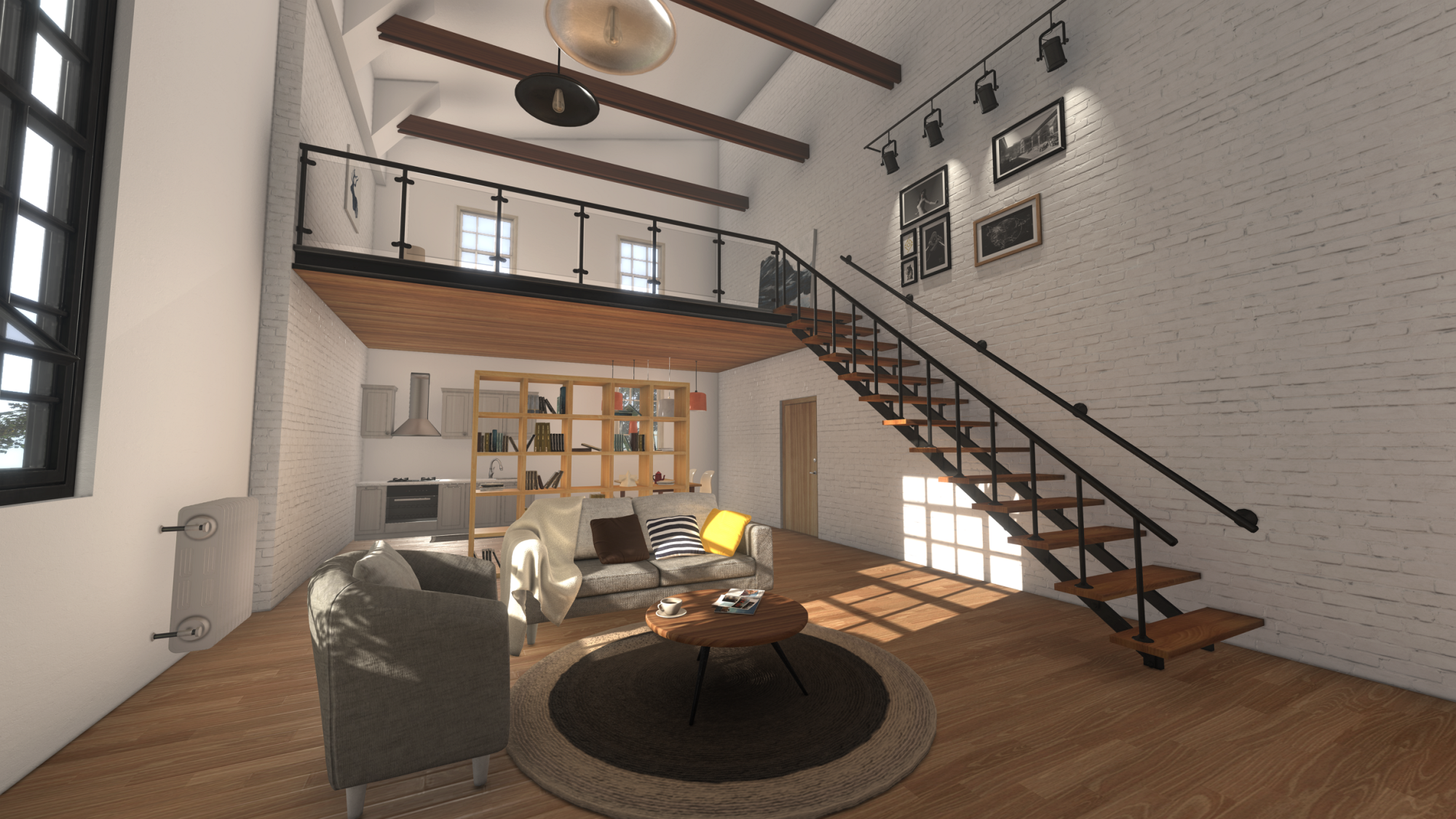

I propose we standardize a 'benchmark' with the "Loft Scene", which is in the default iClone project templates (GI category). It's a pretty stressful scene as almost every power-hungry feature is enabled (except DOF). It's important for everyone participating to use the same settings. I've pasted a screen grab on the image, but in simplest terms: render range the first 60 frames, export frame rate 24 fps, UHD 3840x2160, PNG sequence, everything else set at highest quality. Also, leave your viewer at the default size for your screen, as we have determined small/large sizes affect render times (and quality!?!?).

card: 2070 RTX

24 frames render time: 3 min 10 sec

2560x1440 monitor

|

|

By 4u2ges - 8 Years Ago

|

|

Also, leave your viewer at the default size for your screen, as we have determined small/large sizes affect render times (and quality!?!?).

In other words: hide the taskbar, then in iClone press CTRL+7, then choose "Render>Render Image" from the menu and detach the "Render" tab. Right? Is that how you rendered? Or did you use the "Standard" workspace?

I will post my results shortly.

|

|

By duchess110 - 8 Years Ago

|

Hi illusionLAB

Did as you suggested for the settings. My test was only just quicker: 3 mins.

Card Titan X Pascal

24 frame render

Monitor 2560x1440 IIYama Pro Lite

I also tried it the way 4u2ges suggested; the time was just that bit quicker: 2 mins 30 seconds.

|

|

By 4u2ges - 8 Years Ago

|

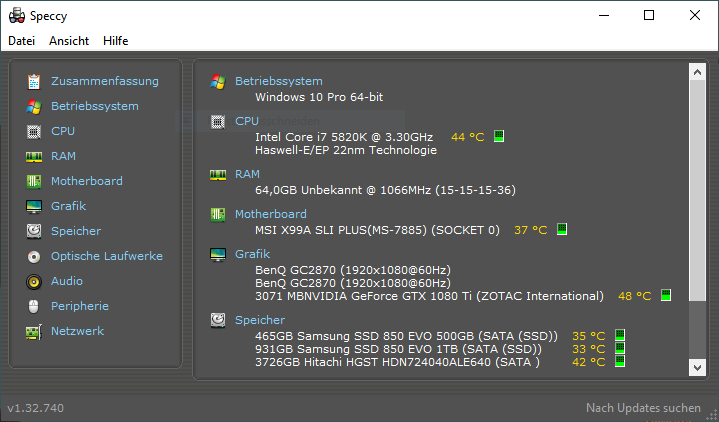

GTX 1080Ti

1920x1080 monitor

So I did 2 tests:

1. "Standard" Workspace - CTRL+6

2. "Full Screen" Workspace - CTRL+7 (with detached render tab)

Though, there will always be a difference in render performance between those who use 2K and 4K monitors.

On a 4K monitor the render would be a bit slower, but the quality better, I believe.

|

|

By Kelleytoons - 8 Years Ago

|

Maybe after we have some more results someone will compile them all in a chart.

I like the fact this is frame based (which is all I ever do) and at 24fps (again, ditto). I only have HD monitors, though, but I do have dual ones so perhaps I can put something a *bit* different together.

|

|

By Rampa - 8 Years Ago

|

|

Might be worth examining the output closely to determine if the 3X ss is creating a better image. Rendering in preview will get you 66% faster, probably. ;)

|

|

By wildstar - 8 Years Ago

|

|

My two cents: the supersampling algorithm used by iClone is old and uses the CPU to do its work, so no matter what GPU you use, the correct thing is to leave the render in Preview and make the comparisons there, because then the time measured is only the time the GPU takes to copy the frames from the iClone buffer. Peace!

|

|

By 4u2ges - 8 Years Ago

|

To compare Preview vs 3x3:

The best (DSR+3x3)? ....

|

|

By Kelleytoons - 8 Years Ago

|

|

I assume when you say "hide the taskbar" you really mean "hide the menu bar", but I don't see a way to do this.

|

|

By 4u2ges - 8 Years Ago

|

|

Kelleytoons (12/30/2018)

I assume when you say "hide the taskbar" you really mean "hide the menu bar", but I don't see a way to do this.

No, I really meant the taskbar. It does occupy some space on the screen (especially in my case, where I have it on the left and it is quite wide). So hiding it gives almost a full screen.

Almost, because as you said, there is no way of hiding the iClone menu.

|

|

By Kelleytoons - 8 Years Ago

|

|

Oh, okay -- I don't have the taskbar on my second monitor but when I saw your screen shots I didn't see the iClone menu bar.

|

|

By Kelleytoons - 8 Years Ago

|

|

Okay, my results are similar to Gergen -- 1:40 (full screen -- I didn't try it any other way). I moved the render window off to my second monitor. Kind of surprised my Titan isn't faster than the 1080ti but I will be interested to see if any RTX can best those times appreciably (for the price they would need to be around twice as fast -- assuming you buy the same memory).

|

|

By 4u2ges - 8 Years Ago

|

Kelleytoons (12/31/2018)

Okay, my results are similar to Gergen -- 1:40 (full screen -- I didn't try it any other way). I moved the render window off to my second monitor. Kind of surprised my Titan isn't faster than the 1080ti but I will be interested to see if any RTX can best those times appreciably (for the price they would need to be around twice as fast -- assuming you buy the same memory).

But what was the screen resolution on the second monitor Mike?

We can start taking bets... :)

I'd say RTX 2080Ti would render it @2K monitor in 1:10

|

|

By illusionLAB - 8 Years Ago

|

The point of the exercise is to maintain a "standard" (as best we can)... it's not about workarounds or 'alternate' methods for speed or marginally better renders. Stick to the guidelines so we get a pretty good sense of how iC performs on various cards - surely that's the point of a "benchmark" isn't it?

So, the procedure should be:

1. open iClone

2. load "loft scene"

3. adjust render settings

4. render

5. post your time

|

|

By Kelleytoons - 8 Years Ago

|

4u2ges (12/31/2018)

Kelleytoons (12/31/2018)

Okay, my results are similar to Gergen -- 1:40 (full screen -- I didn't try it any other way). I moved the render window off to my second monitor. Kind of surprised my Titan isn't faster than the 1080ti but I will be interested to see if any RTX can best those times appreciably (for the price they would need to be around twice as fast -- assuming you buy the same memory).

But what was the screen resolution on the second monitor Mike?

We can start taking bets... :)

I'd say RTX 2080Ti would render it @2K monitor in 1:10

I'd take that bet -- but we are talking facts, not speculation here. Someone ought to be able to do that test.

|

|

By sonic7 - 8 Years Ago

|

GTX 1070

1920 x 1080 screen (MSI laptop)

Used the laptop's 'Sport' mode - as opposed to 'Comfort' or 'ECO' modes (which would be slower)

60 frames range selected, 24 frames rendered. (3840 x 2160 PNG sequence)

|

|

By wildstar - 8 Years Ago

|

|

The point is to measure how fast the GPU is at rendering, but if you use supersampling the benchmark will not be precise, because if someone renders with a 1080 and an old AMD FX, and another person renders with a 1080 and a new Ryzen 7, the times will be different. Actually, the only thing that makes the iClone render slow is the supersampling. If you use Preview, iClone uses just the GPU. That is why I complain so much about wanting Reallusion to add TAA for rendered frames: TAA runs on the GPU, supersampling does not.

|

|

By 4u2ges - 8 Years Ago

|

|

illusionLAB (12/31/2018)

The point of the exercise is to maintain a "standard" (as best we can)... it's not about workarounds or 'alternate' methods for speed or marginally better renders. Stick to the guidelines so we get a pretty good sense of how iC performs on various cards - surely that's the point of a "benchmark" isn't it?

So, the procedure should be:

1. open iClone

2. load "loft scene"

3. adjust render settings

4. render

5. post your time

We have to be more specific (that is why I asked how you rendered, given I did not see an actual screenshot of iClone in your post). And the default viewport size can be different for everyone.

When I open iClone, my default workspace is different from the "Standard". And my "Standard" may be different from someone else's (I already see the difference between my standard and duchess110's, for instance).

That is why I'd say we do a full screen as well (this would ensure benchmark precision).

|

|

By illusionLAB - 8 Years Ago

|

OK, this is strange. I deleted my render and started again... this time the "standard" clocked in at 2:54 and the "full screen" clocked in at 2:54. I do think monitor size is factoring in - which it shouldn't - but as iClone essentially does everything on the GPU I guess that's the price you pay.

Wildstar... not sure where you're getting your info, but iClone's super sampling is actually the GPU outputting an image that is either 2x or 3x your render output setting (it's clearly stated in the User Guide). It's an old school way of getting fewer jaggies. In fact, I just rendered the sequence again, once with 3X SS and once with it turned off, and the result is exactly the opposite... that is, the CPU works harder with the SS turned off! It's not much, so it's probably because it's writing the files quicker. Have a look at this image: with 3X SS the CPU is at 6% and the GPU is at 85%; with no SS the CPU is at 9% and the GPU is at 47%. I took these screen grabs when the renders were 50% complete just to make sure there were no caching spikes.
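To put the GPU load in perspective, here is a back-of-the-envelope sketch of the pixel counts involved. It assumes the 2x/3x factor applies to each axis, which is only one reading of the User Guide description and is not confirmed in this thread; the function name is made up for the example.

```python
def supersampled_pixels(width, height, factor):
    """Pixels rendered internally per frame.

    Assumption (not confirmed here): the supersampling factor scales
    each axis, so 3x means 3x the width AND 3x the height.
    """
    return (width * factor) * (height * factor)


UHD = (3840, 2160)  # the benchmark's output frame size

for factor in (1, 2, 3):
    pixels = supersampled_pixels(*UHD, factor)
    print(f"{factor}x: {pixels / 1e6:.1f} megapixels per frame")
```

If that assumption holds, 3X SS means roughly nine times the pixels of a plain UHD frame, which would fit with the GPU usage nearly doubling in the screen grabs.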

|

|

By sonic7 - 8 Years Ago

|

Yeah - like 4u2ges says - it's actually ultra critical which work space 'version' you use.

After reading Mark's recent *5 point* guide to this test - I decided to re-do mine.

This time I got 4 mins 2 secs for the 'standard' test - I'd earlier got 3 mins 38 secs.

I repeated twice more - and 4 mins 2 secs each time.

Then I hit CTRL + 2 (which is what I may have used originally) - and the real-estate hardly changes at all - yet then I got 3 mins 50 secs ....

So yeah .... it's a matter of "standardizing" - cos it's ultra critical what you use .

|

|

By justaviking - 8 Years Ago

|

Your iClone preview window size affects rendering times.

This is a known thing (for years, now), and has been reported to iClone many, many times on the forum, and also in Feedback Tracker.

Here is a very recent one.

https://www.reallusion.com/FeedBackTracker/Issue/Add-option-to-DISABLE-Preview-Window-updates-while-rendering-to-improve-performance

The first sentence mentions the impact of the window size. Look at the timing data in that Issue. It varies drastically with preview window size.

That is why some people here are getting specific on using "Full Screen" or other techniques to help standardize it as much as possible.

|

|

By Rampa - 8 Years Ago

|

I would suggest 1920 by 1080. My 4K monitor will be 4 times that full screen! :)

When making tutorial videos, I always set my screen recorder to 1920 by 1080, and then size the iClone window to fit perfectly in the marquee. Perhaps it is a good idea for anyone with a monitor larger than 1920 by 1080 to do something like that. That way it is ensured that every test will use exactly the same screen dimensions.

Shout out if you're doing the test at smaller than 1920 by 1080.

|

|

By wildstar - 8 Years Ago

|

I read this article on Wikipedia about supersampling. At the time I read it, the article said it is a CPU-cost feature, not GPU; now the article says it is GPU. Either someone changed it or I imagined it. By the way, it is an old method and is one of the reasons the iClone render is slow and not real-time like Unity or Unreal, where the limit on render speed is the speed of the hard drives... :) MSAA is better (MSAA is native in Unity, but you can choose not to use it and use TAA instead if you want).

the article

https://en.wikipedia.org/wiki/Supersampling#Computational_cost_and_adaptive_supersampling

|

|

By sonic7 - 7 Years Ago

|

From my understanding, this is the benchmark procedure Mark is requesting we all do:

(and no, my PC doesn't render this fast - lol)

|

|

By RobertoColombo - 7 Years Ago

|

Hi,

here are my results with a 2080 Ti.

1. "standard-screen" mode (CTRL+2), but only with the timeline and the render control window enabled: 3 mins 16 secs

2. "standard-screen" mode (CTRL+2), but with the timeline off and no control windows within the iClone space (render control window placed on another monitor): 1 min 41 secs

3. "full-screen" mode (CTRL+7): 1 min 30 secs

It is evident that iClone loses time during rendering trying to fit the chosen resolution to the monitor characteristics. Here we are doing a 4K render, and the bigger the viewer window, the better. Compare with the results that I found here: https://forum.reallusion.com/Topic292155.aspx?PageIndex=3. There the render was 1K, and the biggest possible viewer window was not the best scenario because it effectively goes beyond 1K, so iClone seems to lose time generating the data for the extra pixels.

Note: I tried to render the 4K project with the iClone window minimized (Windows key + "m" while iClone is rendering) but there is no change.

I think, like surely everybody here, that RL should make the effort to separate the rendering to the monitor from the rendering to the file, so that the latter is not affected by the monitor characteristics. Peter (RL), if you can, please let us know if this is feasible... there is already a ticket on the FT for this.

Cheers

Roberto

|

By Kelleytoons - 7 Years Ago

|

Roberto,

I guess (because iClone is weird) we also need to know your monitor size.

|

|

By RobertoColombo - 7 Years Ago

|

Hi Mike,

I have 3 monitors set at these resolutions:

1. 1920x1080

2. 1650x1050

3. 1440x900

anyway, it is obvious that the rendering time dependency vs. viewer window size vs. monitor characteristics should be removed.

Cheers

Roberto

|

|

By Kelleytoons - 7 Years Ago

|

Okay -- now that I know I won the bet with Gergun I'm happy <g>.

So it seems the 2080 Ti isn't much better when it comes to iClone rendering, which is no real surprise. Folks shouldn't be buying it for that -- either for the (coming) Iray (where we would *assume* it will be faster -- but it might not be) or for other purposes, but for inside iClone there are much better buys.

I still want a compilation of all this but I'm also interested in knowing what the RTX Titan will do (given the price that may be a long wait until someone has one. Wait -- didn't that new guy post he had two? Or was it an alcoholic dream? I drank WAY too much yesterday. If so, though, perhaps he'll chime in here with those numbers).

|

|

By RobertoColombo - 7 Years Ago

|

Hi Mike,

according to the results reported in this thread, 2080 TI vs. 1080 TI is about 15% faster when rendering at 4K on a 1K monitor.

The problem is that the 1080 Ti is out of stock... otherwise I would surely have bought one instead of an expensive 2080 Ti.

Roberto

|

|

By toystorylab - 7 Years Ago

|

My Animation Machine:

Workspace FULL SCREEN - 2:14

Workspace STANDARD (customized) - 2:38

My Render Machine

Workspace FULL SCREEN 1:57

Workspace STANDARD 2:31

I was surprised that my Render Machine (1080 + 32 GB RAM) is faster than my Animation one (1080 Ti + 64 GB RAM)...

But the CPU (i7-7700K @ 4.20 GHz) is better than (i7-5820K @ 3.30GHz)

Any Thoughts?

Fact is FULL SCREEN is faster, never used it before.

Question is: is the Quality equal/better/worse in Full Screen?

Could not see difference...

Any Thoughts?

|

|

By 4u2ges - 7 Years Ago

|

|

Okay -- now that I know I won the bet with Gurgen I'm happy <g>

Not so fast Mike :)

I was estimating based on results on my PC (I have an overclocked CPU @ 4.4 GHz).

Looking at Toystorylab's results I can see the dependency on CPU clock speed as well.

Considering Roberto's CPU specs, my ballpark figure appears to be within range.

BTW I corrected the spelling of my name in the quote.

@Toystorylab

About the quality: people report differently. But my personal observation is that quality is better when rendering with a larger viewport as opposed to a smaller one.

I posted some samples here: https://forum.reallusion.com/FindPost394410.aspx

===========================================================

But once again I want to stress the importance of equal benchmark setup (though it is not going to be equal anymore as it seems the CPU is also playing a significant role - maybe saving images to the disk?)

Here is some additional testing to show how different it might be (even with the Windows taskbar visible or hidden, which effectively changes the iClone viewport size):

2:37 - 1920x1080 - Standard Workspace/TaskBar visible.

2:25 - 1920x1080 - Standard Workspace/TaskBar hidden.

1:45 - 2560x1440 - Standard Workspace/TaskBar hidden.

1:24 - 3840x2160 - Standard Workspace/TaskBar hidden.

===========================================================

Based on that I'd say we test with:

1. 1920x1080 screen resolution (sorry 4K monitor owners - you have to change resolution before running a test)

2. Enable Auto-hide for Windows Taskbar.

3. Render with iClone Full Screen mode (CTRL+7) detaching Render Image tab after opening from menu.

@Roberto

The 1080Ti can still be purchased on eBay at a fraction of the original price. There is a small risk. But I know at least 2 people who recently got one from eBay and are quite happy. ;)

|

|

By Kelleytoons - 7 Years Ago

|

Are you a lawyer in RL? Because that's a weasel argument if I ever heard it (me? I was estimating speeds based on, what, reality?).

Nope -- I won, plain and simple.

|

|

By Anaximander - 7 Years Ago

|

|

GTX 1060 6Gb 31min 8sec

|

|

By 4u2ges - 7 Years Ago

|

|

Kelleytoons (1/1/2019)

Are you a lawyer in RL? Because that's a weasel argument if I ever heard it (me? I was estimating speeds based on, what, reality?).

Nope -- I won, plain and simple.

What reality are you talking about? The same 2 GPUs render the same scene at the same resolution with half a minute's difference. That is the reality.

At minimum there is not enough data to make any statements about performance differences and compare anything here (if it is possible at all). We can sure speculate, but let's let the data be collected.

Advocating RL? - you lost me there :Whistling:

|

|

By toystorylab - 7 Years Ago

|

|

Anaximander (1/1/2019)

GTX 1060 6Gb 31min 8sec

For 24 Frames??

|

|

By Rampa - 7 Years Ago

|

|

If the window and render-settings being different resolutions have an effect because of their mis-match, as Roberto has pointed out, then maybe try with render settings set to "Viewport Resolution". Then make it fullscreen.

|

|

By 3dtester - 7 Years Ago

|

2560x1440 screen resolution, iClone default windowed mode

3 min 22

1920x1080 screen resolution, taskbar hidden, iClone fullscreen mode

2 min 51

(GTX 1070)

|

|

By duchess110 - 7 Years Ago

|

So I did the test with both the monitor size and the viewport resolution as a comparison. Both came out at the same speed, but I did notice they were quicker than my previous tests, the window being smaller in size than before. I used full screen for both tests.

|

|

By Anaximander - 7 Years Ago

|

Yes, for 24 frames.

I did another test as per the full screen method and got 25 min 43 sec. Faster, but 75% of the frames were unusable due to white mesh lines showing on props.

|

|

By toystorylab - 7 Years Ago

|

Wow, sorry but that's hefty...

A 1060 is 10 times slower than a 1080?

Sounds ridiculous.

What CPU and RAM do you have??

|

|

By Rampa - 7 Years Ago

|

That much slower and showing artifacts like that would indicate something may not be working right.

Download the latest driver for your 1060 here:

https://www.nvidia.com/Download/Find.aspx

Do a custom install and check the box for "clean install" so it removes the old driver completely.

|

|

By rollasoc - 7 Years Ago

|

Monitor at 1920x1080

PC Spec in my signature.

Followed the steps in the (sonic7) video a few pages back.

My standard iClone window setup : 2mins 25

My fullscreen test: (with toolbar) : 1min 42

Test 3 total fullscreen: 1min 35

Test 4 - The smallest window I could make (all other panels large): Gave up, it was still on Image 1 after 3 minutes.... Then after 10 mins, I had to kill the app, since cancel render wasn't returning.

edit:

rendering at 1080 total fullscreen takes 36 seconds, which is handy to know for non final renders.

|

|

By Anaximander - 7 Years Ago

|

|

Hello toystorylab:

Yes, I agree, a 10 times slower render seems very strange. I hope that someone else with a 1060 can do the test and either confirm or deny my results.

My system is as follows:

Ryzen 7 1700 3 ghz

asrock ab350m motherboard

16 gigs 3000 ram ( auto sets at 2400 in bios)

NVme drive for boot and iclone

Zotac 1060 Amp graphics card.

There could very well be some problems. It's the first time I have ever built a computer from scratch. Lots of learning to do on my part.

I updated the bios and did a clean install of the graphics drivers. Same results. A long time to render and lots of the white line artifacts.

I'm going to try and find some benchmarking software to see if there are any system problems.

As a sidenote, my best results seem to come from rendering in preview with the control 2 workspace. About 11 minutes, no white line artifacts. Interestingly enough I could see artifacts in the viewport window but they did not show up in the render.

Thanks for your interest.

|

|

By toystorylab - 7 Years Ago

|

|

Anaximander (1/3/2019)

I hope that someone else with a 1060 can do the test and either confirm or deny my results.

I'm no tech-guy, so i can't say anything about it except it's a strange behaviour.

So, yes, some 1060 testers needed!

|

|

By animagic - 7 Years Ago

|

Rendering will slow down severely if the VRAM of the GPU is completely filled, and iClone has to rely on CPU rendering. I believe some 1060s have only 3 GB of VRAM, so I hope you have the 6 GB version.

A good tool to check this with is GPU-Z as it will reliably show total VRAM, VRAM used, % GPU usage, clock speed, etc.

If the GPU is working at the top of its power rating, it may do an automatic shut-off for protection (or lower the clock speed), and this so-called "throttling" will hamper performance.

There are also reports about Ryzen and Nvidia not liking each other, but those seem to be unsubstantiated. Also make sure that the PC is actually using the Nvidia GPU in case you have on-board graphics.

|

|

By illusionLAB - 7 Years Ago

|

As a matter of contrast... the same scene (well almost) rendered in Blender 2.80 eevee. 3 min 12 sec... regardless of viewport size (just sayin').

|

|

By The-any-Key - 7 Years Ago

|

Monitor: 1920x1080

Nvidia RTX 2080 8GB

Frame Size: 3840x2160

Standard: 3:00

Fullscreen (no bar): 1:45

Fullscreen (bar): 1:38

Frame Size 1920x1080

Standard: 0:54

Fullscreen (no bar): 0:35

Fullscreen (bar): 0:34

Optimize with fullscreen render: 0:35

|

|

By Kelleytoons - 7 Years Ago

|

|

Could you give us the times on 1920x1080? (The "normal" resolution for most purposes).

|

|

By Rampa - 7 Years Ago

|

|

You'll likely get even better results if you check the box for "Optimize With Full Screen Render".

|

|

By The-any-Key - 7 Years Ago

|

Updated above post with:

Frame Size 1920x1080

Standard: 0:54

Fullscreen (no bar): 0:35

Fullscreen (bar): 0:34

Optimize with fullscreen render: 0:35

|

|

By Kelleytoons - 7 Years Ago

|

|

Thanks (those are almost exactly my times using my Titan XP, so at least it saves me any worry about upgrading. Now if ONLY someone with an RTX Titan could do those... no, that might end up costing me some money :>).

|

|

By The-any-Key - 7 Years Ago

|

The cost difference would be interesting too:

MSI GeForce RTX 2080 8GB AERO was on sale and cost me: 552 USD (regular price 733 USD)

|

|

By Kelleytoons - 7 Years Ago

|

You mean cost difference between the new Titan and your card? No comparison at all - it costs basically an arm and a leg (so you are left crippled :>).

But (and this is a big but) -- if it reduced my render times in half it would be worth it. It would have to be at least that fast -- anything less just wouldn't make any sense whatsoever. However, at this point in time I'm going to assume it will not do that (like I said, if someone can post the times it would be nice to know... and also pretty horrific to know. All at the same time :>).

|

|

By The-any-Key - 7 Years Ago

|

|

I was thinking of all the cards tested. (Best times with monitor 1920x1080, frame size 3840x2160, fullscreen, no bar.) [In brackets: calculated time to render a 10-minute scene at 24 fps.]

| Card | Price | Render time | 10-minute scene at 24 fps |
| --- | --- | --- | --- |
| GTX 2080 TI GamingPro 11GB | 1 348 USD | 01:30 | [15h] |
| GTX 1080 TI mini 11GB | 939 USD | 01:35 | [15h 50m] |
| Titan XP 12GB | 1 315 USD | 01:40 | [16h 40m] |
| RTX 2080 8GB | 980 USD | 01:45 | [17h 30m] |
| GTX 1080 TI 11GB | 916 USD | 01:46 | [17h 49m] |
| GTX 1080 TI 11GB | 916 USD | 01:46 | [17h 49m] |
| GTX 1080 8GB | 722 USD | 01:57 | [19h 30m] |
| TITAN RTX 24GB | 3 127 USD | 02:00 | [20h] |
| GTX 1080 TI 11GB | 916 USD | 02:14 | [22h 20m] |
| GTX 1070 8GB Laptop | ??? USD | 02:42 | [27h 9m] |
| GTX 1070 8GB | 517 USD | 02:51 | [28h 30m] |
| RTX 2070 8GB | 679 USD | 02:54 | [29h] |
| GTX 1060 6Gb | 291 USD | 32:08 | [13d 9h 20m] |
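For anyone who wants to project their own time, the bracketed figures can be approximated with a small sketch like this. It assumes the posted time covers 24 rendered frames and that render time scales linearly with frame count (startup overhead is ignored); the function names are just for illustration.

```python
def parse_mmss(text):
    """Convert a 'mm:ss' benchmark time to seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + float(seconds)


def projected_seconds(benchmark_time, benchmark_frames=24, scene_minutes=10, fps=24):
    """Scale a benchmark time linearly to a longer scene.

    Assumption: the benchmark rendered `benchmark_frames` frames and the
    per-frame cost stays constant for the whole scene.
    """
    scene_frames = scene_minutes * 60 * fps
    return parse_mmss(benchmark_time) * scene_frames / benchmark_frames


def format_duration(total_seconds):
    """Format seconds as e.g. '13d 9h 20m', rounded to the nearest minute."""
    total_minutes = round(total_seconds / 60)
    days, rest = divmod(total_minutes, 24 * 60)
    hours, minutes = divmod(rest, 60)
    parts = []
    if days:
        parts.append(f"{days}d")
    if hours:
        parts.append(f"{hours}h")
    if minutes:
        parts.append(f"{minutes}m")
    return " ".join(parts) or "0m"


for time in ("01:30", "01:45", "02:00", "32:08"):
    print(time, "->", format_duration(projected_seconds(time)))
```

Since a 10-minute scene at 24 fps is 14,400 frames, this is simply the 24-frame time multiplied by 600.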

With these stats, my guess is that the TITAN RTX 24GB won't give you that much more performance (EDIT: seems to be confirmed now). Sure, the 24GB will allow you to render a more complex scene. But the question is: if you optimise your scene by removing polygons that don't affect the result, can you still get the same result with a $1000 card compared to a $3000 card? OK, if you go for 4K, more anti-aliasing samples and bigger textures, you need more VRAM and you get a better quality render.

|

By sonic7 - 7 Years Ago

|

@The-any-key ---- Hey, nice collation !!!

|

|

By Kelleytoons - 7 Years Ago

|

Not sure where you're getting those Titan XP times from, though (my times were around half the RTX times previously posted). But, yes, Cost/Performance is not going to be good for the new Titan no matter what. But I'm not looking at that. At my age the render times are FAR more important than the money spent to achieve them.

If the new Titan DID happen to reduce the times by half it would be worth it even at three or four times the cost. Again, for me it's all about time. Well, not ALL about time -- if I only saw a 20-30% increase it wouldn't be worth it. At least not initially (the costs for the card are sure to come down, although given how it's positioned it's unlikely they will ever come down much. But if they got reduced to, say, $2K and we got 30% increase I'd be tempted).

But so far no one has gotten one and in this particular group it's unlikely we'll see one (the market is not gamers or even 3D artists, but professional folks who need the raw computing power and/or RAM). And there's no way I'm buying a pig in a poke.

|

|

By toystorylab - 7 Years Ago

|

|

Kelleytoons (2/17/2019)

But so far no one has gotten one and in this particular group it's unlikely we'll see one

"Geoffrey James" has got two of them:

https://forum.reallusion.com/399762/Well-I-guess-I-just-got-serious-about-iClone?PageIndex=1

Or am I missing something??

But he never gave a clear answer for this benchmark...

|

|

By Kelleytoons - 7 Years Ago

|

I don't think he got the Titan XP (if he did he got it before it was officially released, since it wasn't out at the time).

I thought (and I could be mistaken) he got two 2080s.

And (again, I might not be remembering right) he posted times that were 30% SLOWER than my own Titan. So that's weird.

EDIT: Sorry, I keep misspeaking. The Titan I'M talking about is the new RTX Titan X -- a VERY different card. So even if that guy got the Titan XP it's not the one that was released about a month after he first posted. And that's the one people are unlikely to ever get.

Hmmm -- I guess that card was released at the very end of last year (you can actually buy it on Amazon right now). So it's possible he got it, but the specs he posted were sure disappointing (and he hasn't come back here, evidently).

Of course, that's fine for my pocketbook <g>.

|

|

By The-any-Key - 7 Years Ago

|

|

Kelleytoons (2/17/2019)

Not sure where you're getting those Titan XP times from, though (my times were around half the RTX times previously posted).

Okay, my results are similar to Gergen -- 1:40 (full screen -- I didn't try it any other way). I moved the render window off to my second monitor. Kind of surprised my Titan isn't faster than the 1080ti but I will be interested to see if any RTX can best those times appreciably (for the price they would need to be around twice as fast -- assuming you buy the same memory).

I took your latest for what I assume was when you tested it with:

Monitor: 1920x1080

Frame Size: 3840x2160

|

|

By Kelleytoons - 7 Years Ago

|

|

Okay, we were talking about 4K (I was thinking my "normal" render -- 4K is WAY overkill for all applications, since folks can't discern more than 1920x1080 at normal viewing distances. But I know there is a "cult of 4K" and I don't want to get into that here).

|

|

By animagic - 7 Years Ago

|

|

Kelleytoons (2/17/2019)

EDIT: Sorry, I keep misspeaking. The Titan I'M talking about is the new RTX Titan X -- a VERY different card. So even if that guy got the Titan XP it's not the one that was released about a month after he first posted. And that's the one people are unlikely to ever get.

He got two Titan RTX GPUs, so that should be flying... It has been available since last month, I believe (@ $2499 or so).

|

|

By argus1000 - 7 Years Ago

|

|

Kelleytoons (2/17/2019)

But so far no one has gotten one and in this particular group it's unlikely we'll see one (the market is not gamers or even 3D artists, but professional folks who need the raw computing power and/or RAM). And there's no way I'm buying a pig in a poke.

I just bought that new nVidia Titan RTX with 24 GB of VRAM. It's sitting on my desk right now, waiting for the technician to come Wednesday to install it (I don't install my hardware anymore. I'm getting too old), as well as the rest of the stuff I bought. I'll let you know the results.

|

|

By Kelleytoons - 7 Years Ago

|

|

Cool, I'll be anxious to hear (please do the benches with 1920x1080, as noted above).

|

|

By The-any-Key - 7 Years Ago

|

My money is on 01:30, if he runs a test with:

Monitor set to 1920x1080

Frame Size: 3840x2160

|

|

By Kelleytoons - 7 Years Ago

|

Well, that would be great for me <g> (save me a TON of money -- if his time comes in under 1 minute I might as well file for divorce, before my wife does).

But I want him to run the more relevant test, at 1080p.

|

|

By argus1000 - 7 Years Ago

|

|

Kelleytoons (2/17/2019)

But I want him to run the more relevant test, at 1080p.

I thought I would do the test as the original poster wanted, and as sonic7 illustrated with a video in this thread.

Monitor :1920X1080

Frame size: 3840X 2160

Is that what you mean?

|

|

By Kelleytoons - 7 Years Ago

|

You can certainly run that, but unlike a lot of the 4K fanatics I believe the far more accurate and usable test would be done at 1080p (so 1920x1080). Otherwise the specs are the same.

But you can run both. Given your GPU that particular test ought to come in at no more than 45 seconds (it certainly won't take you more than a minute to do it so I'm sure you can spare the time).

|

|

By animagic - 7 Years Ago

|

Since upgrading to a GTX 1080 ti I had not done this test. I have a 4K monitor, and I had also never done the test at the lower resolution of 1920x1080, which turned out to make a difference.

I rendered with full-screen optimization enabled. Because there is always some initialization as well as some deviation in the time keeping (starting and stopping the stopwatch) I also looked at the timestamps (in seconds) of the PNG files to get an actual seconds per frame value. Here are the numbers:

| Screen Setting | Frame Setting | Render Time | Sec/frame |
| --- | --- | --- | --- |
| 3840x2160 (UHD) | 3840x2160 | 2:10 | 5.00 |
| 1920x1080 (HD) | 3840x2160 | 1:46 | 4.08 |
| 3840x2160 | 1920x1080 | 0:44.5 | 1.67 |
| 1920x1080 | 1920x1080 | 0:36 | 1.42 |

So for me rendering at a 1920x1080 screen size would give around 20 to 25% speed improvement. And, even though an UHD frame is four times the area size of an HD frame, it takes only three times the render time.
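For anyone who wants to repeat the timestamp approach, here is a minimal sketch. It assumes the rendered frames sit in one folder as `.png` files and that their modification times reflect when each frame finished; the folder path and function names are placeholders, not anything iClone provides.

```python
from pathlib import Path


def seconds_per_frame(folder, pattern="*.png"):
    """Average gap between consecutive frames, from file modification times.

    Using gaps between frames leaves out the initialization time
    before the first frame is written.
    """
    stamps = sorted(p.stat().st_mtime for p in Path(folder).glob(pattern))
    if len(stamps) < 2:
        raise ValueError("need at least two frames to measure a gap")
    return (stamps[-1] - stamps[0]) / (len(stamps) - 1)


def speedup_percent(slow_seconds, fast_seconds):
    """How much faster the quicker run is, as a percentage of throughput."""
    return (slow_seconds / fast_seconds - 1) * 100


# Total render times from the table above, in seconds.
print(f"UHD frames: {speedup_percent(130, 106):.1f}% faster on the 1920x1080 screen")
print(f"HD frames:  {speedup_percent(44.5, 36):.1f}% faster on the 1920x1080 screen")

# Hypothetical usage on a real render folder:
# print(seconds_per_frame(r"C:\renders\loft_test"))
```

Expressed as throughput, both comparisons land inside the 20 to 25% range quoted above.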

|

|

By The-any-Key - 7 Years Ago

|

Added your time to the table in my earlier post (monitor: 1920x1080 frame size: 3840x2160).

1:46 is the same time, on the second, as the other GTX 1080 TI.

|

|

By argus1000 - 7 Years Ago

|

I finally had my computer overhauled. New 24 cores/48 threads CPU, new motherboard, 64 GB OF RAM and finally the nvidia Titan RTX with 24 GB of VRAM.

I had a little snag while installing it. My technician had to phone Asus, maker of the motherboard, for them to modify the BIOS so we could synchronize the RAM with it.

ANYWAY, here are my benchmarks for "the loft", with all settings at default, 24fps, 60 frames.

With frame size 3840x2160 2 minutes

With frame size 1920x1080 31 seconds

...and, most important, iClone's overall sluggishness has entirely disappeared. I can move cameras and characters easily now, even with a 2.5-million-polygon scene. It's night and day from the nVidia GTX 1080 I had and still have.

I live a dream come true.

|

|

By The-any-Key - 7 Years Ago

|

|

Updated my list:

(Best times monitor 1920x1080 - frame size 3840x2160 - fullscreen - no bar)

[Calculated time to render a 10-minute scene at 24 fps]

| Card | VRAM | Price (USD) | Time | 10-minute scene |
|---|---|---|---|---|
| RTX 2080 TI GamingPro | 11GB | 1,348 | 01:30 | 15h |
| GTX 1080 TI mini | 11GB | 939 | 01:35 | 15h 50m |
| Titan XP | 12GB | 1,315 | 01:40 | 16h 40m |
| RTX 2080 | 8GB | 980 | 01:45 | 17h 30m |
| GTX 1080 TI | 11GB | 916 | 01:46 | 17h 49m |
| GTX 1080 TI | 11GB | 916 | 01:46 | 17h 49m |
| GTX 1080 | 8GB | 722 | 01:57 | 19h 30m |
| TITAN RTX | 24GB | 3,127 | 02:00 | 20h |
| RTX 2070 | 8GB | 679 | 02:01 | 20h 10m |
| GTX 1080 TI | 11GB | 916 | 02:14 | 22h 20m |
| GTX 1070 Laptop | 8GB | ??? | 02:42 | 27h 9m |
| GTX 1070 | 8GB | 517 | 02:51 | 28h 30m |
| RTX 2070 | 8GB | 679 | 02:54 | 29h |
| GTX 1060 | 6GB | 291 | 32:08 | 13d 9h 20m |
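The bracketed estimates can be reproduced with a short Python sketch. Most of the listed figures match the benchmark time multiplied by 600, which is what you get if the benchmark is treated as 24 frames (one second of footage). That frame count is an inference from the numbers, not something stated in the post, so it is left as a parameter. If your benchmark covered 60 frames, pass 60 instead. The function names are made up for illustration.

```python
def estimate_render_seconds(benchmark_seconds, benchmark_frames,
                            scene_minutes=10, fps=24):
    """Scale a short benchmark time up to a full scene, assuming
    render time grows linearly with the number of frames."""
    total_frames = scene_minutes * 60 * fps
    return benchmark_seconds * total_frames / benchmark_frames


def format_duration(seconds):
    """Format seconds as e.g. '13d 9h 20m' (seconds are dropped)."""
    seconds = int(round(seconds))
    days, rem = divmod(seconds, 86400)
    hours, rem = divmod(rem, 3600)
    minutes = rem // 60
    parts = []
    if days:
        parts.append(f"{days}d")
    if hours or days:
        parts.append(f"{hours}h")
    if minutes:
        parts.append(f"{minutes}m")
    return " ".join(parts) or "0m"


# 01:30 benchmark, assumed to cover 24 frames:
print(format_duration(estimate_render_seconds(90, 24)))  # 15h
```

The linear scaling is itself an assumption: it ignores one-off initialization time, so short benchmarks will tend to overestimate long renders.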

Maybe we should run a longer test at monitor 1920x1080 and frame size 1920x1080 for everyone, as mine was 0:35 and the Titan had 0:31. It may also depend on when we start the clock. I start as soon as I click the save button (this adds the init time). A somewhat longer test at monitor 1920x1080 and frame size 1920x1080 would maybe make the times more accurate.

|

|

By Kelleytoons - 7 Years Ago

|

|

argus1000 (3/1/2019)

With frame size 3840x2160 2 minutes

With frame size 1920x1080 31 seconds

..

Oh THANK GOD! -- compared to my own Titan XP, this performance is only so-so (CPP ratio very, very poor -- price would need to be like $1800 to be even slightly viable).

I can now rest easily knowing I'm just fine. Thanks! (At this point now I'm interested to see what nVidia does this fall, when they'll release their new cards. Hopefully we'll see some amazing things then).

|

|

By illusionLAB - 7 Years Ago

|

|

Hey T-a-k, I just ran the test again with the "Full screen optimize" etc. and the time for my RTX 2070 was 2:01 - which feels about right as its benchmark scores are usually neck and neck with the 1080ti.

|

|

By animagic - 7 Years Ago

|

The Titan RTX result is puzzling. It is worse than that of the GTX 1080 ti and on par with the RTX 2070... :unsure:

When testing, it is indeed important to reduce the screen resolution to 1920x1080. I have a 4K screen and reducing the screen resolution gave me better results.

|

|

By TheOldBuffer - 7 Years Ago

|

Hi guys. My first RTX 2080 Ti sadly died, so I decided the replacement had to fit into a more suitable home and I've built a new computer around it.

I've run both the benchmarks.

test 1 1920 x 1080 = 28.7 secs

test 2 3840 x 2160 = 1:22

I'm very happy with the results ;).

|

|

By eyeweaver - 7 Years Ago

|

|

The TITAN RTX 24GB results don't make any sense! Can someone explain?

|

|

By The-any-Key - 7 Years Ago

|

|

eyeweaver (4/19/2019)

The TITAN RTX 24GB results don't make any sense! Can someone explain?

It makes sense if you are a software developer. When you develop software you choose which hardware features to integrate into it: what resources the software can use and how to use them. This means that iClone doesn't use the GPU's resources in an optimal way. It can also be a bottleneck effect. Say you've got a good GPU but a bad CPU, a bad memory clock, 10000 processes running at the same time when you render, outdated drivers, errors in the OS... everything counts and can be a bottleneck.

|

|

By 3dtester - 7 Years Ago

|

It might be of interest what driver type was used.

Either the GRD (Game Ready Driver) or the CRD (Creator Ready Driver).

I expect the GRD to work better with iClone.

|

|

By eat_me_toyota - 7 Years Ago

|

|

Was scratching my head when it took my 2080 TI 1:32, but the monitor is 4K and the CPU is an i7 5930K. So I guess that's an expected result.

|

|

By argus1000 - 7 Years Ago

|

|

eyeweaver (4/19/2019)

The TITAN RTX 24GB results don't make any sense! Can someone explain?


I re-did the test. The first one was faulty. I have 2 computers, one with an nVidia GTX 1080 with 8 GB of VRAM, the other with the nVidia Titan RTX with 24 GB of VRAM. I rendered a 70 MB, 16-second file at 1920x1080.

nVidia GTX 1080 with 8 GB of VRAM: 16 minutes 30 seconds

nVidia Titan RTX with 24 GB of VRAM: 7 minutes 40 seconds

Makes more sense?

|

|

By eyeweaver - 7 Years Ago

|

Thank you so much Argus..

currently I have an Nvidia rtx 2080 11 gb card.. in your opinion.. is it worth upgrading to Titan RTX 24gb?

|

|

By argus1000 - 7 Years Ago

|

|

eyeweaver (5/3/2019)

Thank you so much Argus..

currently I have an Nvidia rtx 2080 11 gb card.. in your opinion.. is it worth upgrading to Titan RTX 24gb?

If you have the money, I would say yes. Absolutely. The main advantage for me is the ease of maneuvering. That 24 GB of VRAM does the trick. I can now handle scenes of even 4 million polygons with ease. My computer doesn't crawl to a stop anymore as it did when I had my nVidia GTX 1080 with 8 GB of VRAM. It's like being in heaven.

|

|

By eyeweaver - 7 Years Ago

|

Thank you so much Argus..

money is not an issue.. currently I'm doing fine with my RTX 2080 ti 11gb.. regarding maneuvering scenes.. but regarding the speed of the final video render.. is there a big difference in speed?

|

|

By argus1000 - 7 Years Ago

|

|

I've already posted the results of my test a couple of posts back.

|

|

By eyeweaver - 7 Years Ago

|

I did the benchmark for the loft scene.. 60 frames range.. 24 FPS .. 1080 video.. it took only 27 seconds..

card is RTX 2080 TI

my processor is AMD Ryzen 4 GHz 32 cores.. and RAM is 128GB..

am I doing the test right? or should I render the whole scene?

|

|

By Kelleytoons - 7 Years Ago

|

|

It's supposed to be the entire scene.

|

|

By StyleMarshal - 6 Years Ago

|

My benchmark test as described by the OP for the RTX 3090.

3840 x 2160 : 1,05 min

HD 1920 x 1080 : 19 sec

CPU : I9 14 cores

|

|

By Kelleytoons - 6 Years Ago

|

Yeah, because this thread has wandered (a bit) we need to make sure of terms.

The test is the entire scene, rendered at 24fps, to a frame sequence (individual PNGs) using iClone as full screen. You did the resolution right, did you also render the entire scene at 24fps to frames?

(And now I'm also wondering what effect your I9 has on this. Did you ever do the benchmark with your old video card in this same machine?)

|

|

By StyleMarshal - 6 Years Ago

|

Yes, 24fps, from 0 to 60. Image sequence, PNG.

Good question, unfortunately I never did a test with my old GPU.

|

|

By Kelleytoons - 6 Years Ago

|

Actually, the test is the entire sequence. So not just 60. Could you do it again with the entire sequence?

(This is a little confusing -- as I said, the thread wandered and folks were doing different tests. But the real test is the entire sequence).

|

|

By animagic - 6 Years Ago

|

|

I look forward to the results...:D

|

|

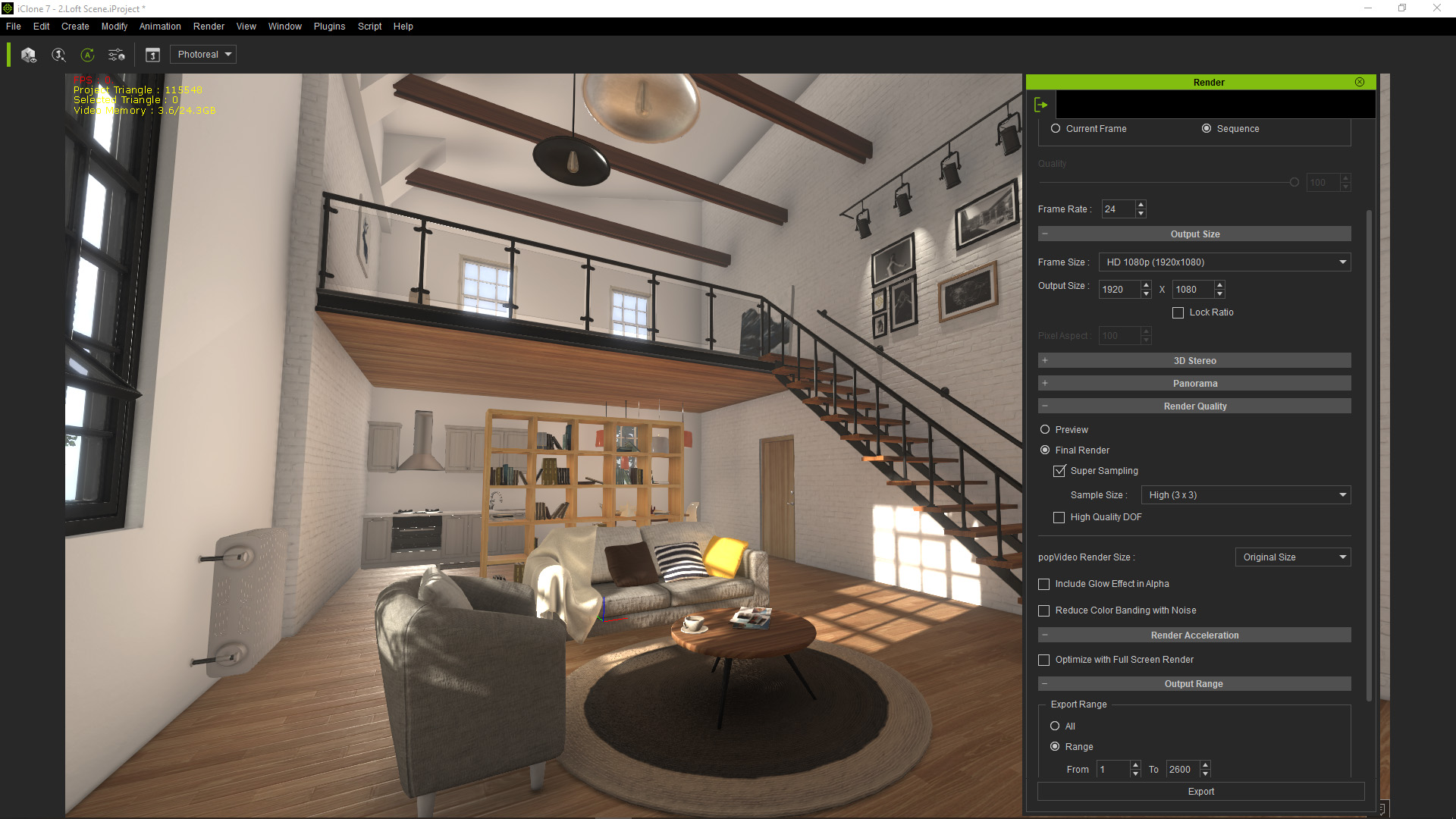

By StyleMarshal - 6 Years Ago

|

RTX3090 :

ok , did a render of the full scene in HD 1920 x 1080

24fps

Range :1 to 2600

4K monitor , I set the resolution to 1920 x 1080

Rendertime : 12.35 min

Without Supersampling : 6.08 min

I didn't test 4K yet. ( lazy) ;-)

|

|

By Kelleytoons - 6 Years Ago

|

Thanks, Bassline. That makes your 3090 about 40% faster than my Titan, which is a bit disappointing considering my Titan is two generations back. I would have hoped for at least double the speed (and some of that almost assuredly is due to your i9 versus my i7 -- we can't factor that out, unfortunately, but I would guess it adds at least 10-20% to the speed).

Now there's no question having double the memory is a Good Thing. Is it worth spending $1500 for, at least for someone like myself? Doubtful -- I rarely use even 10GB of the 12 that I have. Cutting my render times down 30% is not cost effective in my particular case (for someone without ANY graphics card the 3090 is certainly looking to be the one to get, though).

BTW, some folks float the theory that iClone will get "redesigned" to take advantage of this architecture. Don't hold your breath. They haven't done that for any new NVidia stuff for many years, and it's doubtful they ever will. They would have to completely redesign their renderer and that ain't gonna happen, I can promise you. So what we get now is what we will always have -- no one should be foolish enough to buy anything based on "possibilities".

(But, as I say, for someone without ANY decent GPU the 3090 looks to be the winner. For those with decent ones you need to evaluate the cost versus the memory and somewhat improved performance and decide what works for you).

Thanks Bassline, for the info (at least now I don't have to worry I can't find an 3090 to buy anywhere -- that turns out to be a Good Thing).

|

|

By StyleMarshal - 6 Years Ago

|

you are welcome , Mike :-)

For me the 24GB of RAM is the most important thing!

|

|

By Nick GlassB - 6 Years Ago

|

|

This has been an interesting read. Obviously I think we would all be straight out purchasing a 3090 if we could. I bought a GTX 1660 a while back, which I thought at the time looked ok for the money. I now think it's causing me problems since I brought Iray into the mix. It takes like 15 mins just to render the active preview. I'm thinking now about buying another card but am looking at possibly the 3070 or, at a push, the 3080. Just wondered if you guys had any thoughts on those or how best to proceed based on that sort of budget?

|

|

By animagic - 6 Years Ago

|

One important feature to look for is the amount of VRAM. You need at least 8GB, but more is better. With Iray, when the VRAM is filled up, the renderer switches to CPU and becomes much slower.

I bought a 1080Ti on eBay a few years ago, which has 11 GB. It is adequate, but I hit the limit sometimes, just for regular iClone scenes. I was lucky to get one that was only lightly used and it has been performing fine.

If I were to upgrade, I would get a 3090 because of the 24 GB VRAM. Of course, it is impossible to get one right now. In your case, I would probably get a 3080, which has 10 GB of VRAM.

What are the specs of your system?

|

|

By Nick GlassB - 6 Years Ago

|

Ryzen 7 3800x, 32gb ram, 250gb ssd, 1tb ssd dedicated to iclone. GPU seems to be my issue. Currently have a GTX 1660 6gb card which can't handle iray at all.

30 series cards are totally unavailable and it seems they will be until next year some time. Best I've found is a used 2080 Super with a 24-month warranty. I even thought about picking up a 1080ti on the cheap for the higher VRAM, then just grabbing a 3080 next year.

I need something to get me going because I'm hardly getting anywhere right now.

|

|

By animagic - 5 Years Ago

|

I now have an RTX 3090 GPU, having bought a PC with it (Alienware Aurora R12), so I thought it would be fun to repeat the benchmark test.

In accordance with how this test has developed, I rendered the full scene in 1920x1080 @24 fps under a few different conditions. The results are as follows:

| Settings | Render Time |
|---|---|
| 1920x1080 @24 fps, SS 2x2, full-screen option disabled | 07:27 |
| 1920x1080 @24 fps, No SS, full-screen option disabled | 04:02 |
| 1920x1080 @24 fps, No SS, full-screen option enabled | 06:17 |

The full-screen option takes longer, but in my case the quality is comparable with 2x2 super-sampling. I think these are nice results.

As a comparison, I did one of the renders with my other system, which has a GTX 1080 Ti:

1920x1080 @24 fps, No SS, full-screen option disabled 09:35
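To put the two like-for-like runs side by side (No SS, full-screen option disabled), a small Python sketch can turn the mm:ss times into a speedup factor. The helper names are hypothetical, and the comparison covers two whole systems, so CPU and other differences are mixed in with the GPU difference.

```python
def mmss_to_seconds(text):
    """Convert a 'mm:ss' string (seconds may be fractional) to seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + float(seconds)


def speedup(slow, fast):
    """How many times faster the 'fast' run is than the 'slow' run."""
    return mmss_to_seconds(slow) / mmss_to_seconds(fast)


# GTX 1080 Ti system (09:35) versus RTX 3090 system (04:02)
print(f"{speedup('09:35', '04:02'):.2f}x")  # 2.38x
```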

|