|

By TopOneTone - 6 Years Ago

|

https://www.youtube.com/watch?v=CFGGJoJjvyk

This is my latest addition to the Chuck Chunder series. I'm really enjoying working on these, and it has been a good way to force myself to learn iClone features and plug-ins that I previously wasn't using very often.

The big issue I am very conscious of at the moment is the lip sync. You can see from this video that, despite putting a lot of time into lining up the phonemes and adjusting mouth openings, it's still far from perfect. Recently, I had a project for a client that involved two characters in a 3-minute discussion; it took me nearly a week to correct the lip sync, and even then I felt it wasn't perfect. I'll confess lip sync has not been something I have paid a lot of attention to in the past, but I'm spending a lot of time on it now and am very conscious of its impact.

I have a couple of theories that I'd like to throw out there for comment, hopefully to stimulate some discussion on techniques for improving lip sync. Maybe Kai or someone could make it the focus of an advanced tutorial.

1) I'm finding the sound is marginally ahead of the animation, i.e. there seems to be a very slight delay between the voice and the lip movement. In my non-techie way I could rationalise that this will always be the case in an automated system, but I'm regularly having to adjust for it.

2) This delay seems to vary across the sound track, so sliding the sound track along the timeline to correct the first part of the speech may create a problem further on. I often end up splicing the sound file into smaller portions just to deal with this.

3) I often have to make changes to the phonemes. I guess this could be influenced by the tone, volume and speed of the voice, and these could also affect the two issues above.

4) The weirdest thing I am regularly encountering is that I am convinced the render process has an impact on the lip sync outcome. There are times when I can see a clear difference between the lip sync I am observing on screen in iClone and the rendered video. This becomes apparent in the process I use for creating the Chuck Chunder videos. I have a pre-recorded sound track, which I splice up to create the individual character voice tracks for the lip sync. I then take the rendered scenes and compile them together, replacing the individual voice tracks with the original complete mix sound track for the final edit, only to discover that various sections don't line up perfectly.

I'm not bagging iClone's lip sync, I just want to work out how to get a better end result than I'm currently getting. So if anyone can shed any light on what I'm encountering or offer tips or techniques to improve on my approach, I'd really appreciate it. Does anyone know if the lip sync system is likely to be developed in the future?

Cheers,

Tony

|

|

By Delerna - 6 Years Ago

|

I really don't know the answer, but I wonder if something I have heard about lip syncing singing might help? It might make it harder too.

Putting a singing voice on the character so he/she lip-syncs to the song doesn't work very well. I heard some comments from others stating the same thing and recommending that you use the singing as a background sound and record yourself speaking the song rather than singing it, to control the lip syncing better. I haven't tried it myself yet so I can't say it works, but it sounds reasonable. I guess it might even help you to time the lip syncing with the actual voice. I figure you can speak the words in a way that helps the lip syncing, even though it might not sound good. The actual performance plays in the background, so it sounds good but doesn't affect the lip syncing. Whether that will be too hard to do or not I don't know. It's just what I have heard from some other people.

|

|

By Delerna - 6 Years Ago

|

Mind you, I just finished watching it and I really enjoyed it.

Yes, I saw the lip sync issue you are talking about, but really I only took notice of it because you mentioned it.

If it wasn't for you saying that, I wouldn't have taken much notice of it and would still have enjoyed watching it. Maybe it's just me, but I love cartoon styles and don't expect to see perfection.

I'm not trying to say you shouldn't try to improve it. Just that I totally enjoyed it and the lip syncing didn't affect my enjoyment of it at all.

|

|

By Kelleytoons - 6 Years Ago

|

This might be a *touch* OT, but for really perfect lip sync there isn't any better program I've ever found (and I've used things costing $$$ -- even twice the cost of Faceware, which you'd think would be perfect for lip sync) than the freeware Papagayo. Of course, there's a catch -- it isn't designed for iClone.

What it does is analyze an audio track and generate the phonemes you need, but then allow you to easily (even a 10 minute scene takes but a minute or so) adjust them so they are perfect. It's designed for the Moho 2D animation program, exporting an ASCII file that that program loads up automatically. I've used it for broadcast television work and never had any complaints. Ah, but then how do you apply this ASCII file to iClone?

I was SO hoping that when the Python (add-on? feature?) came out we'd be able to program this, but since it appears they are basically crippling any abilities it will have, that clearly won't be possible (because if it was, I'd have it done within a day or two -- it would be my highest priority). Failing that, you could use it to manually adjust things (a PITA, but it *would* be accurate and it's basically what we had to do for broadcast work back in the day with very expensive track generators). At the very least everyone ought to download Papagayo just in case someday it goes away.

It's my fondest hope I live long enough for this to be automatically possible in iClone (I have SO many audio tracks recorded by professionals I need to animate -- would be amazing).
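For anyone who wants to use a Papagayo export as a manual keying guide, here is a minimal sketch of reading it. It assumes the Moho export is a plain text file with a one-word header line (commonly `MohoSwitch1`) followed by `frame phoneme` pairs; the sample data and the function name `parse_moho_switch` are made up for illustration, and nothing here talks to iClone.

```python
from typing import List, Tuple


def parse_moho_switch(text: str, fps: float = 24.0) -> List[Tuple[float, str]]:
    """Turn 'frame phoneme' lines into (seconds, phoneme) pairs.

    Assumption: each data line is '<frame number> <phoneme name>'.
    Anything else (blank lines, the header) is skipped.
    """
    cues = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 2 or not parts[0].isdigit():
            continue  # header or blank line
        frame, phoneme = int(parts[0]), parts[1]
        # Frame numbers are divided by the export frame rate as-is;
        # subtract 1 first if your export turns out to be 1-based.
        cues.append((frame / fps, phoneme))
    return cues


# Hypothetical sample, not taken from a real project.
sample = """MohoSwitch1
1 rest
7 MBP
9 AI
15 rest
"""

for seconds, phoneme in parse_moho_switch(sample, fps=24.0):
    print(f"{seconds:6.3f}s  {phoneme}")
```

The resulting list of times and mouth shapes could then be used as a cheat sheet while placing visemes by hand on the timeline.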

|

|

By TopOneTone - 6 Years Ago

|

Thanks Mate. Interesting suggestion; I'll have to try that out and see if it works.

Glad it didn't get in the way of your enjoyment.

Cheers,

Tony

Plenty more episodes of Chuck Chunder at:

YouTube: https://www.youtube.com/channel/UCGRNWyCMD7iPr4DqUNnHwgQ

Facebook: https://www.facebook.com/Chundertime/

|

|

By Delerna - 6 Years Ago

|

|

I would like to know if it does. At the moment I am working on creating a lot of props for my next Harry Potter video. Soon I will be starting on the video itself, and I am sure I will need to work on my lip syncing too. Knowing how that works will be helpful.

|

|

By Zeronimo - 6 Years Ago

|

I do not know what you think about it, but I think there is also a technical problem: iClone works at 60 frames per second and so generates the lip movements at that rate, but when we render at 30 fps we lose 50% of the frames.

This is especially visible when there are explosive syllables ('pa' 'pe') that are very fast lip movements.

Perhaps it should be rendered at 60 fps without compression when the movie contains dialogues.
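To put rough numbers on this, here is a small sketch of how many frames a very short lip closure covers at different frame rates. The 50 ms closure length is an assumed figure for illustration only, not a measurement from iClone.

```python
def frames_covering(duration_s: float, fps: float) -> float:
    """Number of frames (possibly fractional) that span a given duration."""
    return duration_s * fps


closure_s = 0.05  # assumed length of a quick 'p' lip closure, in seconds

for fps in (24, 30, 60):
    frames = frames_covering(closure_s, fps)
    frame_ms = 1000.0 / fps
    print(f"{fps:>2} fps: {frames:.2f} frames, each frame lasts {frame_ms:.1f} ms")
```

Under that assumption, a closure that gets about three frames at 60 fps gets only one or two at 30 fps, which is the effect described above.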

|

|

By Kelleytoons - 6 Years Ago

|

Actually less frames per second works better with fast dialog -- so the more explosive the sounds, the fewer the frames you need (most animation is done at 24 fps and looks terrific).

Having more frames just exposes the "wrongness" of the sync. Try rendering at 24fps and you might get a better match.

|

|

By Zeronimo - 6 Years Ago

|

|

Kelleytoons (7/10/2018)

Actually less frames per second works better with fast dialog -- so the more explosive the sounds, the fewer the frames you need (most animation is done at 24 fps and looks terrific).

Having more frames just exposes the "wrongness" of the sync. Try rendering at 24fps and you might get a better match.

I would have thought that more frames per second would give a better result, but if you say otherwise I assume you have tried it, so you must be right.

I'll have to try it next time.

|

|

By Kelleytoons - 6 Years Ago

|

It may work better for slow speech (or for *very* precise, slow movements of the face) but yes, far fewer frames works better with things like explosives.

Think of it this way -- if you want to see a "flash" (a "real" explosive :>) you really only want a frame or two at the most. More frames, even if in Real Life it might be that way, doesn't convey the speed. While things have changed quite a bit (used to be all films were done at 24fps, but with digital we are seeing more and more done at higher frame rates) it's still important for very quick things to be more of an impression than spelled out. So things like "p's" and "f's" will "read" better with just the smallest of frames -- the eye is fooled (remember, we are just fooling the eye anyway, due to persistence of vision).

And even Faceware reads better with less frames -- the guy that does all the tutorials here only does them at 30fps (he doesn't even use a webcam capable of higher rates). I've seen this firsthand myself and have now stepped back to that from the 60fps I was using.

|

|

By Rampa - 6 Years Ago

|

It's the amount of time the explosive takes, as KT has said. If you're playing back at 60 FPS, obviously you get twice as many frames in that same time, and thus it would be smoother.

The only video output format that can go higher than 30 FPS is AVI. It can also be lossless, which is nice if you need it. Image sequences can also be output at any frame rate you choose.

|

|

By Kelleytoons - 6 Years Ago

|

Actually, Rampa, smoothness is not what you want with those sorts of lip movements. It's really a trick (as is all animation and, indeed, any film or video itself, which always relies on persistence-of-vision effects). For the mouth to look "right" you want fewer frames -- as simple as that. Unless your lip sync is dead solid perfect (which no animation sync really can be) you will always be better off with fewer FPS.

But just try it and you'll see -- the mind's eye will "fill in the blanks" as long as the beats are correct. But the more frames the less you can get away with.

|

|

By thedirector1974 - 6 Years Ago

|

There are some things you should consider when using the lip sync feature of iClone.

First, you have to alter almost every viseme manually after they are created by analyzing the audio file. Even if you have perfect audio quality, the automatic lip sync is far from perfect. So you have to go from word to word. Delete all the visemes you don't need. You should only keep the "visible" letters. Do not try to reconstruct whole words; it won't work.

An example: If you have the word "woman", you keep "w", "m", "a" and, at the end, if you have time, "n". The letter "o" is almost silent and the mouth movement of "w" is not so different from "o".

Keep your viseme track clean. Use only the visible letters and use the "Expressiveness" value of each letter to increase or decrease the expressiveness of your character.
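The "woman" example can be sketched as a simple filter: drop any viseme whose mouth shape is essentially the same as the one kept just before it. The shape groups below are illustrative assumptions, not iClone's viseme set, and the timings are invented.

```python
# Hypothetical grouping of letters by how the mouth looks, for illustration only.
SHAPE_GROUP = {
    "w": "round", "o": "round", "u": "round",
    "m": "closed", "b": "closed", "p": "closed",
    "a": "open",
    "n": "tongue",
}


def keep_visible(visemes):
    """Keep a viseme only if its mouth shape differs from the previous kept one."""
    kept = []
    last_shape = None
    for time_s, label in visemes:
        shape = SHAPE_GROUP.get(label, label)  # unknown labels form their own group
        if shape == last_shape:
            continue  # visually redundant, e.g. "o" right after "w"
        kept.append((time_s, label))
        last_shape = shape
    return kept


# Invented timings for the word "woman".
woman = [(0.00, "w"), (0.06, "o"), (0.12, "m"), (0.20, "a"), (0.28, "n")]
print([label for _, label in keep_visible(woman)])  # → ['w', 'm', 'a', 'n']
```

This only mimics the decision for one word; in practice the choice of which letters are "visible" is made by eye while watching the character.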

Greetings, Direx

|

|

By K00L Breeze - 6 Years Ago

|

|

Kelleytoons (7/10/2018)

...So things like "p's" and "f's" will "read" better with just the smallest of frames -- the eye is fooled (remember, we are just fooling the eye anyway, due to persistence of vision).

And even Faceware reads better with less frames -- the guy that does all the tutorials here only does them at 30fps (he doesn't even use a webcam capable of higher rates). I've seen this firsthand myself and have now stepped back to that from the 60fps I was using.

Using 30fps, I blend each phoneme three frames before the actual sound and the result is spot on.

I wonder how the speech engine "misses" so many "p's, f's" and so on?
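As a worked example of that three-frame lead: at 30 fps, three frames is 0.1 s, so each viseme would start a tenth of a second ahead of its sound. A minimal sketch, with invented cue times and a made-up helper name `lead_visemes`:

```python
def lead_visemes(cues, frames_early=3, fps=30.0):
    """Shift each (seconds, label) cue earlier by a number of frames.

    Times are clamped at 0 so nothing lands before the start of the clip.
    """
    offset_s = frames_early / fps
    return [(max(0.0, t - offset_s), label) for t, label in cues]


# Invented cue times, in seconds, where each sound is heard.
heard = [(0.05, "B_M_P"), (0.50, "Ah"), (1.20, "F_V")]

for t, label in lead_visemes(heard):
    print(f"{t:.3f}s  {label}")
```

The same function covers other leads, for example `lead_visemes(heard, frames_early=2, fps=24.0)`.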

|

|

By justaviking - 6 Years Ago

|

It's strange, but after doing lip sync and viseme work in iClone, I find myself noticing how little people's lips actually "shape" themselves while talking.

When I clean up the visemes, and even when I think I get a good result, I believe I tend to put in too many "shapes." It's especially easy to want to add the "Ooo" lip shape, which (at least where I live) is not used a lot, especially not the upper lip. I also have to beware of too many (or too strong) "w" (whu) visemes.

It's funny after doing that work in iClone. Have you ever watched someone talk, and it looks "wrong"? If your avatar's lips moved as little as the real person's did, you'd probably think you're doing a horrible job. Have you ever thought a real, live person was out of sync? Everything gets strange when you stare at it too much, I guess.

Anyway, while the timing is important, you don't need to overdo the number of visemes. In fact, when I do "vocal-to-viseme" (my normal workflow) the first cleanup work I do is to delete anywhere from 1/4 to 1/2 of the visemes that iClone makes.
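One mechanical way to approximate that kind of thinning is to drop any viseme that follows the previous kept one too closely. This is only a sketch: the 0.08 s minimum gap is an arbitrary assumption, and the cleanup described above is done by judgment rather than by a fixed rule.

```python
def thin_visemes(cues, min_gap_s=0.08):
    """Drop cues that start less than min_gap_s after the last kept cue."""
    kept = []
    for t, label in sorted(cues):
        if kept and t - kept[-1][0] < min_gap_s:
            continue  # too close to the previous mouth shape to read on screen
        kept.append((t, label))
    return kept


# Invented auto-generated track: five visemes in a third of a second.
auto = [(0.00, "W_OO"), (0.03, "Oh"), (0.10, "B_M_P"), (0.12, "EE"), (0.30, "Ah")]
cleaned = thin_visemes(auto)
print(f"kept {len(cleaned)} of {len(auto)}:", [label for _, label in cleaned])
```

A pass like this would still need a manual check afterwards, since it can just as easily remove an important first mouth pose as a stray one.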

|

|

By 4u2ges - 6 Years Ago

|

|

justaviking (7/11/2018)

It's strange, but after doing lip sync and viseme work in iClone, I find myself noticing how little people's lips actually "shape" themselves while talking.

When I clean up the visemes, and even when I think I get a good result, I believe I tend to put in too many "shapes." It's especially easy to want to add the "Ooo" lip shape, which (at least where I live) is not used a lot, especially not the upper lip. I also have to beware of too many (or too strong) "w" (whu) visemes.

It's funny after doing that work in iClone. Have you ever watched someone talk, and it looks "wrong"? If your avatar's lips moved as little as the real person's did, you'd probably think you're doing a horrible job. Have you ever thought a real, live person was out of sync? Everything gets strange when you stare at it too much, I guess.

Anyway, while the timing is important, you don't need to overdo the number of visemes. In fact, when I do "vocal-to-viseme" (my normal workflow) the first cleanup work I do is to delete anywhere from 1/4 to 1/2 of the visemes that iClone makes.

This is very funny, Denis! Yesterday, I was reading this thread and started thinking: when was the last time I looked at people's lips while they were talking? Have I ever paid attention to lips while watching a movie?

Yes, I do get extremely annoyed if I watch a movie where audio and video are not synchronized (that happens if you download some poorly ripped/encoded video content). But if actors at least open and close their mouths in sync with the audio, would you really notice if they said "a" but it sounded like a "b"? You have to be extremely bored with the plot to start noticing lip movement. How about dubbed movies, where actors talk in a foreign language and you listen to a translation in English? Are you getting irritated? No! You are just watching the movie. Now imagine the opposite. Hollywood blockbusters are distributed all around the world. Millions of people are watching them dubbed. Do they care about visemes? No, they are enjoying the content.

So I agree with you. Proper visemes are probably important to some degree. But going crazy about it is nonsense.

|

|

By Delerna - 6 Years Ago

|

|

when was the last time I looked at peoples lips while they were talking?

Yes, as I said, while I watched the video I only really took notice of the lip syncing because it was mentioned in the OP.

Without that I wouldn't have taken much notice of it at all. Maybe if it was a lot worse but for me, in this video, it didn't look bad at all.

So yes I agree. Get the lip syncing reasonable but don't end up wasting time trying to make it perfect.

One thing I have noticed with my wife, and I think it's something we can all fall into as the person making something:

My wife makes cards, and she constantly shows them to me and points out all the faults that I didn't notice until she pointed them out.

And even then I really didn't see a problem with them. OK, not perfect, but not bad either.

Edit.

And yes, she does make really good looking cards. To me they look professional. So I'm not trying to say we don't need to make what we're working on look good.

|

|

By Kelleytoons - 6 Years Ago

|

The thing about the "too many visemes" is exactly what I was talking about when I said the less frames the better. You really don't need many frames and/or visemes (and boy do I wish we could use Python to implement Papagayo, which gets it *exactly* right).

Also, you find when doing a lot of lip-sync work that while folks are *very* quick to notice sync that's off, it's usually because the mouth is *behind* the sound. People open their mouths before sounds come out, so if the sound comes ahead of the mouth it looks completely wrong. But having the sound come a little late is just fine (as long as the mouth is still open before the sound ends). When I say "a little" we're only talking one or two frames at 24fps.

|

|

By sonic7 - 6 Years Ago

|

Interesting observation - the result of your past experiences with all things 'Audio Visual'....

You got me thinking because *another* aspect of this is that, as it happens, 'light' travels *way* faster than 'sound' - which reinforces your point about what would appear 'natural'. There'd be very little in it - but the visual sensation of light travelling from a mouth opening would actually be perceived ever so slightly *before* our perception of the sound from that mouth 'speaking' ...... interesting .....
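A quick back-of-the-envelope check of how little is "in it", assuming sound travels at roughly 343 m/s in room-temperature air. The listening distances are arbitrary examples.

```python
SPEED_OF_SOUND_M_S = 343.0          # approximate, dry air at about 20 °C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact by definition


def audio_lag_ms(distance_m: float) -> float:
    """How much later the sound arrives than the image, in milliseconds."""
    sound_s = distance_m / SPEED_OF_SOUND_M_S
    light_s = distance_m / SPEED_OF_LIGHT_M_S
    return (sound_s - light_s) * 1000.0


frame_ms_24fps = 1000.0 / 24.0

for distance_m in (1.0, 3.0, 15.0):
    lag = audio_lag_ms(distance_m)
    print(f"{distance_m:>4.1f} m: sound lags by {lag:5.1f} ms "
          f"({lag / frame_ms_24fps:.2f} frames at 24 fps)")
```

At conversational distances the lag is a small fraction of a frame; it only approaches a full frame at 24 fps when the speaker is well over ten metres away.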

|

|

By K00L Breeze - 6 Years Ago

|

|

sonic7 (7/11/2018)

...what would appear 'natural'.

Nail the first phoneme. (Mouth opening doesn't cut it for P's, F's, W's.... and so forth). Blend it with the "next"....nearest vowel.

Repeat this for the next "sound.".... Don't waste time pronouncing each and every word.

Nailing the sound (with correct starting phoneme) is what you want.

Fast speech is not that difficult.

Hit the first mouth pose and blend the.... "Accented Vowels."

What is of major concern to me is how the speech engine flat-out misses the first vital mouth pose of many words, i.e. P's, F's, W's and more.

|

|

By animagic - 6 Years Ago

|

It is instructive to do some lip-synching with FaceWare (if you have it), because then you'll indeed notice that a speaker's mouth moves far less than you would get when using iClone's lip-synching.

For some reason iClone's lip-synching has hardly evolved since it was first introduced (in iClone 2, I believe) and still shows inaccuracies that aren't really necessary if some work was put into it. I have given up pointing out the obvious to RL. There is an FT entry to improve lip-sync, but there haven't been that many takers. As has already been mentioned, most viseme assignments are incorrect and there are also too many. There is no reason to have visemes if there is no speech, and all those stray visemes give a cluttered impression on the timeline. RL has introduced some quick and dirty "fixes", such as smoothing, which help somewhat, but things should be better as not everyone can afford FaceWare.

3DTest (who did the FaceWare tutorials) has spent time analyzing lip-synching issues. One interesting find is that if you use a TTS voice in CrazyTalk the viseme assignments are correct, so it is possible to get it right. My assumption is that the text used to generate the speech in TTS is also used to assign the visemes. Text support for viseme assignment has been used in other lip-synching solutions. The one I'm aware of is Mimic (now included in DAZ), which I used for Poser many years ago. So improvement is attainable, it just doesn't seem to be a high priority for RL.

|

|

By Kelleytoons - 6 Years Ago

|

Ani is correct on all points here. I should point out that my own solution (of using Papagayo) also depends on a text read (it uses both audio and text -- the text assigns the correct phonemes, which are then aligned to the audio. That is why it is a perfect solution -- also moving them around is easy-peasy and even very long 10-15 minute sequences can be done in just a few minutes of work).

I'm still a *tiny* bit hopeful that one day the Python interface will expose the viseme track so that we can do the same thing in iClone, although the hope I'll be alive to see it has mostly faded.

|

|

By Delerna - 6 Years Ago

|

Tony.

I have shown this video to some of the people at work and there was lots of grinning and enjoyment. I have sent them the YouTube URL. If they make any comments to me I will share them with you.

|

|

By TopOneTone - 6 Years Ago

|

Thanks Graham, really appreciate you passing the link around.

I can confirm what Kelleytoons has said about 30 vs 60 fps: I rendered one episode at 60 fps and there was no noticeable improvement in the lip sync. I have experimented with Faceware, but I'm still having difficulty getting consistency. While it added more expressive facial movement, it really messed up some of the mouth movement as I was trying to blend it into existing visemes. I'll keep trying; I'm sure it will get better with practice.

I have also thought about using TTS to generate the lip sync and then replacing the soundtrack with the real voice recording, though I suspect this will also require a lot of adjustment.

It's great to see that, despite the fact lip sync doesn't get a lot of discussion on the forum, it is an area that people have issues with and would like to see improved, so thanks to everyone who has contributed to keeping this topic running.

Cheers,

Tony

|

|

By K00L Breeze - 6 Years Ago

|

If you use Faceware Analyzer and Retargeter, you don't have this problem.

It's very accurate.

|

|

By pruiz - 6 Years Ago

|

|

Not trying to be cute or controversial or stupid, but do you mean 'plosive' (versus explosive), or am I misunderstanding? Which I am wont to do with every day of aging. (Charles de Gaulle called old age a 'naufrage', which is hard to translate exactly but means, more or less, shipwreck.) Maybe we are talking about an explosive situation in an animation?

|

|

By TopOneTone - 6 Years Ago

|

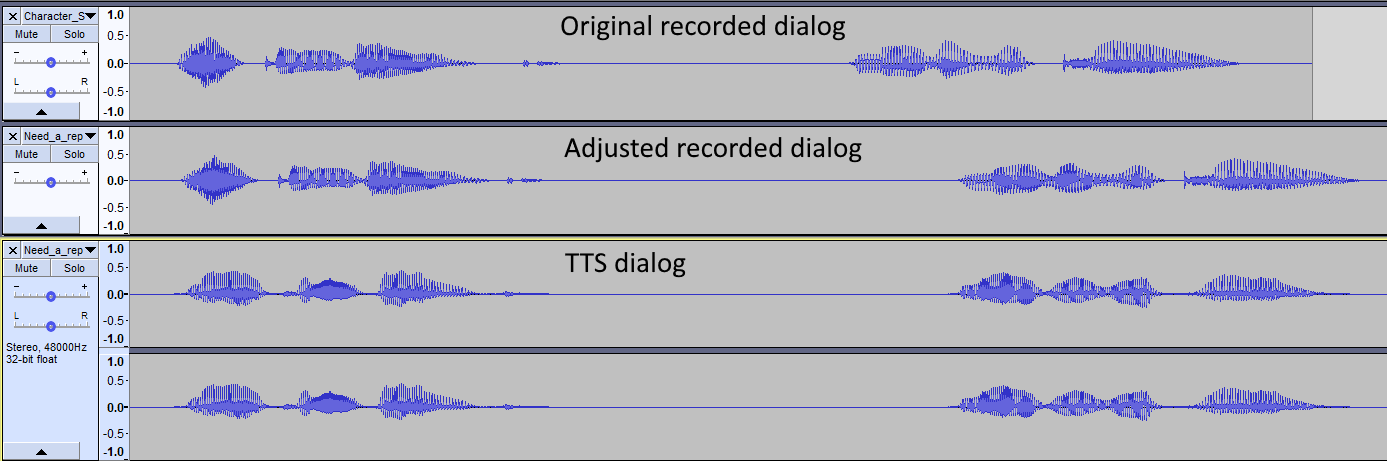

I created this test to compare TTS vs audio to drive the lip sync - typed in the script for the left face and used an audio recording for the right face. Then I realigned as best I could the TTS viseme track to match the audio viseme track, given that the pace was obviously different and less even in the live audio recording. After rendering, I replaced both audio tracks with the original audio file. There is a 2nd take in the video which merely has the right face mouth opened up more than in the first take, but it had little impact.

The first thing I noticed was that the blink rate changed between the faces - no idea why?

More importantly, to me it appears that the left face looks smoother and more consistent, and makes me believe that the left face is actually talking. Without making any changes to the phonemes on either face, it would appear that TTS delivers a more accurate lip sync. I know it's a one-off rough test, but I wasn't expecting as good a result as I got from TTS, so I'm going to keep trying this approach to see whether it's worth the inconvenience of typing a whole script.

I did want to try the same comparison using Faceware and the pre-recorded sound track, but I couldn't work out how to get it into the plug-in other than using the "what u hear" option, which did not work very well at all. Can anyone tell me how to import a sound file into the Faceware iClone plug-in?

Cheers,

Tony

|

|

By Delerna - 6 Years Ago

|

I have tried doing lip syncing through TTS. I don't know if this is what you tried. Just saying it here to assist.

I find when using TTS that spelling the words as they sound usually works better than spelling the words properly. I can't think of the name for that process.

One more thing. I can't remember if this was mentioned by the people who recommended using Audacity to record the speaking for the lip syncing, or if it's something I have thought of myself.

If you wear headphones and listen to the actual speaking while you record yourself speaking into a microphone, then you can get the timing between the actual speaking and your speaking pretty even.

Also, your speaking needs to be done in a way that improves the lip syncing rather than in a way that sounds good, similar to what I mentioned about spelling words for TTS. The primary thing here, I think, is that it's easier to get the timing right. Although I can only speak in theory because I haven't tried this myself yet.

Maybe the others who have done it can give some tips on it?

|

|

By Delerna - 6 Years Ago

|

|

Just googled the spelling of words for sound. Phonetic Spelling

|

|

By animagic - 6 Years Ago

|

I have to amend what I stated earlier: you now also get correct visemes when using TTS in iClone. That is an "improvement" since IC6. For that version you needed to use CT8 to get correct visemes, which was an extra step.

I haven't really tried to do this myself to see if it's possible to come up with a feasible procedure.

|

|

By ckalan1 - 6 Years Ago

|

Here is an example of lip sync that I did today. It is not perfect but I think with a bit more tweaking it could look pretty good.

If anyone is interested in how I did this let me know.

Craig

|

|

By Delerna - 6 Years Ago

|

|

POST TOPIC :- Lip Sync Improvement...Any Tips or Suggestions

So I'm sure Tony will be interested

The video isn't very long, so I find it hard to see how good it really is.

The last part is what I noticed most, and it looked good. The first part just happens too quickly after I start the video for me to notice it well.

Maybe it's just that my eyes are too slow.

Yes please, I'm interested.

|

|

By ckalan1 - 6 Years Ago

|

Sorry it is so short. The first thing I suggest doing is to watch this video. This is how this animator does it with some different tools than iClone. But the general principles are the same. iClone just has a different set of tools.

1. Record a video of the actor performing the dialog. If you don't have a video of the actor you can record yourself and use that as a reference. I use a 16x9 aspect ratio. You will have to sync your performance to the original audio if you don't have a video of the performance.

2. I create a plane and place the reference video on the plane. This will give you a video that can play both picture and sound.

3. Import the character you want to animate.

4. Import the audio (from the video that you are going to use as the reference). If the dialog is audio only, you will have to sync it to the video reference. Go to the Animation tab and select Create Script under the Facial section. Select Audio File and import the audio file. This will create the audio track that you will slide around to sync with the dialog on the video.

5. Open the timeline then select the character and then open the Viseme track and expand it so you can see both the Voice and the Lips tracks.

6. Select all the items on the Lips track and delete them. (You might want to copy the entire Viseme track and save it after the end of the project.)

7. Try to control the playback from the timeline as much as possible. Play the section you are working on to get a feel for how the reference video actor moves their mouth. Pay special attention to where they open and close their mouth.

8. You will also notice that the spoken words (the Visemes) won't always have a direct correlation to the phonemes. You will sometimes have to pick letters from the Lips Editor that look most like the reference video.

9. Move the cursor slowly down the Timeline. Find the places where the mouth closes all the way. Place "None" in those places. Locate the apex of the open mouth before it starts closing again. I typically use "Ah" and place it on the Timeline whenever the mouth opens wide. Scale the Expressiveness based on how wide the mouth opens.

10. Now go through the video and place the "B_M_P" visemes. This is easy because each one is placed right after a "None".

11. You will have to listen for the rest of the sounds and watch the reference video to determine where the rest of the Visemes should be placed. I listen for one type of phoneme at a time and go through the list of Visemes and place them at the appropriate place on the timeline.

12. Tweak the expressiveness to correspond to the video.

13. Manipulate the character with Face Puppet then Motion Puppet. (Don't overdo it)

14. Play it back and tweak it some more. Practice practice practice.

I know this is a lot of work, but I believe iClone is getting to the point where it will very soon be possible to get professional results.

I hope this helps.

Craig

|

|

By kungphu - 6 Years Ago

|

|

Very interested! Was this with FC?

|

|

By ckalan1 - 6 Years Ago

|

It was done manually. I just posted how I do it.

I hope this is useful.

Craig

|

|

By TopOneTone - 6 Years Ago

|

Many thanks Craig, very interesting. I was originally thinking that Faceware was a way to get a visual reference, the way you have used the live video as a guide for mouth movement, but I'm still struggling to get Faceware to run smoothly and I can't work out how you can use a pre-recorded soundtrack with it, even though I'm sure I've read stuff that says you can. Anyway, I'll try your approach and see how I go.

Have to say for a subject that doesn't get much discussion on the Forum, there have been lots of really interesting contributions to this Post so far.

Cheers,

Tony

|

|

By Kelleytoons - 6 Years Ago

|

|

TopOneTone (7/14/2018)

and I can't work out how you can use a pre-recorded soundtrack with it, even though I'm sure I've read stuff that says you can.

Tony,

You can't just straightforwardly use a pre-recorded soundtrack with Faceware -- IOW, there's no magic button to feed such a thing into the system. You *can* use pre-recorded video (and even live video, which I've covered in a tutorial somewhere) but I suspect what you are thinking about is what others have done like myself, namely do a "lip sync" version of the audio track. So when I wanted to do a song (a pre-recorded song) I set up Faceware and then on my other monitor I played the song while I sang along to the folks singing. Thus my own lips were "synced" as much as I could lip-sync to the song.

You could do the same for any audio track, but the results will only be as good as you are at syncing yourself (the song I did was WAY too fast for me to get completely accurate, although it looks good if I do say so myself).

Here's an example (another example, not the song I first did this on) where I synced the chorus (again, just by singing along with the real singers). It's easier with a song, of course, because lyrics are simpler and easier to remember, but you can do this with a pre-recorded track if you rehearse (and do a few takes):

|

|

By ckalan1 - 6 Years Ago

|

Thanks for sharing your music video. Lots of fun to watch.

Craig

|

|

By animagic - 6 Years Ago

|

I have been playing with the procedure originally proposed by 3DTest. It is similar to Mike's suggestion using Papagayo, in that the visemes are generated with text support, which gives a much more accurate result.

Assuming that you have some recorded dialog, you then proceed as follows. (You have to be comfortable with audio editing to do this.)

1. Select a short section of the recorded dialog, such as a few sentences. This is to make lining up the audio with the visemes easier.

2. In iClone, select your character, and generate a TTS speech script for the dialog, using iClone's built-in TTS lip-synch generator. Set the speed so that it matches the speed of the recorded dialog as well as possible. This will give you a viseme track with correct visemes.

3. Export the audio of the TTS dialog (in iClone, select Render => Video => WAV).

4. For the next step, you need an audio editor like Audacity (which is free).

5. In Audacity, load the recorded dialog fragment in one track and the TTS audio in a second track.

6. The length of the audio portions (disregarding silences) should be about the same. If it is not, regenerate the TTS dialog while adjusting the speed.

Now, instead of moving visemes to match the recorded dialog, I adjust the recorded audio to match the TTS dialog. The recorded dialog will then match the visemes.

7. To continue, line up the recorded dialog with the TTS dialog. This may mean adjusting pauses or adjusting the length of certain fragments.
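The length comparison in step 6 can also be checked numerically rather than by eye. The following is only a rough sketch, not part of the procedure itself: it assumes both clips have already been loaded as mono samples scaled to the range -1.0 to 1.0, and the function name, threshold, and window size are all made up for illustration.

```python
def voiced_seconds(samples, sample_rate, threshold=0.02, window=0.02):
    """Total duration (in seconds) of the non-silent parts of a clip.

    samples     -- mono samples as floats in [-1.0, 1.0] (assumed input format)
    sample_rate -- samples per second
    threshold   -- peak level below which a window counts as silence (a guess)
    window      -- analysis window length in seconds (a guess)
    """
    win = max(1, round(sample_rate * window))
    voiced = 0
    for start in range(0, len(samples), win):
        chunk = samples[start:start + win]
        # A window counts as speech if any sample in it exceeds the threshold.
        if max(abs(s) for s in chunk) > threshold:
            voiced += len(chunk)
    return voiced / sample_rate


# Synthetic stand-ins for the recorded dialog and the TTS export.
recorded = [0.0] * 500 + [0.5] * 1000 + [0.0] * 500
tts = [0.0] * 200 + [0.5] * 1200 + [0.0] * 200

ratio = voiced_seconds(tts, 1000) / voiced_seconds(recorded, 1000)
print(f"TTS speech is {ratio:.2f}x the length of the recorded speech")
```

A ratio well away from 1.0 suggests regenerating the TTS dialog at a different speed before attempting any alignment.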

This perhaps sounds complicated, but it's not hard. Here is an example screen grab:

You can adjust pauses by either deleting silence or adding it (using Generate => Silence, for example). You can change the length of fragments by using Effect => Change Tempo. This is for Audacity, but there should be similar commands in other audio editors.
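For working out what numbers to type in, a small calculation helps. This sketch assumes that the percent value in a tempo effect is a change in playback speed, so that the new length equals the old length divided by (1 + percent/100); please check that against your editor. The function names are made up for illustration.

```python
def tempo_percent_change(current_seconds, target_seconds):
    """Percent tempo change that turns a fragment of current_seconds
    into one of target_seconds.

    Assumes new_length = old_length / (1 + percent / 100), so a positive
    result speeds the fragment up and a negative one slows it down.
    """
    if current_seconds <= 0 or target_seconds <= 0:
        raise ValueError("durations must be positive")
    return (current_seconds / target_seconds - 1.0) * 100.0


def pause_adjustment(recorded_pause, tts_pause):
    """Seconds of silence to add (positive) or delete (negative) in the
    recorded track so its pause matches the TTS pause."""
    return tts_pause - recorded_pause


# Recorded phrase lasts 4.0 s, the matching TTS phrase lasts 5.0 s:
print(round(tempo_percent_change(4.0, 5.0), 2))   # negative: slow the recording down
# Recorded pause is 0.8 s, TTS pause is 0.5 s:
print(round(pause_adjustment(0.8, 0.5), 2))       # negative: delete some silence
```

Large tempo changes tend to be audible, so if the result is big it is probably better to regenerate the TTS dialog at a different speed instead.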

I'm used to audio editing, and I have looked at speech waveforms as part of my studies, so I have an advantage. It doesn't have to be super precise, but you want to make sure to align the beginnings of phrases and the already mentioned plosives and other short sounds.
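If you are less used to reading waveforms, the beginnings of phrases can be roughly located in code. This is a simplified sketch under my own assumptions (mono samples scaled to -1.0 to 1.0, a fixed loudness threshold, and a phrase defined as sound following at least 0.2 seconds of silence); the names are hypothetical and real speech will need the numbers tuned.

```python
def phrase_onsets(samples, sample_rate, threshold=0.02, min_silence=0.2):
    """Times (in seconds) where sound starts after a long enough silence."""
    min_gap = round(sample_rate * min_silence)
    onsets = []
    silent_run = min_gap  # treat the start of the clip as preceded by silence
    for i, s in enumerate(samples):
        if abs(s) > threshold:
            if silent_run >= min_gap:
                onsets.append(i / sample_rate)
            silent_run = 0
        else:
            silent_run += 1
    return onsets


def onset_offsets(recorded_onsets, tts_onsets):
    """How far each recorded phrase must move to land on its TTS phrase
    (positive = move later). Pairs phrases in order; extras are ignored."""
    return [t - r for r, t in zip(recorded_onsets, tts_onsets)]


# Synthetic clips at 1000 samples per second, two phrases each.
recorded = [0.0] * 300 + [0.5] * 200 + [0.0] * 400 + [0.5] * 100
tts = [0.0] * 250 + [0.5] * 200 + [0.0] * 500 + [0.5] * 100

print(phrase_onsets(recorded, 1000))
print(onset_offsets(phrase_onsets(recorded, 1000), phrase_onsets(tts, 1000)))
```

The offsets only tell you where the phrase starts disagree; plosives and other short sounds inside a phrase still need checking by ear and eye.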

Here is a short video (my internet upload speed is slow, so I'm only doing a short sample):

There was one viseme I had to change, but the others were fine. I found it tricky to add facial expressions, so that required some further tweaking.

|

|

By ckalan1 - 6 Years Ago

|

The real challenge is to make the lips move in an asymmetrical manner. Being able to add in snarls, lip curls, deformations and various mouth shapes to correspond to the emotions is what will make iclone avatars come alive. The eyes and forehead deformation plays a big part in making the lip sync aesthetically pleasing.

My next little project is to figure out the best way to bring facial morphs from DAZ to iclone.

Craig

|

|

By animagic - 6 Years Ago

|

|

ckalan1 (7/14/2018)

The real challenge is to make the lips move in an asymmetrical manner. Being able to add in snarls, lip curls, deformations and various mouth shapes to correspond to the emotions is what will make iclone avatars come alive. The eyes and forehead deformation plays a big part in making the lip sync aesthetically pleasing.

My next little project is to figure out the best way to bring facial morphs from DAZ to iclone.

Craig

There are now a lot more facial morphs in iClone, so if you use CC characters that would be easier. The Facial Key option makes these available, for example.

|

|

By doubblesixx - 4 Years Ago

|

I read through this thread hoping to find a better answer. Another post made me curious about how this all works, so I started to investigate. But when I load an audio file, I find it's off by about 8 to 10 frames (counting from the initial transient; the bulk of the sound starts maybe another 2 frames later), so it is between 8 and 12 frames early. It gets tedious to compensate for this and get a working result when what you see is not what you get. I find this useless.

One (crappy) workaround could be to use a third-party app like PureRef: take a screenshot of the audio file zoomed out and lay it on top of the current visual representation to get a reference to place the phonemes against. But how hard would it be to add a time-shift function in iClone so the visual and audio would always be in sync? This would help tremendously.
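To put numbers on that kind of time shift, here is a tiny sketch of the arithmetic. It is not an iClone feature or API: the key times are assumed to be a plain list of seconds you have noted down yourself, the frame rate is whatever your project uses (30 below is only an example), and the function names are made up.

```python
def frames_to_seconds(frames, fps):
    """Convert a frame count to seconds at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frames / fps


def shift_keys(key_times, offset_seconds):
    """Return key times moved by offset_seconds (negative = earlier)."""
    return [t + offset_seconds for t in key_times]


# Example: an observed offset of 8 to 12 frames, taking 10 as the middle value.
offset = frames_to_seconds(10, 30)       # frame rate is an assumption
phoneme_times = [0.50, 1.00, 1.75]       # hypothetical key times in seconds
print(shift_keys(phoneme_times, -offset))
```

Whether the offset should be added or subtracted depends on which way the display is off in your project, and since the drift reportedly varies along the track, one global offset may only fix part of it.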

As I see it now, knowing the limitations, other tools like the one Mike is using (Papagayo) seem to be the only option.

|

|

By Kelleytoons - 4 Years Ago

|

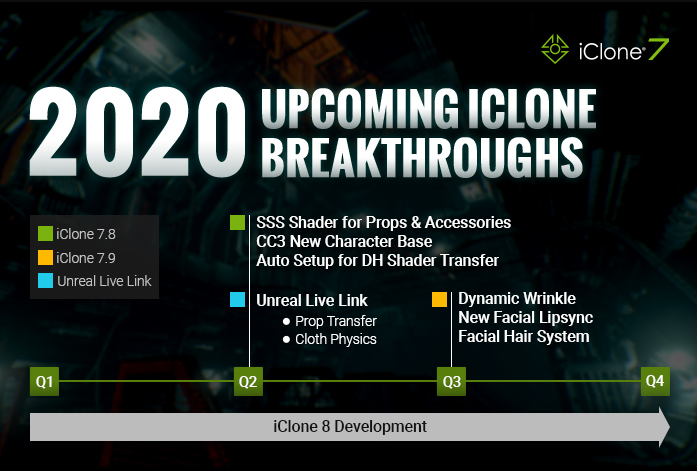

The good news is this will be improved -- we know they are working on a much better system and IIRC it *should* be before the end of the year (but I'm old and don't necessarily remember -- search here for the timeline and you might find more details).

So -- if you have patience you will be rewarded.

|

|

By toystorylab - 4 Years Ago

|

New Lipsync is planned for Q3...

Enhanced Facial Lip-sync (iClone 7.9)

- A highly accurate viseme generation based on voice detection will be added (supports English, French, German in the first release)

- Facial animation can be driven by audio strength for different animation styles

- Enhanced smooth lip algorithm for co-articulation.

|