
By wildstar - 6 Years Ago


If, like me, you dream of producing movies for Netflix, here are some technical requirements your product must meet to be evaluated by the Netflix team.

1 - Full 4K resolution: 4096 × 2160

2 - 16-bit color depth (bye, iClone render)

3 - 240 Mbit/s H.264 compression (minimum) at 24 fps

4 - You must have two versions of your movie: an uncompressed version at 16-bit color depth without color correction, and a compressed version as described in item 3.
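For a sense of scale, here is a back-of-the-envelope sketch of what items 1 to 4 imply for data rates. It assumes three RGB channels with no alpha or chroma subsampling, and decimal megabits; these are assumptions for illustration, not part of the list above.

```python
# Back-of-the-envelope data rates for the delivery spec listed above.
# Assumptions: 3 colour channels (RGB), no alpha, no chroma subsampling,
# decimal units (1 Mbit = 1,000,000 bits).

WIDTH, HEIGHT = 4096, 2160   # item 1: full 4K
BITS_PER_CHANNEL = 16        # item 2
CHANNELS = 3                 # assumed RGB
FPS = 24                     # item 3
H264_MBPS = 240              # item 3: minimum H.264 bitrate


def uncompressed_bits_per_frame(w, h, channels, bits):
    """Raw size of one frame, in bits."""
    return w * h * channels * bits


def uncompressed_mbps(w, h, channels, bits, fps):
    """Raw data rate, in megabits per second."""
    return uncompressed_bits_per_frame(w, h, channels, bits) * fps / 1_000_000


raw_mbps = uncompressed_mbps(WIDTH, HEIGHT, CHANNELS, BITS_PER_CHANNEL, FPS)
h264_bits_per_frame = H264_MBPS * 1_000_000 / FPS

print(f"uncompressed 16-bit: {raw_mbps:,.0f} Mbit/s")
print(f"H.264 budget per frame: {h264_bits_per_frame / 1_000_000:.0f} Mbit")
print(f"compression ratio: roughly {raw_mbps / H264_MBPS:.0f}:1")
```

On these assumptions the uncompressed master runs at roughly 10 Gbit/s, about 42 times the H.264 deliverable.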

So... let's produce!

PS: Unity can export rendered frames in real time, at any resolution, with 32-bit color depth.


By TonyDPrime - 6 Years Ago


Thx for the topic and info!

Hey - Make a show about rendering! Unity, Unreal, Octane, iClone, 3DS Max and Daz Iray, Marmoset...

I would watch that all day long.

Or...You could even have me as a guest on a discussion panel about rendering, I would go on, and on, and on, and on, and on, and on, about it....

It would fill up a whole 13 shows, easy!


By wildstar - 6 Years Ago


TonyDPrime (5/24/2018)

Thx for the topic and info!

Hey - Make a show about rendering! Unity, Unreal, Octane, iClone, 3DS Max and Daz Iray, Marmoset...

I would watch that all day long.

Or...You could even have me as a guest on a discussion panel about rendering, I would go on, and on, and on, and on, and on, and on, about it....

It would fill up a whole 13 shows, easy!


By Kelleytoons - 6 Years Ago


You could still use iClone for your renders -- render to stills (as you should be doing anyway for any pro work), and in your video editor just output at 16-bit color depth (again, what you should be doing).


By illusionLAB - 6 Years Ago


The spec calls for 16 bits for dynamic range, which is the only way to get glows without banding, or textures that can hold up to colour grading. So converting 8-bit files to 16-bit in an editor/compositor is not going to fly: 8-bit files have only 256 steps from black to white, whereas 16-bit files have 65,536.
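A minimal sketch of the banding argument, assuming a simple 10x contrast stretch on the darkest 10% of the range stands in for a colour grade (real grades are more complex). It quantizes the same dark ramp at 8 and 16 bits, grades it, and counts how many distinct 8-bit output shades survive:

```python
# Sketch: why 8-bit renders band under grading and 16-bit ones don't.
# Assumption: a 10x contrast stretch of the darkest 10% of the range
# stands in for "colour grading".


def quantize(value, bits):
    """Round a 0..1 value to the nearest code value at the given bit depth."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


def graded_levels(source_bits, samples=100_000, stretch=10.0):
    """Quantize a dark ramp at source_bits, stretch its contrast, and count
    how many distinct 8-bit display values come out the other end."""
    out = set()
    for i in range(samples):
        v = (i / (samples - 1)) * 0.1        # smooth ramp over the darkest 10%
        stored = quantize(v, source_bits)     # what the render file holds
        graded = min(stored * stretch, 1.0)   # push the shadows up 10x
        out.add(round(graded * 255))          # final 8-bit display value
    return len(out)


print("8-bit source :", graded_levels(8), "distinct output shades")
print("16-bit source:", graded_levels(16), "distinct output shades")
```

With an 8-bit source the stretched ramp collapses into a couple of dozen widely spaced shades (visible bands); the 16-bit source still fills every output level.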

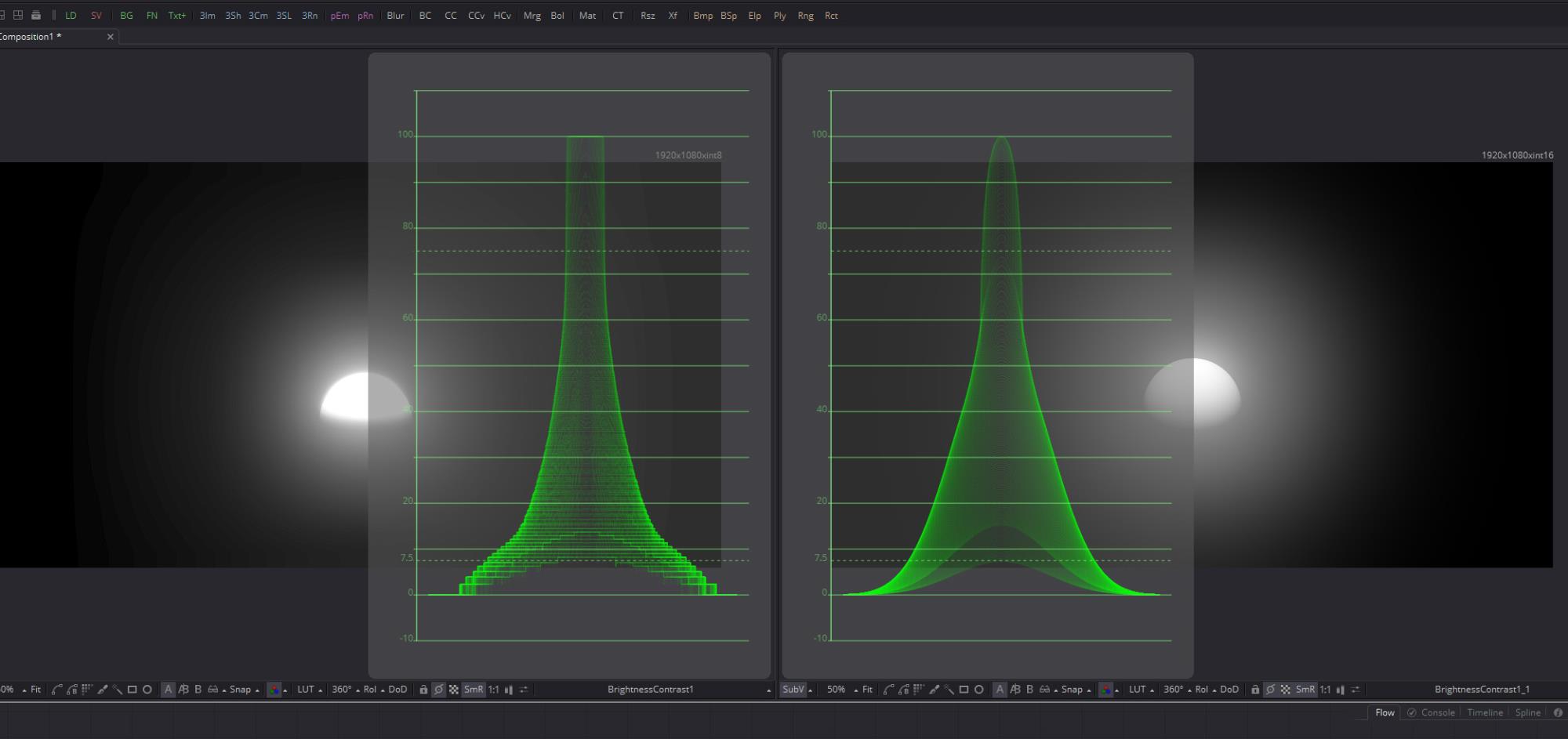

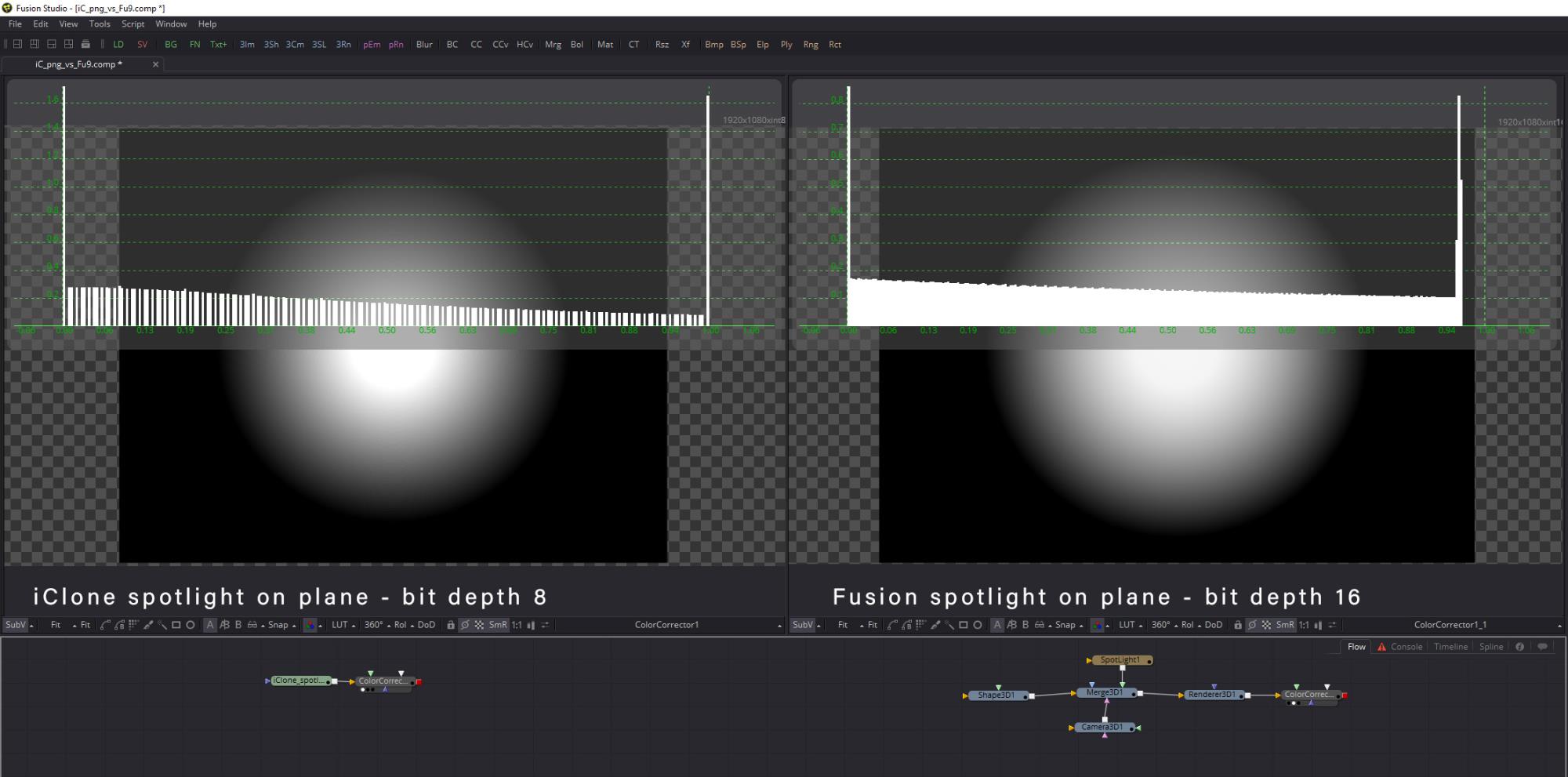

The glow sphere on the left is iClone and the sphere on the right is Fusion (both have had some gamma boost to illustrate how quickly 8-bit shows its shortcomings). So, if you convert an 8-bit render to a 16-bit file... all you get is a bigger file with the same image.
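The "bigger file, same image" point can be checked directly. This sketch assumes the usual exact scaling from 8-bit to 16-bit codes (multiply by 257, so 255 maps to 65535):

```python
# Sketch: "upconverting" 8-bit to 16-bit rescales code values but cannot
# invent the in-between shades that were never rendered.
# Assumption: the exact 0..255 -> 0..65535 mapping (multiply by 257).


def to_16bit(code8):
    """Map an 8-bit code value to the equivalent 16-bit code value."""
    return code8 * 257          # 255 * 257 == 65535, so white stays white


eight_bit = list(range(256))                    # every possible 8-bit shade
converted = [to_16bit(c) for c in eight_bit]
gaps = {b - a for a, b in zip(converted, converted[1:])}

print("distinct shades before:", len(set(eight_bit)))
print("distinct shades after :", len(set(converted)))
print("gap between neighbouring shades after conversion:", gaps)
print("shades a true 16-bit render can use:", 1 << 16)
```

The converted file still holds only 256 shades, now spaced 257 codes apart, out of the 65,536 a native 16-bit render could use.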


By Kelleytoons - 6 Years Ago


But it doesn't matter -- Netflix won't know (and won't care) how it was produced, only what it looks like. As long as it conforms to their specs they have no way of knowing how it was made.


By illusionLAB - 6 Years Ago


Not true. I have experience with Netflix and Amazon TV series/films, and their technical requirements are as stringent as any broadcaster's. The QC department would spot an 8-bit file converted to 16-bit in a few seconds (as I was illustrating with the waveforms).


By wildstar - 6 Years Ago


illusionLAB is right. This is one of the reasons I am so happy with Unity: I have a powerful, full-featured renderer (realtime GI, decent AO, decent anti-aliasing at no render-time cost, screen-space reflections, perfect DOF, etc. etc. etc.), and when I turn on EXR output in Unity, BINGO! Unity gives me 32-bit color at any resolution. Unity only tells me: "Hey, put in a decent hard drive and I'll give you full 60 fps render output, bitch!"


By Kelleytoons - 6 Years Ago


Considering Netflix themselves upscale SD video to show, I'd be skeptical of this.


By Dr. Nemesis - 6 Years Ago


If I was making something that I knew was gonna end up on Netflix I think I'd IRAY that ish anyway.

I've seen some things on Netflix that I doubt were legit 16bit colour.

Red Vs Blue used to be on it (dunno if it still is).


By illusionLAB - 6 Years Ago


I'm pretty sure we were talking about "new content". If they pick up a series that was shot in SD analogue in B&W, then they are bound by the "legacy". New content must be created to meet their spec unless the capture medium is part of the show, i.e. cell phone vids, CCTV, police cams, etc. Obviously, they have poetic license (and do exercise it), but when it comes to a CG series you are up against studios like DreamWorks.


By Dr. Nemesis - 6 Years Ago


illusionLAB (5/24/2018)

I'm pretty sure we were talking about "new content".

Well, if we're shifting goalposts, I might as well point out that iClone's shortcomings in this regard aren't really a problem. The number of iClone films that have the length and production quality to end up on Netflix can be counted on one hand, if they exist at all.

Examples to the contrary are of course welcome.


By planetstardragon - 6 Years Ago


Good post, ty for sharing.

I did a quick search and found this site for more information.

https://partnerhelp.netflixstudios.com/hc/en-us/articles/217237077-Production-and-Post-Production-Requirements


By Dr. Nemesis - 6 Years Ago


Now this is useful. I don't think the issue is "new content". Netflix Originals have one set of requirements, licensed materials have another. This makes a lot more sense.

https://partnerhelp.netflixstudios.com/hc/en-us/categories/202282037

All those who're in a deal with Netflix to make an original series raise your hand.

Everyone else, your dreams remain intact as you'll see their requirements for licensed content are not as stringent as in the OP.


By animagic - 6 Years Ago


It doesn't make much money, but I have sent in one of my shorts to Amazon Video Direct (AVD) and it was accepted. It is streamed through Amazon Prime Video or on demand (I guess). The access page is here.

AVD is specifically for shorts, and the technical specifications are such that they are attainable with iClone (which I used for rendering). The format is HD (1920 x 1080) without anything specific about color as far as I can tell.

More about the video specifications here, if interested.


By Famekrafts - 6 Years Ago


Really, guys, how many of you are actually making a series to be aired on Netflix?

If you have the budget, money, and manpower to do so, why not use other tools like Maya, Houdini and Arnold to give you the best quality, accepted worldwide?

Yes, iClone can be used for creating a cartoon animated series, but is it really worth it unless you are crowdfunded or have some funds to do so?

Unity and Unreal are great, guys, but I would stay away from a game engine for animation work; it has too many distractions and requires coding at some level.

If you're already using game engines, that's great, but if not, focus on iClone rather than trying to learn a game engine.

This thread raises another technical issue that Reallusion should note down for future updates, so let's move on.


By TonyDPrime - 6 Years Ago


This topic made me think of Netflix, which I love. It also made me think that maybe we are touching on something that is never really discussed: the technical side of professional production.

One thing I would be interested in, too, is the audio component: do they need a separate audio file, or can it be part of the video file, etc.?

Because maybe KT is right that if they see value in the project, they may not care what was used, only how it looks. Maybe they just tell people that to make them work harder for no reason, so that they can then say to themselves,

"Look!...we made them work harder....FOR NO REASON..."

On the flip side, I understand the need for strict requirements on products you are in business relationships with. Maybe this is something RL could develop for iClone users in iC8:

Professional Quality Output. (ie - bit-depth, etc.)


By animagic - 6 Years Ago


@Tony: to answer your question about audio: for Amazon Video Services it is embedded with the video and can be either stereo or 5.1 surround. Details can be found on the page I gave a link to. I had a long-time interest in sound before I started doing animation, and in my mind there is no excuse for bad audio.

@Famekrafts: I think it is useful to discuss distribution options, especially for short films, which is what most of us are doing.


By planetstardragon - 6 Years Ago


One thing that's always been in the back of my mind is to become good enough with music videos to eventually string them all together into an animated musical, in the spirit of the cult animated classic "Heavy Metal" but for dance music, with newer animation styles. I just liked how they mixed sci-fi stories with music, basically turning it into a sci-fi musical: my 2 fav subjects, lol.


That's a long way off / in the making, but it's a goal I use to keep me wanting to get better at animation and learning new stuff and not just mindlessly making eye candy for new music I release. Looking at stats like these allows me to add a little more to my process of making stuff so if ever I do eventually make a movie, I've developed the right habits and usable content along the way.


By justaviking - 6 Years Ago


@PSD - I really enjoyed Heavy Metal when it came out. I actually purchased TWO soundtracks from it. One was the "obvious" heavy metal music, and the other was "Heavy Metal - The Score." It is surprising how much classical music is in that movie. When I see parts of the movie now, all these years later, it doesn't captivate me as much as I always expect it to. So even though I enjoy it less now, it still holds a special place in my memories. Some scenes are quite memorable, and I really had to show my youngest son the opening scene (convertible coming in for a landing) after SpaceX launched the Tesla Roadster into space.

I did my "Pinhead - Kidnapped!" project by using a lot of music, all from Trans-Siberian Orchestra. In that project, I worked to incorporate the LYRICS into the storyline. It was a very fun and very challenging project. I actually got permission to use their music; that was a very fun email to receive. It wasn't really a direct inspiration initially, but I quickly realized that project was very similar to the movie, "Mamma Mia" which used ABBA music in much the same way.


By Famekrafts - 6 Years Ago


Well, Blender 2.8 is coming with a real-time engine. That will really help, and hopefully I can use both iClone and Blender for rendering in real time.

https://code.blender.org/2018/03/blender-2-8-highlights/

https://code.blender.org/2018/03/eevee-f-a-q/


By raxel_67 - 6 Years Ago


Looking at the Eevee test, the only thing I truly envy from that engine is that it is 95% flicker-free. RL really needs to fix flicker in iClone, because under certain circumstances flicker is a real pain. iClone needs a more consistent calculation of light and shadows, because the difference between one frame and the next is sometimes too much, and sometimes flicker occurs with normal lighting, not GI. Settings such as supersampling just don't do the job. Flickering needs to be addressed ASAP.


By justaviking - 6 Years Ago


On the Eevee "Indoor" scene... can one of our resident "DOF Experts" comment on the DOF there? I'm not that "sensitive" to it yet, but I noticed the close-up napkins were out of focus and the flowers were not (T=0:18), so I was wondering how it fares on the "quality assessment."


By Famekrafts - 6 Years Ago


justaviking (5/27/2018)

On the Eevee "Indoor" scene... can one of our resident "DOF Experts" comment on the DOF there? I'm not that "sensitive" to it yet, but I noticed the close-up napkins were out of focus and the flowers were not (T=0:18), so I was wondering how it fares on the "quality assessment."

I am no expert on DOF and I am not going to comment on how it was calculated, not to mention that 2.8 is not released yet and I find the build very buggy. The key thing is that none of the videos above look like they were done in iClone. They look professional overall.


By Famekrafts - 6 Years Ago


raxel_67 (5/26/2018)

Looking at the Eevee test, the only thing I truly envy from that engine is that it is 95% flicker-free. RL really needs to fix flicker in iClone, because under certain circumstances flicker is a real pain. iClone needs a more consistent calculation of light and shadows, because the difference between one frame and the next is sometimes too much, and sometimes flicker occurs with normal lighting, not GI. Settings such as supersampling just don't do the job. Flickering needs to be addressed ASAP.

Agree with you. Blender has a seed button in the render panel. It can randomize the noise pattern for each frame, thus reducing the overall flicker in the animation.


By benhairston - 6 Years Ago


2.8 does look extremely promising, to the point where I could see doing all the animation in iClone, since I'm more familiar with those tools, and exporting to Blender as a finishing tool. I've been circling Blender for ages, but it looks like this is the time to dive in and investigate.

It's still early in development, so I think it's too soon to judge the DOF until it's stable enough for production. It still looks better than iClone, though...


By Famekrafts - 6 Years Ago


benhairston (5/27/2018)

2.8 does look extremely promising, to the point where I could see doing all the animation in iClone, since I'm more familiar with those tools, and exporting to Blender as a finishing tool. I've been circling Blender for ages, but it looks like this is the time to dive in and investigate.

It's still early in development, so I think it's too soon to judge the DOF until it's stable enough for production. It still looks better than iClone, though...

My only issue with Blender was the rendering time: a good-quality composited scene, especially with render layers, used to take one hour per frame. With Eevee that is going to change. I already use Blender for modeling anyway.


By TonyDPrime - 6 Years Ago


Looking at this I see what already exists in Unreal Engine as far as graphics go. (which can make us hopeful that the 'tech' will come natively to iClone one day too....)

But where I think an iCloner will prefer the Blender route is that you can probably import cameras from iClone into Blender.

...in Unreal, you have no ability to do this (import a camera), and it kills some of UE4's productivity, as nice as it is...

@Wildstar - does Unity allow importing camera in an easy way, or is it


By TonyDPrime - 6 Years Ago


Hmmm...looking at what people have accomplished with Daz and iClone to Unity. The models in Unity look pretty bad actually.

Maybe there is a high render speed, but unless you are going some toonish route, I would say, strictly as a cinematic renderer, it may not be worth it, unless you are willing to take the visual hit.

I am finding Unreal is not all it's cracked up to be with standard models either. (Siren is a very complex mesh-model, so that is a different story, as she is a mega complex mesh-scape...)

But I'm talking your top Daz G3 or G8 Daz model....they look flat in Unity, somewhat better in Unreal.....

My opinion. or as we say in Deutsche, MEIN OPINIONSHERTZPILLECHTER.


By wildstar - 6 Years Ago


One of the important points in this post is 32-bit color. None of the realtime solutions I have tested offer 32-bit color output (Toolbag 3 and Unreal included); I don't know about Eevee. But using a realtime engine that is only just being released, instead of an engine with 10+ years of experience like Unity or Unreal, is not very smart in my opinion; I am tired of "beta" tests or whatever. About DOF: any realtime solution on the market today has better DOF than iClone (sad but true). The C4D R19 realtime viewport has better supersampling and DOF masks than iClone.

Toolbag 3 has better DOF, and so do Unity and Unreal, so Eevee having better DOF than iClone is not an advantage. You can import iClone cameras into Unity, but the control is limited, because the camera animation is exported as an FBX clip and inside Unity you can't fine-tune it (it's locked), so it's a little complicated. Unity has Cinemachine, which is much more powerful for camera work. To tell you the truth, Cinemachine is amazing; I am in love with it. And Unity forces you to use Cinemachine, because it is the only way to animate camera effects like DOF, bloom, etc. Today Unity is the only realtime solution that offers 32-bit color output, and that's why mid-sized studios use it to produce animated series for VOD systems.


By benhairston - 6 Years Ago


Wildstar, what's the asset conversion process like for Unity? I tried using Unreal for a bit, but it was pretty laborious to get things converted properly. Seems like Unity has improved greatly since I last looked at it...

I'm with you 100% on 32-bit color. I want to be able to stay in a linear color space from import all the way to the final render.
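For anyone unsure why staying linear matters, here is a sketch using the standard sRGB transfer functions (IEC 61966-2-1). It is generic colour math, not Unity's or iClone's implementation: a 50/50 blend of black and white gives a noticeably different result depending on whether it is done on gamma-encoded values or on linear light.

```python
# Standard sRGB transfer functions (IEC 61966-2-1), used to compare a blend
# done on gamma-encoded values with the same blend done in linear light.


def srgb_to_linear(c):
    """Decode an sRGB-encoded value (0..1) to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(l):
    """Encode a linear-light value (0..1) back to sRGB."""
    if l <= 0.0031308:
        return l * 12.92
    return 1.055 * l ** (1 / 2.4) - 0.055


black, white = 0.0, 1.0  # sRGB-encoded pixel values

# Naive 50/50 blend done directly on the encoded values.
gamma_blend = (black + white) / 2

# Same blend done on linear light, then re-encoded for display.
linear_blend = linear_to_srgb((srgb_to_linear(black) + srgb_to_linear(white)) / 2)

print(f"blend in gamma space : {gamma_blend:.3f}")
print(f"blend in linear space: {linear_blend:.3f}")
```

The linear blend comes out around 0.735 rather than 0.5, which is why lighting, blur and glow maths done on gamma-encoded values tends to look too dark at the edges.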


By wildstar - 6 Years Ago


Unity supports FBX, and today it has a very clever way of dealing with the FBX variations that exist. How to import? Drag and drop. Another thing that made me use Unity instead of Unreal is the fact that the user can choose between UV0 and UV1. If you try to bake lighting in a scene made for DAZ, for example, you will have many problems with the light bake in Unreal, which does not happen in Unity. Today there are three things that made me fall in love with Unity: the precomputed GI (I only do a light bake if I really need to), Cinemachine, and 32-bit color for rendering. And I'm not even talking about the post-processing stack (it's wonderful).


By wildstar - 6 Years Ago


TonyDPrime (5/28/2018)

Hmmm...looking at what people have accomplished with Daz and iClone to Unity. The models in Unity look pretty bad actually.

Maybe there is a high render speed, but unless you are going some toonish route, I would say, strictly as a cinematic renderer, it may not be worth it, unless you are willing to take the visual hit.

I am finding Unreal is not all it's cracked up to be with standard models either. (Siren is a very complex mesh-model, so that is a different story, as she is a mega complex mesh-scape...)

But I'm talking your top Daz G3 or G8 Daz model....they look flat in Unity, somewhat better in Unreal.....

My opinion. or as we say in Deutsche, MEIN OPINIONSHERTZPILLECHTER.


By wildstar - 6 Years Ago


I am not using the HD Render Pipeline in my projects because it is in "preview", and I found some bugs when using it to render frames with Post Processing Stack v2.


By TonyDPrime - 6 Years Ago


wildstar (5/28/2018)

TonyDPrime (5/28/2018)

Hmmm...looking at what people have accomplished with Daz and iClone to Unity. The models in Unity look pretty bad actually.

Maybe there is a high render speed, but unless you are going some toonish route, I would say, strictly as a cinematic renderer, it may not be worth it, unless you are willing to take the visual hit.

I am finding Unreal is not all it's cracked up to be with standard models either. (Siren is a very complex mesh-model, so that is a different story, as she is a mega complex mesh-scape...)

But I'm talking your top Daz G3 or G8 Daz model....they look flat in Unity, somewhat better in Unreal.....

My opinion. or as we say in Deutsche, MEIN OPINIONSHERTZPILLECHTER.

Yeah, it looks cool...but what don't they show. They show a human hand, and then rock-tree guy. I want to see an imported Daz model or iClone figure in that trailer, head on, looking awesome like the rest of it!

I mean, I could post UE4's Siren right back and say, "Look how awesome!...". But that means nothing...

I am talking about Daz and iClone models in UE4 and Unity. iClone avatars moved to UE4 or Unity look like garbage compared to what the engines do with environments. And I agree, the lighting looks amazing.

But I think it's a limit yet of our theoretic workflow....the iClonian avatars....Unless they are hyper super complex, they look bad.

I think Daz and iClone actually have shown they do a much better job with them, probably because they are native to them (Daz and CC2 avatars).


By wildstar - 6 Years Ago


TonyDPrime (5/28/2018)

wildstar (5/28/2018)

TonyDPrime (5/28/2018)

Hmmm...looking at what people have accomplished with Daz and iClone to Unity. The models in Unity look pretty bad actually.

Maybe there is a high render speed, but unless you are going some toonish route, I would say, strictly as a cinematic renderer, it may not be worth it, unless you are willing to take the visual hit.

I am finding Unreal is not all it's cracked up to be with standard models either. (Siren is a very complex mesh-model, so that is a different story, as she is a mega complex mesh-scape...)

But I'm talking your top Daz G3 or G8 Daz model....they look flat in Unity, somewhat better in Unreal.....

My opinion. or as we say in Deutsche, MEIN OPINIONSHERTZPILLECHTER.

Yeah, it looks cool...but what don't they show. They show a human hand, and then rock-tree guy. I want to see an imported Daz model or iClone figure in that trailer, head on, looking awesome like the rest of it!

I mean, I could post UE4's Siren right back and say, "Look how awesome!...". But that means nothing...

I am talking about Daz and iClone models in UE4 and Unity. iClone avatars moved to UE4 or Unity look like garbage compared to what the engines do with environments. And I agree, the lighting looks amazing.

But I think it's a limit yet of our theoretic workflow....the iClonian avatars....Unless they are hyper super complex, they look bad.

I think Daz and iClone actually have shown they do a much better job with them, probably because they are native to them (Daz and CC2 avatars).

You're totally wrong, and I'm going to say why. There is nothing wrong with the characters made in Daz Genesis or in iClone Character Creator. (In the case of Character Creator, as I already mentioned here, the body's UV unwrap was very poorly done, but I worked around this by painting a new 4K texture map using the same Genesis 8 textures on Character Creator bases.) Having used and tested all those engines with iClone and Genesis characters, I see that in iClone the textures get worse: you need 4K textures to make it acceptable, while in Unity, for example, 1024 already looks very interesting. The problem is that people test Unity and think it's bad because by default its quality settings are really poor. There are a number of things that need to be done when you want to work with quality in Unity; changing the color space from gamma to linear is one of them, among several others. Want a character that looks good in Unity? What about real-time SSS? Apparently all engines today have real-time SSS, and Reallusion makes us think SSS is only possible with Iray. I'm finishing 5 minutes of animation fully rendered in Unity, using CC bases as I do in all my projects, and I can tell you that the detail and quality are well above iClone, Toolbag 3, or even the C4D realtime viewport (the engines I had been considering for my project until then).


By TonyDPrime - 6 Years Ago


Hear that, iClone? Unity is going to Bury you....

Bury you in its GPU real-time Ray Tracing Lightmapper!

But, I'm just playing. I am not picking on Unity, I'm including UE4 too, which I have been using a lot lately.

I want to see better results in Unreal at this point, I just think it looks worse than Daz Iray raytrace, for example. And it doesn't really come close, to be honest.


By wildstar - 6 Years Ago


TonyDPrime (5/28/2018)

Hear that, iClone? Unity is going to Bury you....

Bury you in its GPU real-time Ray Tracing Lightmapper!

But, I'm just playing. I am not picking on Unity, I'm including UE4 too, which I have been using a lot lately.

I want to see better results in Unreal at this point, I just think it looks worse than Daz Iray raytrace, for example. And it doesn't really come close, to be honest.

I will not list all the points and reasons that make Unity more viable for rendering truly professional work; I'm already tired of talking about all this in this forum. I really would rather not have to export all my work done in iClone to an engine that "theoretically" should have the same quality because it uses IBL + PBR. As I said here before, render "features" are not enough for anyone who really wants to work professionally in animation. Reallusion has not understood that yet. I think you already have.


By wildstar - 6 Years Ago


Does your girl have mustaches?


By TonyDPrime - 6 Years Ago


wildstar (5/28/2018)

Does your girl have mustaches?

YES. And that is the beauty of what you can do in iClone....grit...

This is exactly what I'm saying, Wildstar, you can get this from iClone, and of course Daz, with the avatars - realism.

In UE4, it's a bit plastic-y. Like the render you made in Octane: they look plastic-y, toonish. And believe me, I love it. And I know Unity can replicate that look.

I mean, have you seen a Daz model that looks good in Unity or UE4? probably not, right?

If you did, you would have sent me the link/vid/pic, LOL!....

Because from iClone, in Unity/Unreal they look like, well, what they are: game characters.

So, color space or not, Netflix qualifications or not, I just haven't seen anything look Dazzish, or iClonish even, from UE4 and Unity...when it comes to iClonian avatars.

Maybe Netflix wouldn't accept a format that iClone can produce. But...that format, as far as Unity goes, will only give you plasticy characters, from iClone.

Not fresh lively ones, with all their brilliant humanity intact.

By the way, nice going...


By TonyDPrime - 6 Years Ago


Okay...so I talked it over with this avatar.

And surprisingly, she told me that she wants me to try and render her outside of iClone.

So, I said fine, I'll put you in UE4 and we'll make you look great in there.

But she said, she wants not just that...

She wants to be rendered in...

UNITY 18.1!

So!...I'm going to render her in Unreal, and Unity, in addition to iClone.

That's right....she wants a 3-way!!!


By planetstardragon - 6 Years Ago


#justsayin...


By sonic7 - 6 Years Ago


@Tony .... >>> ".... So!...I'm going to render her in Unreal, and Unity, in addition to iClone.... " <<<

Well that sure will be very interesting .... A direct comparison !

(Don't forget to 'log' the *render times* too Tony)


By TonyDPrime - 6 Years Ago


sonic7 (5/29/2018)

@Tony .... >>> ".... So!...I'm going to render her in Unreal, and Unity, in addition to iClone.... " <<<

Well that sure will be very interesting .... A direct comparison !

(Don't forget to 'log' the *render times* too Tony)

I will actually have to log the frustration time of doing a scene. Unity has a new Shader Graph, which is like a scaled-down version of Unreal Engine's material editor. I like this.

But what sux is every export I do from iClone to Unity fails to export textures, for some reason. No idea why.

But then, you can't modify a texture after importing...What?....Like, it's locked into the FBX, so guess what....you have to recreate the material anyway!....YEY!!!!!

What is this Alembic style workflow....

A lot of work to achieve a toon. Which is what I AM FIGURING OUT.

You want real, you have to BUY FROM UNITY ASSET STORE...THEN YOU HAVE GOOD.

They are made by Maya Masters...But, do I look like a Maya Master?! I can't even design in CC, let alone use the Curve Editor...Oh..Forget it, I have NO IDEA.

Okay, but from iClone...I-CLONE- if you bring in your own character - FROM I-CLONE - get ready for that Barbie-thing Planetstardragon showed.

(BTW - that is a great character, I want that morph in CC.)

This Netflix-Unity-Powerhouse better be worth it. I am going to show this thing no mercy!....

NO MERCY!


By TonyDPrime - 6 Years Ago


I think the one thing everyone can agree on is that iClone has a weaker production spec output.

And, the answer to iClone's weaker production spec output is a Stronger iClone Production Spec Output....(specs like Wildstar has been talking about).


By 45thdiv - 6 Years Ago


This is an interesting thread to follow. I appreciate the links to the Amazon and Netflix requirements.


By yoyomaster - 6 Years Ago


Interesting thread indeed, my two cents!

I have been eyeballing Netflix for some time now; I even contacted a company that deals with them. The criteria are not as tight as one might think. That said, you need decent output.

As for Eevee, it has been in development for over 2 years now, and with the current Code Quest, Blender 2.8 should be in beta in July. The Blender Institute just switched to 2.8 for their current animated short. Eevee supports 8- and 16-bit output, SSS, IBL lights, DOF, volumetrics including smoke and fire, and much more. Not to mention the new Fracture modifier and Mantaflow, which should be included in it, and the list goes on. On top of that, it is 100% free, and it has a HUGE community and hundreds of free tutorials!

iClone + Blender 2.8 (Eevee) would be a good match, IMHO!


By TonyDPrime - 6 Years Ago


YoYoMaster made me think about this...

I wonder if some of the hold off on updating iClone's PBR has to do with them implementing Iray. For example, it would be a big mess if they tweaked iClone's PBR, but then it did not translate well over to Iray...it would screw their marketing of automatic conversion.

Things like volumetrics and SSS might be held back, in theory, until Iray is up and running and they have a beat on what could get ported over automatically, or how to shunt what shouldn't get ported over to the Iray renderer.

@YoYoMaster, or anyone Blender-familiar - what is the material conversion process going to blender from iClone like, smooth and easy...or rocky and painful?

Also, anyone: is iClone's output 8-bit right now? As in, does anyone know exactly what the iClone output spec is?


By yoyomaster - 6 Years Ago


TonyDPrime (5/29/2018)

@YoYoMaster, or anyone Blender-familiar - what is the material conversion process going to blender from iClone like, smooth and easy...or rocky and painful?

Blender has a Disney shader (the Principled Shader), which is pure PBR and is the same shader used by Eevee. I did some tests and it went very smoothly. If the texture files are named properly, you can even automate the process, at least for the most part!
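A minimal sketch of that kind of automation, assuming a hypothetical naming convention where the text after the last underscore identifies the map (check what your exporter actually writes). It only sorts the files; actually creating the Blender nodes would go through `bpy`, which is left out here:

```python
# Sketch: sort exported texture files by a naming suffix so they can be
# wired to Principled BSDF inputs automatically.
# ASSUMPTION: the suffixes below are a hypothetical convention, not what any
# particular exporter is guaranteed to write.
import os

# Hypothetical suffix -> Principled BSDF input name.
SUFFIX_TO_INPUT = {
    "diffuse": "Base Color",
    "basecolor": "Base Color",
    "metallic": "Metallic",
    "roughness": "Roughness",
    "normal": "Normal",   # in Blender this usually goes via a Normal Map node
}


def classify_textures(filenames):
    """Return ({shader input: filename}, [files with no recognised suffix])."""
    mapping, unmatched = {}, []
    for name in filenames:
        stem = os.path.splitext(os.path.basename(name))[0]
        suffix = stem.rsplit("_", 1)[-1].lower()   # text after the last "_"
        target = SUFFIX_TO_INPUT.get(suffix)
        if target is None:
            unmatched.append(name)
        else:
            mapping[target] = name
    return mapping, unmatched


files = ["Shirt_Diffuse.png", "Shirt_Normal.png", "Shirt_Roughness.jpg", "Shirt_AO.png"]
mapping, unmatched = classify_textures(files)
print(mapping)
print("needs manual hookup:", unmatched)
```

Files with no recognised suffix (like the AO map here) come back separately so they can be hooked up by hand.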


By illusionLAB - 6 Years Ago


If they commission a series, the spec is a negotiation point. If they license a series, they get what they get. Ultimately it's "content" they are interested in... We all love South Park, which was actually originally animated with Maya; the simplicity of the style is deliberate and not a limitation of the chosen platform. 3D animation is a trickier medium than 2D, as things like aliasing, moiré, flickering and colour banding are pretty much universally seen as "technical limitations". And let's face it... aspiring to meet "minimum standards" is not really the best way to approach a project. Ultimately, you are creating a visual story... but then so is your competition.

How about this analogy... a builder said to me yesterday, "When I build a wood fence I use 6-inch posts instead of 4-inch... digging the holes costs the same, so a few extra bucks gets a way stronger fence!" If you're going to put 100 hours into animating your story... then surely it's worth 100 hours of rendering time?
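To put the rendering-time argument in numbers, here is a small worked sketch. The 5-minute film at 24 fps is an assumed example (it matches the 5 minutes of animation mentioned earlier in the thread), as is the "one hour per frame" Blender figure quoted earlier:

```python
# Worked render-budget arithmetic. Assumed example: a 5-minute film at 24 fps.


def frame_count(minutes, fps=24):
    """Number of frames in a film of the given length."""
    return round(minutes * 60 * fps)


def seconds_per_frame(render_hours, minutes, fps=24):
    """Time each frame may take if the whole film must render in render_hours."""
    return render_hours * 3600 / frame_count(minutes, fps)


frames = frame_count(5)
print("frames in 5 minutes:", frames)
print("per-frame budget for 100 hours:", seconds_per_frame(100, 5), "seconds")
print("total at one hour per frame:", frames, "hours")
```

So 100 hours buys about 50 seconds per frame, while an hour per frame would need 7,200 hours (300 days) on a single machine.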


By illusionLAB - 6 Years Ago


iClone's output 8-bit right now, as in, anyone know what exactly is the iClone output spec

As iClone can use .HDR files, the "engine" must be at least 16-bit, most likely 16-bit half float. I very much doubt we'll ever see anything but 8-bit PBR renders from iClone's realtime engine... which is why implementing an Iray workflow will solve a whole bunch of limitations, quite possibly offering 16-bit output as well (although 99% of us will be using 8-bit textures, 16-bit would help with lighting, especially banding on the glows).
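To illustrate what half float buys, this sketch uses Python's `struct` "e" format (IEEE 754 binary16) to compare it with 8-bit integer storage. Whether iClone's engine actually works this way is illusionLAB's inference above; the sketch only shows the format itself:

```python
# Sketch: 16-bit half float versus 8-bit integer storage.
# struct's "e" format code is IEEE 754 binary16 (half precision).
import struct


def to_half(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_8bit(x):
    """Store a value as an 8-bit 0..255 code, clipping anything outside 0..1."""
    return round(min(max(x, 0.0), 1.0) * 255)


def half_values_in_unit_range():
    """Count non-negative half-float bit patterns whose value lies in [0, 1]."""
    count = 0
    for bits in range(0x8000):                    # sign bit clear: +0.0 upward
        value = struct.unpack("<e", bits.to_bytes(2, "little"))[0]
        if value <= 1.0:                          # inf and NaN compare False
            count += 1
    return count


hot_glow = 4.0   # a highlight four times brighter than display white
print("half float stores:", to_half(hot_glow))
print("8-bit stores     :", to_8bit(hot_glow), "(clipped to white)")
print("half-float values in 0..1:", half_values_in_unit_range(), "vs 256 for 8-bit")
```

Half-float values are spaced much more finely toward black, and anything brighter than white (a hot glow, say) survives instead of clipping.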

|

|

By animagic - 6 Years Ago

|

|

For me, banding and Moire are the biggest issues I run into now. Whenever that occurred with iClone's old renderer, I could just add some noise to the Diffuse texture, but that doesn't always seem to work. So, overall the output looks much better, but I also encounter more problems I have to work around.

|

|

By planetstardragon - 6 Years Ago

|

Actually, the PNG image sequence format is 32 bit. That's really the proper way to render the best HD formats for commercial / big budget release. Any other format is just trying to find a shortcut with codecs.

You can actually go up to 32-bit 8K in an iClone PNG image sequence, but prepare to eat up a ton of drive space, sit through long render times, work with proxy formats during editing, and then wrestle with the encoder in post production.

|

|

By yoyomaster - 6 Years Ago

|

Forgot to mention that Eevee now supports particle hair, yes, real hair, and there is a new Cycles/Eevee advanced hair shader in the making as we speak!

|

|

By illusionLAB - 6 Years Ago

|

"actually the png image sequence format is 32 bit"

Not true.

What we call 8 bit are actually 24 bit files... that is, 8 bits per channel for R, G and B (8+8+8 = 24 bit file). PNG supports an alpha, which adds another 8 bit channel, making the file 32 bits in total. When we refer to 16 or 32 bit files, they too are calculated "per channel", so a 16 bit file is R16, G16, B16, A16, making the file 64 bit. There are only a handful of 32 bit depth formats... PSD, HDR, EXR and TIFF are the most used in production (PNG maxes out at 16 bit).
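The per-channel versus per-pixel arithmetic above, as a tiny sketch (plain integer math, nothing format-specific assumed):

```python
def bits_per_pixel(bits_per_channel: int, channels: int) -> int:
    """Total bits stored for one pixel: per-channel depth times channel count."""
    return bits_per_channel * channels


def levels_per_channel(bits_per_channel: int) -> int:
    """Number of distinct values a single channel can hold."""
    return 2 ** bits_per_channel


# "8 bit" RGB is a 24-bit pixel, RGBA a 32-bit pixel; "16 bit" RGBA is 64-bit.
for bpc, ch in [(8, 3), (8, 4), (16, 4)]:
    print(f"{bpc}-bit x {ch} channels = {bits_per_pixel(bpc, ch)} bits per pixel, "
          f"{levels_per_channel(bpc)} levels per channel")
```

So when someone calls a PNG "32 bit", they are usually counting the whole RGBA pixel, which is still only 256 levels per channel.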

This graphic shows an iClone spotlight rendered as PNG (8 bit) and a similar spotlight in Fusion rendered at 16 bit. They appear similar, but the histograms clearly show the 256 'steps' from black to white on the 8 bit resolution, versus the highly compacted 65536 'steps' of 16 bit resolution. 8 bit can display smooth gradients, but manipulating the colour and contrast soon shows the 'steps' which is why it's prone to "banding".
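To see why the 8 bit histogram falls apart once you grade it, here is a small simulation. The numbers are made up (not the Fusion test in the graphic): a plain 0.4 to 0.6 grey ramp across 1920 pixels, stored at 8 or 16 bit, then a contrast stretch that maps 0.4 to 0.6 onto full black-to-white for an 8 bit display. It ignores gamma and any dithering.

```python
def quantize(value: float, bits: int) -> float:
    """Round a 0..1 value to the nearest code of an integer format."""
    top = 2 ** bits - 1
    return round(min(max(value, 0.0), 1.0) * top) / top


def graded_levels(source_bits: int, width: int = 1920,
                  lo: float = 0.4, hi: float = 0.6) -> int:
    """Count distinct 8-bit display levels left after stretching lo..hi to 0..1."""
    levels = set()
    for x in range(width):
        v = lo + (hi - lo) * x / (width - 1)    # smooth horizontal gradient
        stored = quantize(v, source_bits)        # what the file actually holds
        graded = (stored - lo) / (hi - lo)       # contrast stretch in the grade
        levels.add(round(min(max(graded, 0.0), 1.0) * 255))
    return len(levels)


print(graded_levels(8), graded_levels(16))
```

The 8 bit source only ever had about 52 codes in that range, so after the stretch the display shows 52 levels with gaps between them (the "steps"), while the 16 bit source still fills all 256 display levels.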

It's exactly the same as audio format bit depths... a 16 bit audio file has 65,536 possible values to represent one sample, whereas a 24 bit audio file has 16,777,216 values per sample (that's 256 times more resolution!)
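The audio figures check out with the same power-of-two arithmetic; the ratio is 2^(24-16) = 2^8:

```python
def sample_values(bit_depth: int) -> int:
    """Distinct integer values available for one audio sample."""
    return 2 ** bit_depth


ratio = sample_values(24) // sample_values(16)
print(sample_values(16), sample_values(24), ratio)  # 65536 16777216 256
```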

|

|

By TonyDPrime - 6 Years Ago

|

So, iClone is somehow prevented from ever rendering out true-32 bit, due to some fashion by which it currently operates?

On a side note, I am using Unity... man, you have to rebuild every single material. Even if exported out properly, you have to go in and tweak.

I am not giving up, but I have seen this with UE4...It gets to the point where you get done, and then you're like, "the avatar looked better in iClone to begin with..."

However, for backgrounds, environments, and effects, they are better than iClone's PBR. Like, iClone has better character PBR, and the game engines have better environment PBR... at least for iClone characters.

|

|

By illusionLAB - 6 Years Ago

|

|

Rendering with iRay may offer a higher bit depth for output - as iClone can work with HDR format, which is 32bit, (not 16 bit or 16 bit half float like I mentioned earlier) then it's most likely capable of rendering higher "bit depth" formats. Obviously, it won't improve your materials if they are all 8 bit jpeg/png/targa... but at least lighting falloffs and glows could render without banding. Hopefully iRay rendering will also give us access to "passes" like z-depth, diffuse, reflection, uv etc.

|

|

By TonyDPrime - 6 Years Ago

|

You know what is interesting, whereas iClone's Post Processing Bloom gives you banding, Octane Render can output Bloom, as a Post Processing effect, and it lets you render 8 or 16 bit PNG, and EXR.

But on a 16 bit PNG, there is no Bloom banding. (I never use Octane's 8 bit, so I don't know what the case is in that instance.) Meaning, EXR is not required for an image without Bloom banding.

And then in Unreal Engine, its Post Processing Bloom also produces no Bloom banding.

So aside from not meeting a Netflix requirement, iClone's Bloom just looks bad. Probably the design of it somehow...

So, as (1) Octane and (2) UE4 can output a standard 16 bit render *without* Post Processing Bloom banding, I think it is in fact an iClone 'bug', and not something that would be solved actually by a higher quality output.

I say bug, because:

(1) Q - YES or NO, is the intent of iClone's HDR bloom to produce banding?

A - NO

Thus, iClone bug.

(2) Q - Do Octane Render or UE4 require 32 bit image processing in order to achieve Bloom without Bloom banding?

A - NO

Thus, iClone bug.

I think if you could output to 32 bit in iClone, as is, you would in fact just get a higher quality Bloom banding.

|

|

By TonyDPrime - 6 Years Ago

|

|

Dupenheimmerspeilichtenzuckht

|

|

By yoyomaster - 6 Years Ago

|

Here is a short video showing Blender Eevee's capabilities at the moment. Pretty amazing, and Blender can output 8 or 16 bits, even full float OpenEXR images. Doesn't get any better than that IMHO!

|

|

By illusionLAB - 6 Years Ago

|

|

I'm very impressed with eevee and if I'm going to take the time to learn another software, the Blender route makes much more sense than trying to use game engines. I figure if I start getting comfortable in Blender now... once 2.8 is released I'll have better than a working knowledge and should find it easy to move my iClone projects (providing RL fixes their FBX export/import issues before then!)

|

|

By animagic - 6 Years Ago

|

|

Tony, banding occurs in other instances as well, and also with non-iClone imaging. From what I understand it's just a limitation of 8-bit per color channel output. I wouldn't call it a bug, as I don't think there is a way you can prevent it with 8-bit output. Going to 16 bit would resolve that, or possibly adding some noise component like I did in iClone 5.

|

|

By illusionLAB - 6 Years Ago

|

Banding is not an iClone bug... but a limitation of images created in 8 bits. Our computer monitors and TVs can only display 8 bits (yes, there are exceptions) so it's generally an accepted 'display' format.

Any sort of gradient... sky, glow, shadows etc. is prone to banding, as the change in luma may be very gradual. So, imagine a gradient from 50% grey to 60% grey across an HD image. With 8 bit having only 256 "steps" of luma, that 10% change of luma would be represented by only 255 x 0.1 = 25.5 steps across 1920 pixels, so each flat band is roughly 75 pixels wide - hence the distinct bands. The same scenario with 16 bit images means you'd have 65535 x 0.1 = 6553.5 steps of luma across 1920 pixels - more than 3 steps per pixel, far more than needed to avoid banding.
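The band-width arithmetic from the 50% to 60% grey example, as a sketch. It counts the intervals between codes (2^bits - 1) and ignores gamma, dithering and compression:

```python
def band_width_px(width_px: int, start: float, end: float, bits: int) -> float:
    """Average width in pixels of each flat band when a start..end ramp
    (fractions of full scale) is spread over width_px pixels."""
    steps = (end - start) * (2 ** bits - 1)   # number of code changes available
    return width_px / steps


print(band_width_px(1920, 0.5, 0.6, 8))    # about 75 px per band: visible
print(band_width_px(1920, 0.5, 0.6, 16))   # well under 1 px per step: smooth
```

Once a step is narrower than a pixel there is nothing left for the eye to see as a band, which is why 16 bit gradients hold up.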

To get 'true' 16 bit depth renders, your texture bitmaps would all need to be 16 bit as well. This puts a tremendous strain on any software trying to do 'real time'. In the "high end" animation/VFX world we primarily use "floating point 16 bit" files (like EXR), as they can offer the same quality as 32 bit integer in a smaller file size - of course, "real time" is not even a consideration.
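For anyone curious what "floating point 16 bit" (half float) actually stores, here is a sketch using Python's `struct` module, whose `'e'` format packs IEEE 754 half-precision values. It prints the gap to the next representable value at a few brightness levels and compares it to a 16 bit integer step. Half floats spend their precision unevenly: finer than 16 bit integer in the darks, coarser near 1.0, with headroom for values above 1.0 up to 65504.

```python
import struct


def next_half_up(x: float) -> float:
    """Next representable IEEE 754 half-precision value above x (x >= 0, finite).

    x is first rounded to half precision by struct's 'e' format."""
    (bits,) = struct.unpack("<H", struct.pack("<e", x))
    (nxt,) = struct.unpack("<e", struct.pack("<H", bits + 1))
    return nxt


# Largest finite half value has the bit pattern 0x7BFF.
HALF_MAX = struct.unpack("<e", struct.pack("<H", 0x7BFF))[0]

for v in (0.01, 0.5, 1.0):
    h = struct.unpack("<e", struct.pack("<e", v))[0]   # v rounded to half
    print(f"near {v}: half step {next_half_up(v) - h:.2e}, "
          f"16-bit integer step {1 / 65535:.2e}")
print("half float max:", HALF_MAX)
```

Whether that counts as "the same quality" depends on where in the range your image lives; for glows and HDR highlights the headroom above 1.0 is usually the part that matters.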

|

|

By sonic7 - 6 Years Ago

|

My Laptop PC seems to show up this 'banding' issue quite noticeably (more than a desktop?) - don't know for sure.

Maybe it's also a graphics card related setting? .... But I'm acutely aware of it and don't like it at all.... I don't understand the deeper technical side of things, but my eye knows when something looks right or not. (Well, most of the time anyway).

** Like when you have a transition from a shade of gray through to a 'slightly' darker shade of gray, but over a large(ish) distance ..... and it looks like a relief contour map !! Lol

|

|

By justaviking - 6 Years Ago

|

@Sonic - Laptops are notorious for having bad displays (cheap screens). You can buy a cheap $200 tablet device, and it will have a better screen than a $1,200 laptop. Slowly, ever so slowly that is changing.

The "banding" issue, as explained, can be caused by 8-bit input images to even the best software. Here is a post in the Substance Designer forum, complete with picture. If you look closely, it looks like a "contour map."

https://forum.allegorithmic.com/index.php/topic,24285.0.html

|

|

By TonyDPrime - 6 Years Ago

|

Yeah, but UE4's Bloom and Octane's bloom don't produce banding. I use them so I experience first hand.

However, okay, for Octane they are 16-bit renders. For UE4, I don't know what the heck it is actually. Production - Default.

@Animagic, I don't dispute that banding exists elsewhere, but mind you, your fix was in fact for iClone, so I'm just pointing out that other rendering tools don't have the issue at 16-bit.

But yeah, watch the Eevee video YoYoMaster posted with the creature. At the end when it is a dark scene with a light, you do see the banding in Eevee, too.

@Illusionlab - okay, let's go with the idea that you need 16 bit to eliminate banding, or reduce it, but then I thought you had said in this thread that iClone probably outputs 16-bit?

But so, it is actually 8-bit? In iClone's case, PNG vs JPG does nothing as far as banding goes. If they gave us EXR and the banding went away, that would reveal a lot.

Because, if the banding could be fixed by implementing a 16 bit render output, then I'm a 'bit' disappointed that iClone hasn't done this yet.

So that's why I say a bug. Maybe not a programming glitch coding bug, but definitely a performance/output bug.

It is not the intended output AND there is NO way around it through iClone itself.

Anyway at minimum, we all wish it would go away!

|

|

By illusionLAB - 6 Years Ago

|

"let's go with the idea that you need 16 bit to eliminate banding"

It's not an idea it's a fact. There are, however, ways to minimize 'banding'... like adding noise or dithering - usually "a process" when creating the rendered file.
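A minimal sketch of what "adding noise or dithering" does at the moment a float value is written to 8 bit. It uses the simplest rectangular (RPDF) dither, noise one code wide; real renderers and encoders may use other dither shapes, so treat this as an illustration only. Without dither, a value sitting between two codes always lands on the same code (a flat band); with dither, neighbouring pixels scatter between the two codes and average out to the true value.

```python
import random


def quantize_8bit(value, rng=None):
    """Convert a 0..1 float to an 8-bit code.

    If rng is given, add rectangular noise one code wide before rounding."""
    scaled = value * 255
    if rng is not None:
        scaled += rng.uniform(-0.5, 0.5)
    return max(0, min(255, round(scaled)))


rng = random.Random(1)   # fixed seed so the demo is repeatable
target = 0.5025          # sits between codes 128 and 129 (128.1375)
plain = [quantize_8bit(target) for _ in range(20000)]
dithered = [quantize_8bit(target, rng) for _ in range(20000)]
print(sum(plain) / len(plain))          # always 128: a flat band
print(sum(dithered) / len(dithered))    # averages close to 128.14
```

The trade-off is a fine grain in place of hard edges, which is usually far less visible than banding.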

The effects that produce the most banding in iClone are essentially 'real time' post effects, so I suspect they are calculated as 8 bit to maintain 'real time' performance (they could actually be provided by the graphics card hardware... so there's nothing RL could do to change it). If they were calculated at 16 bit during final render it would pretty much solve the issue (at the expense of render time). The upcoming addition of iRay rendering will improve things: as it's 32 bit, it should substitute iC glows, lighting etc. with its own (converted, like Indigo did). I amended my guess at iClone's architecture to 32 bit as it does handle HDR - but just because the environment is 32 bit does not mean all the calculations are made at 32 bit... this is probably true of all the game engines, as they couldn't possibly offer real time otherwise. It's common practice to dynamically assign bit depths 'where they're needed' - you can get away with 8 bit for most things... like texture maps.

Also, it's absolutely possible to see 'banding' on 16 or 32 bit images... like I said before 'most' computer displays are 8 bit (the higher quality the better the 'interpolation'). My setup is optimized for colour grading, so I've splurged for a 10 bit (30bitRGB) monitor... driven by a 10 bit Quadro card. All 'gaming' cards, like 1080s, are 8 bit. My theory is iClone actually uses the images generated by the graphics card for renders... which would ultimately mean the PBR renderer in iClone will never produce a 16 bit image. Again, this is why iRay (or other 3rd party 'non real time' renderer) will be the only option for 16 or 32 bit depth images.

|

|

By yoyomaster - 6 Years Ago

|

|

Banding, from my experience, appears mostly in overblown shots, like with bloom. Also, depending on the codec used for streaming, it may become more apparent, so I understand why Netflix asks for 16 bits. Only having 8 bits is definitely a limitation on iClone's part if you are looking to output for any serious production, not to mention that many compositing tools prefer 16 bits over 8 bits!

|

|

By yoyomaster - 6 Years Ago

|

Another Eevee render test.

Model by Jakub Chechelski, you can find it on Gumroad at https://gumroad.com/l/JiRtZ. I gave 5 bucks for it, as it is a great model and a great asset for learning character texturing in Substance Painter. The Node Wrangler add-on's Ctrl+Shift+T shortcut makes it a snap to set up Substance Painter textures in Cycles or Eevee; it took me a whole 5 minutes to set this up, including the SSS setup. This was rendered in Blender Eevee in 19 minutes (600 frames) with 25 4K maps, on a GTX 960 with only 2GB of RAM, which is impressive!

|

|

By TonyDPrime - 6 Years Ago

|

yoyomaster (6/13/2018)

Another Eevee render test. Model by Jakub Chechelski, you can find it on Gumroad at https://gumroad.com/l/JiRtZ, I gave 5 bucks for it, as it is a great model and a great asset to learn character texturing in Substance Painter. The Node Wrangler add-on with the CTRL/SHIFT/T combo key, makes it a snap to setup Substance Painter textures in Cycles or Eevee, took me a whole 5 minutes to set this up, including the SSS setup. This was rendered in Blender Eevee in 19 minutes (600 frames) with 25 4K maps, on a GTX 960 with only 2GB of ram, which is impressive!

How many maps does this thing have? 5 minutes, but how many textures did you have to set up?

Just curious what kind of workflow you are really going to have to deal with here when it comes to a full scene, or avatar, coming from iClone. Looks cool!

|

|

By TonyDPrime - 6 Years Ago

|

|

illusionLAB (6/13/2018)

"let's go with the idea that you need 16 bit to eliminate banding"

It's not an idea it's a fact. There are, however, ways to minimize 'banding'... like adding noise or dithering - usually "a process" when creating the rendered file.

The effects that produce the most banding in iClone are essentially 'real time' post effects, so I suspect they are calculated as 8 bit to maintain 'real time' performance (they could actually be hardware provided from the graphics card... so there's nothing RL could do to change it). If they were calculated at 16 bit during final render it would pretty much solve the issue (at the expense of render time). The upcoming addition of iRay rendering will improve things, as it's 32 bit it should substitute iC glows, lighting etc. with it's own (converted, like indigo did). I amended my guess at iClone's architecture to 32 bit as it does handle HDR - but, just because the environment is 32 bit does not mean all the calculations are made at 32 bit... this is probably true of all the game engines, as they wouldn't possibly be able to offer real time. It's common practice to dynamically assign bit depths 'where they're needed' - you can get away with 8 bit for most things... like texture maps.

Also, it's absolutely possible to see 'banding' on 16 or 32 bit images... like I said before 'most' computer displays are 8 bit (the higher quality the better the 'interpolation'). My setup is optimized for colour grading, so I've splurged for a 10 bit (30bitRGB) monitor... driven by a 10 bit Quadro card. All 'gaming' cards, like 1080s, are 8 bit. My theory is iClone actually uses the images generated by the graphics card for renders... which would ultimately mean the PBR renderer in iClone will never produce a 16 bit image. Again, this is why iRay (or other 3rd party 'non real time' renderer) will be the only option for 16 or 32 bit depth images.

Interesting. How come a 3x SS (supersampled) render from iClone, taking 6 seconds to render at UHD, doesn't eliminate the banding?

It's just an up and down-scaled 8-bit image?...

Remembering those introductory iClone 7 tutorials, Stuckon3D had never discussed THIS stuff with Kai in the tutorials when they spoke about the realtime PBR workflow.

You never heard them say, "Oh, by the way, for those of you looking for 16-bit output with no banding from the bloom, this is not going to give you that performance yet..."

So, either they didn't know, despite all the background in lighting and 3D, etc., in which case we users are more observant and interested in the subject of image output.

Or, they just figured, "Why bother explaining it, let's not explain in detail and hope nobody cares or notices."

|

|

By yoyomaster - 6 Years Ago

|

|

Banding has nothing to do with scaling up and then down; it has to do with color depth. An 8-bit image (about 16.7 million colors) just doesn't have enough color depth to totally prevent banding, while a 16-bit image (about 281 trillion colors) does!
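Where the color counts come from: levels per channel raised to the number of channels (RGB, no alpha). For reference, the often-quoted "68 billion" figure corresponds to 12 bits per channel, not 16.

```python
def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct RGB colors representable at a given per-channel bit depth."""
    return (2 ** bits_per_channel) ** channels


for bpc in (8, 12, 16):
    print(f"{bpc} bits per channel: {total_colors(bpc):,} colors")
```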

|

|

By TonyDPrime - 6 Years Ago

|

In Octane Render, when you render with the Bloom Post Processing effect, the render completes, and then you can save the image in a format. But the image saving part isn't really what slows down the rendering part, as far as the GPU is concerned. As in, Octane's render engine isn't held up by completed image output time. It processes rendering of textures and lighting, then saves the 16-bit PNG image, even a UHD one, almost instantly.

So in iClone, actually, if we did have 16 Bit, we may not have a noticeably slower rendering time after all...I'm not saying it would be, in mathematical absolute terms, as fast as an 8-bit render, but it may be an inconsequential speed difference.

Okay, so wait, would Netflix accept 16 bit, or do they need 32 bit?

We have to tell RL to give us whatever Netflix requires. Or has someone done this already?

|

|

By yoyomaster - 6 Years Ago

|

|

Heheh, 32 bits is a bit much. These are only used for stuff like vector displacement maps and such, and they are huge files, I mean huge: I have 32-bit OpenEXRs here that are over 800 MB each!

|

|

By TonyDPrime - 6 Years Ago

|

Okay, 16-bit it is!!!

|

|

By TonyDPrime - 6 Years Ago

|

And what is the deal with the TGA file format, still gives banding. Is that higher bit depth, or is it just an 8-bit 'image' packaged in a higher bit depth format?

(which we do not want, as I understand now we want the rendered image's origin to be at least 16-bit, as far as this discussion goes.)

|

|

By yoyomaster - 6 Years Ago

|

|

I don't think TGA supports 16 bits, at least it doesn't in Photoshop!

|

|

By illusionLAB - 6 Years Ago

|

TGA max bit depth is 8. I presume its presence in iClone is as an "uncompressed" still format, with PNG being the "compressed" format.

I just did a couple of tests that confirm that iClone's PBR renders are indeed 'frame grabs' of the display output. Remember the discovery of making the 'window' smaller and larger for different quality of DOF? Well, the same holds true for the other "post process" effects... the HDR glows etc. Changing the size of the viewport is effectively telling the GPU to 'change gears' on the "post process" effects, so if a render is different depending on the size of the viewport, it goes without saying that the render is basically a screen grab of the GPU's output. Although, theoretically, NVIDIA GPUs have 10 bit architecture... it's only really available in their Quadro cards - got to have some reason for charging twice as much! (Also, the software driving the Quadro card has to be written to support 10 bit - Photoshop and DaVinci Resolve are two that do.)

So, there you are... iClone's 'realtime PBR' render is limited to 8 bit. iRay, or other 3rd party renderers will be, in theory, the only way to create 16 and 32 bit depth renders with iClone.

|

|

By yoyomaster - 6 Years Ago

|

I just checked, Blender Eevee can export 32bits OpenEXR, damn!

I read a bit more on the Netflix guidelines, they seem to be just that, guidelines, I doubt they would refuse a movie based on the fact that it is not 16 bits!

|

|

By wildstar - 6 Years Ago

|

Unity, Unreal, Eevee: all these engines export with 32-bit color space. And just to remember:

Unity and Unreal use TAA in realtime, at any resolution; you can render in 8K with 32-bit color space if you want. And it's not seconds per frame, it's frames per second, so the faster your hard drive, the faster the render.

Eevee uses the old method of supersampling, the same one used in Toolbag and iClone (Toolbag is still better and faster than iClone). Supersampling is CPU based and slow; TAA is GPU based and realtime.

This discussion ended for me 2 months back. I just don't use iClone for rendering... final point! (*For me.) But I use iClone for all the other stuff, like facial and body animations.

Everything not related to characters I animate inside Unity. And for camera animation I've got a new love: Cinemachine.

|

|

By yoyomaster - 6 Years Ago

|

Blender Eevee actually uses TAA, and it also uses screen space reflections; I think iClone mainly uses probes. Either way, same goes for me: no rendering in iClone, but it is still a great tool for character animation!

Eevee Roadmap

|

|

By TonyDPrime - 6 Years Ago

|

Wildstar brings out a great point - Unity, Eevee, and UE4 all can render higher than 8-bit (16, 32) with no consequential render time impact.

With Octane, you can select 8-bit, 16-bit, or EXR, and the speed of image save upon render doesn't noticeably change, certainly render speed does not at all.

So imagine if Octane only had the 8-bit option; it sounds like that is where iClone is.

But it sounds like maybe iClone is, by structure, unable to generate beyond 8-bit because the engine 'screenshots' itself in realtime, and in order to be realtime it has to be 8-bit.

Like, the way they have it working, they would have to restructure the whole show, from the start, to run at 16-bit, and then it could capture itself at 16-bit.

Hmmm... I wonder what that would look like, or what impact that would have.

Is that Unity and UE4's visual secret, they are running the whole show at 16-bit? Or, no, but they just have a render mechanism that doesn't work the way iClone's does, you know?

In any event, the Iray part now seems to go beyond a visual raytrace tool. It becomes the de-facto 'answer' to any critique on visual.

Like-

Q - We need (X) in iClone, can we have it?

A- Just use Iray...

|

|

By yoyomaster - 6 Years Ago

|

|

TonyDPrime, iClone can't just be screenshotting itself: if that were the case we could only render at screen resolution, which is not the case. This means that iClone saves the buffer, but it is poorly designed, as it only saves 8 bits of information. And don't forget that Octane is not a real-time engine; it is an unbiased render engine that uses GPU power, while iClone is real-time, well, sort of. Still, modern GPUs store internal data in a linear way, so it is a bad design on the part of RL if iClone can't save 16-bit images IMHO!

|

|

By TonyDPrime - 6 Years Ago

|

yoyomaster (6/15/2018)

TonyDPrime, iClone just cant screenshot itself, if that was the case we could only render at screen resolution, which is not the case, this means that iClone saves the buffer, but it is poorly designed, as it only saves 8 bits of information, and dont forget that Octane is not a real-time engine, it is an un-biased render engine that uses GPU power, while iClone is real-time, well, sort of, but still, modern GPU save internal data in a linear way, so it is a bad design on the part of RL if iClone cant save 16 bits images IMHO!

One thing I want to point out is that even with Iray, that doesn't guarantee iClone will have higher than 8-bit output. They actually need to give us an option for 16-bit output. It's a clerical-administrative matter, they need to either default it to that output depth, or give us a button that lets you select 16-bit, for example. But if default is set to 8-bit, and no 16-bit button, then we get renders at 8-bit.

I know you know this, just mentioning that by mere fact that we say "Iray", this conceptually doesn't mean in all theoretic situations that a render is > 8-bit.

Interesting what you mention, a design choice to "save the buffer". (LOL- when I mentioned the screenshot, I didn't mean it literally, I was using it to refer to the 'capture of data', like you are terming it here, 'saves the buffer')

And so this 'buffer' is then inflexible, like in this case it would need a 16-bit structured engine running underneath in order to save a 16-bit buffer, right? Or, no maybe?

|

|

By animagic - 6 Years Ago

|

As far as I know, PNG only supports 8-bit, so it would require another image format. I personally wouldn't rush to 16-bit yet as it involves immense storage requirements. And unless there is a guarantee that the final distribution format supports it, it would be a waste of space, I think...

It's a bit like a 192 kHz sample rate in audio. It makes more sense to me to introduce dithering or some such measure.
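To put rough numbers on the storage worry, here is a sketch of uncompressed frame sizes, assuming RGB with no alpha and the 4096 x 2160 at 24 fps figures from the start of this thread. Real PNG, TIFF or EXR sequences will be smaller thanks to compression, so treat these as an upper bound.

```python
def frame_bytes(width: int, height: int, bits_per_channel: int,
                channels: int = 3) -> int:
    """Uncompressed size of one frame in bytes."""
    return width * height * channels * bits_per_channel // 8


def gigabytes_per_minute(width: int, height: int, bits_per_channel: int,
                         fps: int = 24, channels: int = 3) -> float:
    """Uncompressed storage for one minute of frames, in GB (10^9 bytes)."""
    return frame_bytes(width, height, bits_per_channel, channels) * fps * 60 / 1e9


for bpc in (8, 16, 32):
    print(f"{bpc}-bit: {frame_bytes(4096, 2160, bpc) / 1e6:.1f} MB per frame, "
          f"{gigabytes_per_minute(4096, 2160, bpc):.1f} GB per minute")
```

Each doubling of bit depth doubles the raw size, so going from 8 to 16 bit costs the same factor of two on every frame.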

|

|

By yoyomaster - 6 Years Ago

|

|

PNG and TIFF support both 8 and 16 bits; OpenEXR supports 32 bits!

|

|

By illusionLAB - 6 Years Ago

|

The whole "bit depth" equation can be a bit confusing. The 'shortcomings' we're noticing can't be blamed entirely on 8 bit. All the CGI we view, whether it's on our monitors or TV, is 8 bit... and yet most professional productions are free of 'banding' and other artifacts. The key is the 'creation' process. A raytrace renderer like Octane will always render in 32 bit, which is why you won't see any speed differences when 'saving' as 16 or 8 bit - operative word being 'saving'. Octane creates a 32 bit image in its buffers and 'saves' it to any format you like - clever algorithms work hard to maintain the integrity of the image as it's being written to a lower bit depth. Exactly the same as the digital audio equivalent... you may record at 24 bit, but if you're making a CD it has to be 16 bit - dithering and noise shaping are needed to help control the artifacts created when reducing the resolution. iClone supports HDR, which is 32 bit, so the "engine" is capable of feeding a raytracer to make the calculations at 32 bit. iRay supports 16 and 32 bit output with Daz, so I'm pretty sure we'll see the same in iClone.
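A sketch of the "render in float, save to integer" step, under simplifying assumptions: a linear 0..1 float buffer, plain rounding, no dithering or noise shaping, and no gamma handling (so not how Octane actually writes files). It shows the two things that change when the output format is smaller than the buffer: the rounding error grows from about 1/131070 at 16 bit to about 1/510 at 8 bit, and anything brighter than 1.0, like a glow core, is clipped in either integer format.

```python
def to_integer_codes(pixels, bits):
    """Write float pixel values to an integer format with `bits` per channel.

    Values outside 0..1 (e.g. an over-bright glow core) are clipped."""
    top = 2 ** bits - 1
    return [round(min(max(p, 0.0), 1.0) * top) for p in pixels]


def max_error(pixels, bits):
    """Largest difference between each clipped float value and its stored code."""
    top = 2 ** bits - 1
    codes = to_integer_codes(pixels, bits)
    return max(abs(c / top - min(max(p, 0.0), 1.0)) for c, p in zip(codes, pixels))


buffer = [i / 999 for i in range(1000)]   # a smooth ramp, as a float buffer might hold
print(max_error(buffer, 8), max_error(buffer, 16))   # at most ~0.002 vs ~0.0000076
print(to_integer_codes([0.25, 1.0, 4.0], 8))         # the 4.0 highlight clips to 255
```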

|

|

By TonyDPrime - 6 Years Ago

|

animagic (6/15/2018)

As far as I know, PNG only supports 8-bit, so it would require another image format. I personally wouldn't rush to 16-bit yet as it involves immense storage requirements. And unless there is a guarantee that the final distribution format supports it, it would be a waste of space, I think...  It's a bit like a 192 KHz sample rate in audio. It makes more sense to me to introduce dithering or some such measure.

The whole point of this thread is to meet a company's requirement for a submitted media work. So on that level, you would need your rendering agent capable of outputting whatever their requirement is. Even if, like you say, you weren't typically making or distributing at that higher bit depth. With audio, hell, I'd even prefer a simple EQ and pan in iClone itself so I wouldn't have to use an external NLE, but some prefer it.

Eh? EH?!!!......

Anyway...

|

|