By GOETZIWOOD STUDIOS - 9 Years Ago

Hi,

It seems the iClone lipsync engine has a hard time detecting visemes, or at least placing the detected visemes correctly.

I did a little test to verify this, and for comparison I also placed the visemes manually (up to "it's like a jungle..."):

(iClone script generated from the a cappella file)

As you can see, the results are far from optimal, but perhaps some of you have gotten better results?

If you have done lipsync tests and experiments on your side, I would be interested to hear from you.

Cheers,

Guy.

By mrmdesign - 9 Years Ago

This looks really cool. Great song too.

I have been trying a few things out with CrazyTalk 8 and getting worse results. I have considered using the text-to-speech tool to create the correct lip/mouth movements and then doing a voice-over in another program, but then the timing wouldn't flow as well.

I will also try in iClone and let you know how I get on.

By justaviking - 9 Years Ago

I think iC6 is improved over iC5, but if you want the best possible results, plan on doing some manual viseme editing. For clean-up, removing some visemes usually helps. That's step #1 for me.

iC5 had way too many visemes, especially too many "None" visemes, making the mouth look spastic. A good "live" recording has always given me better results than Text-to-Speech. And it should be "voice-only": the engine cannot separate the vocals from the music in a song. I record my own voice-over for a song to get the visemes, and replace the audio in my video editing software.
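A rough sketch of the clean-up step described above (thinning out redundant keys, especially brief "None" visemes) is below. It works on a plain, hypothetical list of frame/viseme keys rather than on iClone itself; the `VisemeKey` type, the `reduce_viseme_keys` function, the viseme labels and the `min_none_frames` threshold are assumptions for illustration, not iClone's API or its Reduce Lip Keys feature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VisemeKey:
    frame: int   # frame at which the viseme key sits
    viseme: str  # viseme label, e.g. "AE", "Oh", "None" (hypothetical labels)


def reduce_viseme_keys(keys, min_none_frames=3):
    """Drop redundant viseme keys (hypothetical clean-up heuristic).

    Removes (1) a key that repeats the viseme of the previously kept key,
    and (2) a "None" key that lasts fewer than `min_none_frames` frames
    before the next key, i.e. a brief mouth-closing flicker.
    """
    keys = sorted(keys, key=lambda k: k.frame)
    kept = []
    for i, key in enumerate(keys):
        if kept and kept[-1].viseme == key.viseme:
            continue  # same shape as the previous kept key: redundant
        if key.viseme == "None" and i + 1 < len(keys):
            if keys[i + 1].frame - key.frame < min_none_frames:
                continue  # too-short "None" flicker: skip it
        kept.append(key)
    return kept


keys = [VisemeKey(0, "AE"), VisemeKey(4, "AE"), VisemeKey(8, "None"),
        VisemeKey(9, "Oh"), VisemeKey(15, "None")]
print([(k.frame, k.viseme) for k in reduce_viseme_keys(keys)])
# → [(0, 'AE'), (9, 'Oh'), (15, 'None')]
```

The threshold is a judgment call: the final "None" survives because nothing follows it, which matches the idea that a real pause should stay while one-frame flickers go.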

If you spend too much time editing visemes, real live people talking to you will start to look funny, and after a while you're not sure what looks real and natural anymore. :P

By mtakerkart - 9 Years Ago

One of my favorite songs ever, Grabiller! Motion on the beat is very rare in the iClone world. I have never had better results, and my problem is that most of my voices are in French, and as you know there are French sounds that don't exist in English, which the iClone detection algorithm is unable to detect. Because a lot of TV network cartoon productions are only so-so at matching visemes to mouth shapes, the audience is very tolerant of lipsync. I focus only on the vowels.

By mtakerkart - 9 Years Ago

I know, Swooop. I can get a perfect result, but the time it takes goes against the reason I bought iClone. It would be just as time-consuming as Blender or Maya.

But I notice that iClone is optimized for English visemes. In the expression editor, if I build a perfect "th" viseme with the tongue visible against the upper teeth, it's useless because this sound doesn't exist in French. What English speakers pronounce as "e" is "i" in French. Maybe with the new render release I'll build an efficient CC viseme set for French. ;-)

By animagic - 9 Years Ago

@grabiller: I usually edit the visemes, especially for close-ups. iClone viseme detection has not substantially improved since it was introduced. It got a little bit better in iClone 6 as far as blending goes, but there are still many spurious visemes being generated.

For a couple of years now I have suggested an approach where the viseme detection gets help from the text of the dialog. This approach is not new; I came across it in a product called LipSync (now Mimic) that allowed you to do lip-synching in Poser. It worked quite well. Maybe I should make this a wishlist feature, because things could be much better. Or we get your SDK/scripting approved and we can build our own.

By VirtualMedia - 9 Years Ago

You did a helluva job on this!

justaviking and sw00000p had some very helpful comments. I generally delete all the suggested visemes straight away, as it takes longer to hunt, peck, move and delete than to put what I want when and where I want it. One thing I would add is that the mouth always makes the shape a few frames before the sound, and, to paraphrase JV, what you don't set is as important as what you do, just like playing music. Don't forget holds, and avoid twinning (two similar sounds next to each other): tweak one to show movement of the mouth while retaining a slightly different but similar shape. It's a skill that develops over time and eventually becomes second nature; you won't need reference charts anymore.
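The two timing rules in that post (the mouth shape leads the sound by a few frames, and adjacent look-alike shapes should be varied) can be sketched on a plain key list. This is a hypothetical Python illustration on (frame, viseme) pairs: the `SHAPE_GROUPS` table, the viseme labels, and the two-frame default lead are assumptions, not iClone data or settings.

```python
from typing import Dict, List, Tuple

# Hypothetical grouping of viseme labels into similar mouth shapes;
# adjust to match whatever viseme set your tool actually uses.
SHAPE_GROUPS: Dict[str, str] = {
    "B_M_P": "closed",
    "F_V": "teeth-lip",
    "Oh": "round",
    "W_OO": "round",
    "AE": "open",
    "Ah": "open",
}

Key = Tuple[int, str]  # (frame, viseme label)


def lead_keys(keys: List[Key], lead_frames: int = 2) -> List[Key]:
    """Shift every key earlier so the mouth shape forms before the sound.

    Frames are clamped at 0, so keys near the start may share frame 0.
    """
    return [(max(0, frame - lead_frames), viseme) for frame, viseme in sorted(keys)]


def find_twins(keys: List[Key]) -> List[Tuple[Key, Key]]:
    """Return adjacent key pairs whose visemes fall in the same shape group."""
    ordered = sorted(keys)
    twins = []
    for prev, curr in zip(ordered, ordered[1:]):
        group = SHAPE_GROUPS.get(prev[1])
        if group is not None and group == SHAPE_GROUPS.get(curr[1]):
            twins.append((prev, curr))
    return twins


keys = [(10, "Oh"), (14, "W_OO"), (20, "AE"), (25, "B_M_P")]
print(lead_keys(keys))   # → [(8, 'Oh'), (12, 'W_OO'), (18, 'AE'), (23, 'B_M_P')]
print(find_twins(keys))  # → [((10, 'Oh'), (14, 'W_OO'))]
```

A fixed lead for every key is the simplest reading of "a few frames before the sound"; flagged twins are only candidates, and the actual fix (tweaking one shape so the mouth visibly moves) is still done by eye.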

One thing I hope gets addressed soon is IC's facial animation limitations. One of the first rules of creating believable character facial animation and lipsync is to avoid twinning, as in the mouth repeating the same static shapes over and over. Watch anyone talk and notice that their mouth, eyes, cheeks and lips seldom move symmetrically; the left side seldom mimics the right side. Ignoring this is what gives the robotic, wooden appearance. Trying to make asymmetrical facial expressions and visemes in IC is difficult when you only have a couple of sections of the face to move, which often makes a character's face look disfigured.

Rant aside, you did a great job on this; you obviously know what you're doing.

By VirtualMedia - 9 Years Ago

Thanks for the comments, sw00000p. I've been at it for a while and am always learning. I can't understand why RL keeps adding all the random bells and whistles and doesn't address the animation limitations; it's really a shame considering IC at its core is an animation program.

By Bellatrix - 9 Years Ago

grabiller (5/29/2016)

Hi,

It seems the iClone lipsync engine has a hard time detecting visemes, or at least placing the detected visemes correctly.

If you have done lipsync tests and experiments on your side, I would be interested to hear from you.

Yep, key alignment is an issue, though not as big as the issue of excess redundant keys

Your actors look like CC characters... (and that's a simple but effective use of HDR, btw :))

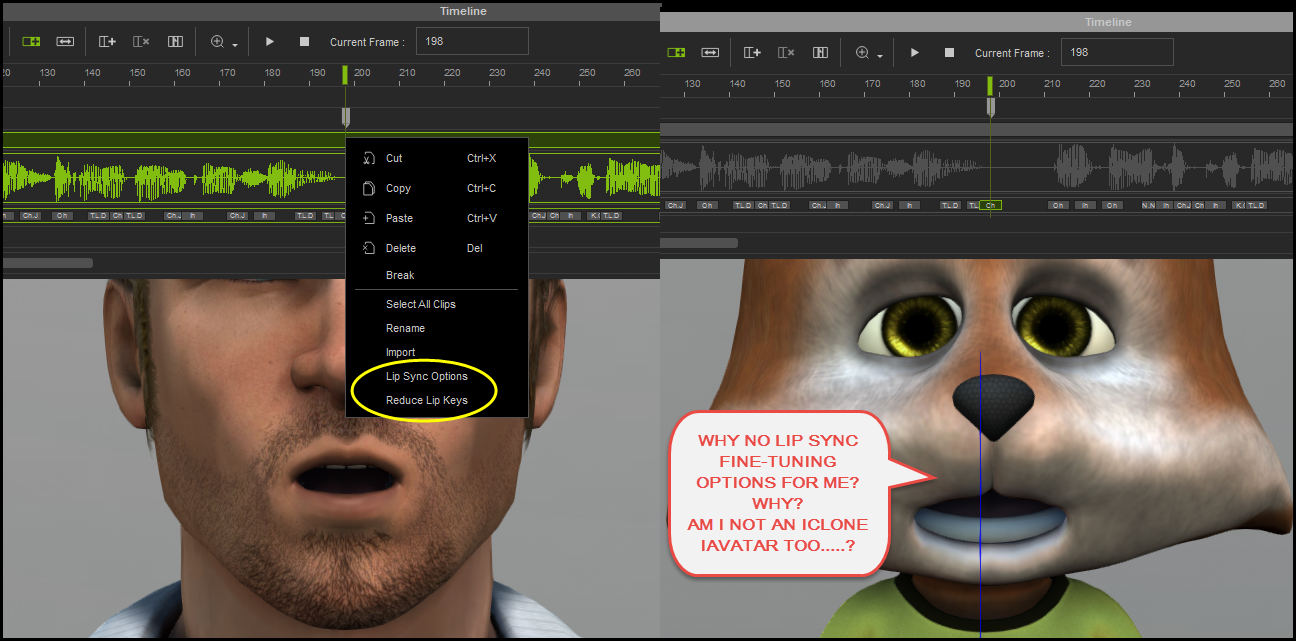

I trust you've already cleaned up the auto-generated mess with the two extra right-click options that are enabled only for CC characters

CC characters certainly have more refined viseme shapes than G5 ones

(even so, I always redo and customize all lipsync morphs and face profiles)

But no amount of face profile customization expertise can overcome an antiquated lip sync process

iCloners who use G5, G6, Genesis 1/2/3, Poser, Max, Maya, Blender or Mixamo rigs

still suffer unnecessary time loss: no way to reduce the redundant key mess, and no smoothing fix.

Either do it manually and eyeball it, carefully clicking on cute little overlapping TLD TLD TLD keys,

or do it the "faster" way: erase the auto-generated keys and start all over from scratch!

because...

Two crucial LipSync fixes, Reduce Lip Keys and Lip Sync Options,

are STILL not implemented globally for non-standard or G5/G6 characters!

I have just added a new suggestion:

Enable Lip Sync Options & Reduce Lip Keys for ALL iClone iAvatars

Do vote, or (if you've run out of votes) do add a comment.

By Bellatrix - 9 Years Ago

sw00000p (5/31/2016)

I KNOW Team RL can code "Pose to Pose" animation.

The REAL problem is....

This would eliminate 90% of the EZ buttons.

• No Point and Click... Instant Walk.

• No Click the Path... POOF! the character follows the path.

Users would actually have to LEARN TO ANIMATE.

I'm slightly more optimistic, and I think...

Adding "standard basic" animation tools

will not lead animation novices to suddenly dump presets for DIY custom sets

Just like

Adding "standard basic" dynamic vertex morph tools

will not lead modeling novices to suddenly dump pre-made assets in favor of sculpting

Case in point:

The RL CC dev team has made it SUPER EASY to mouse-manipulate CC body parts

as in gamer-easy to transform neck, trunk, chest, hip... No need to even touch sliders!

Yet?

There remain many perma-novices who'd scoff at "mastering" the EZ-mouse CC interface...

but would spend a crazy amount of money, time and energy to get?

One single hyper-specific PRESET something!

So no worries: RL can add as many new functions,

parameters, splines, controllers and as much skeleton-hierarchy exposure as have been requested...

the novice preset-content consumer market is a done deal.

In STARK contrast...

The pro-ish scripts and functionality consumer front

is 97% under-exploited! :)

IMO, of course

Regardless of what we think RL is thinking,

the "pose to pose" possibility is, at least, being considered,

and at best "Assigned and WIP",

which, incidentally, if the face bones are incorporated as detailed in that request,

will take care of most of the facial expression adjustment issues mentioned in this thread.

So it's not whether RL will improve iClone for character animators.

They will.

It's a question of when.

Yesterday, I hope ;)

By Bellatrix - 9 Years Ago

@Swoop

I'm pragmatic, only seeking the most efficient path to a visualized end

I learn CG organically, acquiring tools and knowledge as the need arises

I use whatever the tool designers come out with, experiment, push limits...

So I'm not set on whether progressive or dynamic vertex morphing is The Way,

or whether "straight" keying is a "better" fix than "graph/curve keying" for the viseme matching issue...

Getting the job done with the fewest clicks, as and when the creative vision arises, is what I aim for

Anywayz...

hopefully still staying close to the topic: lip sync (and related facial expression puppeteering) :P

Those interested in wrinkle-morph...or ERC...

Or the "snappy" face rig in Virtual Media's recent post...

or real-time keying for characters, face parts or body parts...

Another path:

a "beefed up" Avatar Toolkit could well be a potential "global solution" to many iCloner animation headaches...

So I just put in a suggestion (I don't expect anyone to vote; it's just me opining and suggesting).

Some of it is relevant to this discussion, so here is the first paragraph, cut and pasted:

------

Avatar Toolkit 2.5/3.0 - a True Avatar Controller for ALL iClone iAvatars

Avatar Toolkit has puppeteering features superior to the legacy iClone Puppet tools:

-Ability to set and reset keys where the action is (under-sold)

-Ability to dynamically snap/ flex morph in REAL-TIME (under-exploited by RL)

-Ability to control multiple joints at the same time (under-integrated)

-Ability to link MATERIALS (so under-sold that wrinkle/HD morph seekers haven't noticed this AT potential)

-----

Back to main discussion...

I'm optimistic; that's why I continue to make Feedback Tracker suggestions

even if I get 0 votes. I know RL is listening...

(Face) Pose to (Face) Pose options existed in iClone5

All they need to do is reinstate them in Face Key for all iAvatars

And then add Face Key layers, which is not hard at all; body part keys already exist... it's just an extension...

Yes, scripting will solve most of the minor issues...

The Curve/Graph editor has been Assigned too, so there's hope...

RL doesn't need to be a charity; it just needs to stay relevant and beefy enough

Like I said, even if iClone gets to Daz Studio/ Animate2/ Poser Pro level functionality...

people will STILL buy presets, iTalk iEmotion iWalk iFly iTourette...

iClone just needs to add a few more functions to keep itself on par with its mocap peers

Mixamo has cheapened canned animation clips, and dedicated mocap tools' own timelines/sequencers are getting beefier

Export-friendly CC characters still need to be animated in iClone, canned/mocap/DIY clips or not

RL should know iClone needs basic functions to CLEAN UP and EDIT preset/captured clips

starting with the most conspicuous missing Timeline feature:

Multiple MOTION CLIP layers per character... NLA 101

It's a matter of design and vision...

HitFilm, Vegas, Unreal, Daz and Poser have clip layers, and lo-fi animators will look for that!

Nothing to stop iClone getting a new updated motion clip with built-in curve per clip!

Visemes can then be baked (by script or update) into another morph motion clip, sans audio...

another iClone-specific Timeline headache solved...

one tiny step at a time...:)

By planetstardragon - 9 Years Ago

"I'm pragmatic, only seeking the most efficient path to a visualized end"

You said that just to make me smile, didn't you?! Admit it!!
